The role of AI in preventing cybersecurity breaches is no longer a futuristic fantasy; it’s the urgent reality of our hyper-connected world. Cyber threats are evolving at breakneck speed, and attackers are using increasingly sophisticated techniques. Traditional security measures, while crucial, are often playing catch-up. This is where artificial intelligence steps in, offering predictive and proactive defenses against the ever-growing tide of digital dangers. From flagging behavior consistent with zero-day exploits to automating incident response, AI is transforming the cybersecurity landscape, helping organizations stay one step ahead of the bad actors.

This deep dive explores how AI is revolutionizing threat detection, vulnerability management, and incident response. We’ll examine AI-powered tools and techniques, analyze their effectiveness, and even touch upon the ethical considerations that come with deploying such powerful technology. Get ready to unravel the fascinating intersection of AI and cybersecurity – it’s a story of innovation, adaptation, and the ongoing battle for digital safety.

AI-Powered Threat Detection and Prevention

AI is revolutionizing cybersecurity, offering a powerful new arsenal against increasingly sophisticated cyberattacks. Traditional security methods often struggle to keep pace with the evolving tactics of malicious actors, but AI’s ability to learn and adapt makes it a crucial component in building robust defenses. Its power lies in its capacity to analyze vast amounts of data, identifying patterns and anomalies that would be impossible for humans to spot in a timely manner.

AI algorithms analyze network traffic by examining various data points, including packet headers, payload contents, and network flow patterns. They look for deviations from established baselines, such as unusual communication patterns, unexpected data transfers, or attempts to access unauthorized resources. Machine learning models, particularly those based on deep learning, excel at identifying subtle anomalies that might indicate malicious activity. These algorithms are trained on massive datasets of both benign and malicious network traffic, enabling them to distinguish between legitimate and illegitimate actions with increasing accuracy.
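
To make the idea of "deviation from an established baseline" concrete, here is a minimal sketch in Python. It swaps the deep learning models described above for a plain z-score test, which is far simpler but shows the same principle: learn what normal looks like, then flag what falls far outside it. The traffic figures, the function name `find_anomalies`, and the threshold of 3 standard deviations are illustrative assumptions, not values from any particular product.

```python
from statistics import mean, stdev

def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value, z_score) for observations far from the baseline."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    anomalies = []
    for i, value in enumerate(observed):
        # z-score: how many standard deviations the value sits from the baseline mean
        z = (value - mu) / sigma if sigma > 0 else 0.0
        if abs(z) > threshold:
            anomalies.append((i, value, round(z, 2)))
    return anomalies

# Hypothetical outbound traffic for one host, in kilobytes per minute
baseline_kb = [120, 130, 125, 118, 122, 127, 131, 119, 124, 126]
observed_kb = [123, 129, 940, 121]

flagged = find_anomalies(baseline_kb, observed_kb)
print(flagged)  # only the 940 KB burst is reported
```

A real system would track many features at once (ports, destinations, flow durations) and retrain the baseline as traffic changes, but the flag-what-deviates logic is the same.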

AI’s Role in Detecting Zero-Day Exploits and Advanced Persistent Threats

Zero-day exploits and Advanced Persistent Threats (APTs) represent significant challenges for traditional security systems. Zero-day exploits leverage previously unknown vulnerabilities, making signature-based detection ineffective. APTs, characterized by their stealthy and long-term nature, often bypass traditional perimeter defenses. AI provides a powerful countermeasure. By analyzing network behavior in real-time and identifying anomalies based on learned patterns, AI can detect these threats even before signatures are available. Anomaly detection techniques, combined with behavioral analysis, are crucial in identifying the subtle indicators of compromise often associated with APTs. For example, AI can detect unusual login attempts from unexpected geographical locations or identify unusual data exfiltration patterns.
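
The "login from an unexpected geographical location" example can be sketched with a classic behavioral rule often called impossible travel: if two logins for the same account imply a travel speed no airliner could manage, something is off. This is a hand-written rule rather than a learned model, and the field names (`ts`, `lat`, `lon`) and the 900 km/h speed limit are assumptions chosen for illustration.

```python
from math import radians, sin, cos, asin, sqrt

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))

def is_impossible_travel(prev_login, new_login, max_speed_kmh=900.0):
    """Flag a login pair whose implied travel speed exceeds max_speed_kmh."""
    distance = haversine_km(prev_login["lat"], prev_login["lon"],
                            new_login["lat"], new_login["lon"])
    hours = (new_login["ts"] - prev_login["ts"]) / 3600.0
    if hours <= 0:
        # Same instant in two different places is suspicious by definition
        return distance > 0
    return distance / hours > max_speed_kmh

# Hypothetical logins for one account; ts is seconds since the first login
london = {"ts": 0, "lat": 51.5074, "lon": -0.1278}
sydney_1h_later = {"ts": 3600, "lat": -33.8688, "lon": 151.2093}
paris_3h_later = {"ts": 3 * 3600, "lat": 48.8566, "lon": 2.3522}

print(is_impossible_travel(london, sydney_1h_later))  # True
print(is_impossible_travel(london, paris_3h_later))   # False
```

In practice, geolocation from IP addresses is imprecise and VPNs produce false alarms, which is one reason production systems combine a signal like this with other behavioral evidence instead of acting on it alone.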

Examples of AI-Driven Intrusion Detection Systems

Several companies offer AI-powered intrusion detection systems (IDS) that leverage machine learning to improve threat detection accuracy and speed. These systems often incorporate various AI techniques, including deep learning, natural language processing, and reinforcement learning, to enhance their capabilities. For example, some systems use deep learning models to analyze network traffic and identify malicious patterns, while others use natural language processing to analyze security logs and identify potential threats. The effectiveness of these systems varies depending on the specific algorithms used, the quality of the training data, and the complexity of the threats they are designed to detect. Where evaluations are available, they tend to report better detection rates and fewer false positives than traditional signature-based IDS, although results depend heavily on how well the system is trained and tuned for the environment it protects.

Comparison of Traditional and AI-Based Security Methods

| Feature | Traditional Security Methods | AI-Based Security Methods |
|---|---|---|
| Detection Speed | Relatively slow; relies on known signatures | Much faster; can detect anomalies in real-time |
| Accuracy | High for known threats; poor detection of unknown threats, which have no signature to match | Can detect both known and previously unseen threats; false positive rate depends on training data and tuning |
| Adaptability | Requires frequent updates to signatures | Adapts to new threats through machine learning, given ongoing retraining |
| Scalability | Can be challenging to scale to handle large volumes of data | Generally more scalable due to automated analysis |

AI in Vulnerability Management

Source: cienteinfotech.io

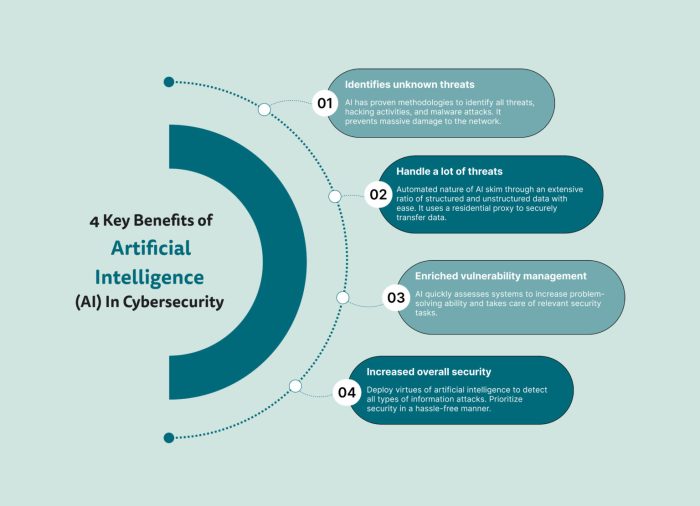

The digital landscape is constantly evolving, with new vulnerabilities emerging daily. Traditional vulnerability management methods struggle to keep pace with this rapid change, leading to increased security risks. Artificial intelligence (AI) offers a powerful solution, automating and enhancing various aspects of vulnerability management, leading to faster identification, prioritization, and remediation of security weaknesses. This allows security teams to be more proactive and efficient in protecting their organizations from cyber threats.

AI significantly accelerates and improves the accuracy of vulnerability scanning and assessment. Manual processes are time-consuming and often miss critical vulnerabilities. AI-powered tools, on the other hand, can analyze vast amounts of data from various sources—including network traffic, system logs, and code repositories—to identify potential weaknesses far more quickly and comprehensively than human analysts. This proactive approach significantly reduces the window of opportunity for attackers to exploit vulnerabilities.

Automated Vulnerability Scanning and Assessment

AI algorithms utilize machine learning techniques to analyze network configurations, software code, and system logs, identifying patterns and anomalies indicative of vulnerabilities. These algorithms can learn from past security incidents and adapt to new threats, continuously improving their accuracy and efficiency. For instance, an AI-powered scanner might detect a known vulnerability in a specific version of software deployed on a server by analyzing its configuration files and comparing them against a database of known vulnerabilities. This automated approach ensures that vulnerabilities are detected promptly, reducing the time it takes to remediate them.
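
The scanner example above, comparing deployed software versions against a database of known vulnerabilities, is at its core a lookup. The sketch below shows only that matching step and involves no machine learning. The package names, version numbers, and advisory IDs are made up for illustration, and the version parser assumes simple dotted numeric versions.

```python
def parse_version(text):
    """Turn '2.4.49' into (2, 4, 49) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))

# Hypothetical advisory entries: package, first fixed version, advisory id
KNOWN_VULNERABILITIES = [
    {"package": "examplehttpd", "fixed_in": "2.4.50", "advisory": "EXAMPLE-2024-0001"},
    {"package": "examplessl", "fixed_in": "3.0.8", "advisory": "EXAMPLE-2024-0002"},
]

def scan_inventory(inventory):
    """Return (host, package, version, advisory) for every vulnerable install."""
    findings = []
    for host, packages in inventory.items():
        for name, version in packages.items():
            for vuln in KNOWN_VULNERABILITIES:
                # Vulnerable if the installed version predates the fix
                if vuln["package"] == name and parse_version(version) < parse_version(vuln["fixed_in"]):
                    findings.append((host, name, version, vuln["advisory"]))
    return findings

# Hypothetical software inventory collected from two servers
inventory = {
    "web-01": {"examplehttpd": "2.4.49", "examplessl": "3.0.8"},
    "web-02": {"examplehttpd": "2.4.51"},
}

findings = scan_inventory(inventory)
print(findings)
```

Where AI adds value is around this step: inferring what software is actually running from noisy configuration data, and learning which findings tend to matter.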

Vulnerability Prioritization Based on Risk and Impact

AI plays a crucial role in prioritizing vulnerabilities based on their potential impact and the likelihood of exploitation. This is done by analyzing factors such as the severity of the vulnerability, the sensitivity of the affected assets, and the presence of active exploits. By prioritizing high-risk vulnerabilities first, security teams can focus their efforts on the most critical threats, maximizing their impact on overall security posture. For example, an AI system might prioritize a vulnerability that allows remote code execution on a database server over a vulnerability that only affects a less critical application. This risk-based approach ensures that resources are allocated effectively.
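
A rough sketch of risk-based prioritization might look like the following. The scoring formula is an assumption for illustration: it multiplies a CVSS-style severity (0 to 10) by an asset criticality rating (1 to 5) and boosts the result when an exploit is known to be circulating. Real tools weigh many more signals, and the weights here are not taken from any standard.

```python
def risk_score(vuln):
    """Combine severity, asset sensitivity, and exploit activity into one score."""
    score = vuln["cvss"] * vuln["asset_criticality"]  # cvss 0-10, criticality 1-5
    if vuln["exploit_available"]:
        score *= 1.5  # illustrative boost for actively exploited flaws
    return score

# Hypothetical findings: same severity can mean very different risk
vulns = [
    {"id": "V-1", "asset": "intranet-wiki", "cvss": 9.8, "asset_criticality": 1, "exploit_available": False},
    {"id": "V-2", "asset": "db-server", "cvss": 9.8, "asset_criticality": 5, "exploit_available": True},
    {"id": "V-3", "asset": "db-server", "cvss": 5.3, "asset_criticality": 5, "exploit_available": False},
]

ranked = sorted(vulns, key=risk_score, reverse=True)
for v in ranked:
    print(v["id"], v["asset"], round(risk_score(v), 1))
```

Note how V-1 and V-2 share the same severity rating but land at opposite ends of the queue, which is exactly the remote-code-execution-on-a-database-server case described above.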

AI Tools for Vulnerability Remediation and Patching

Several AI-powered tools are available to assist in the remediation and patching of vulnerabilities. These tools can automate the process of applying security patches, configure security settings, and even generate code to fix vulnerabilities. For example, some tools can automatically generate scripts to patch known vulnerabilities in specific software versions, while others can analyze code to identify and suggest fixes for potential weaknesses. This automation significantly reduces the time and effort required for remediation, minimizing the window of vulnerability. One example of such a tool is a platform that uses AI to analyze code repositories and automatically suggest code changes to fix vulnerabilities, streamlining the development process and improving security.

AI Integration into a Vulnerability Management Program

A typical workflow integrating AI into a vulnerability management program involves several stages. First, AI-powered scanners continuously monitor systems and applications, identifying potential vulnerabilities. Then, AI algorithms analyze the identified vulnerabilities, prioritizing them based on risk and impact. Next, automated remediation tools are employed to patch or fix the prioritized vulnerabilities. Finally, the entire process is continuously monitored and improved using feedback loops and machine learning, enhancing the system’s ability to identify and respond to emerging threats. This closed-loop system allows for continuous improvement and adaptation to new threats, ensuring robust and proactive vulnerability management.

AI for Security Information and Event Management (SIEM)

Traditional SIEM systems struggle to keep up with the sheer volume and velocity of security data generated by modern organizations. They often rely on predefined rules and signatures, leaving them vulnerable to sophisticated, zero-day attacks that don’t match known patterns. This is where AI steps in, dramatically enhancing the capabilities of SIEM to detect and respond to threats more effectively.

AI augments SIEM systems by automating tasks, improving accuracy, and providing insights that are impossible for human analysts to uncover manually. By leveraging machine learning algorithms, AI can analyze vast quantities of data from diverse sources – network devices, endpoint security tools, cloud platforms, and more – to identify subtle patterns and anomalies indicative of malicious activity. This allows for proactive threat detection, faster incident response, and a significant reduction in the time it takes to contain security breaches.

AI-Enhanced Threat Detection and Response in SIEM

AI algorithms, specifically machine learning models, analyze security logs and events to identify suspicious activities that would be missed by traditional rule-based systems. For instance, AI can detect unusual login attempts from unfamiliar geographic locations, unexpected data exfiltration patterns, or subtle variations in network traffic that signal a compromise. This proactive detection significantly reduces the window of opportunity for attackers. Furthermore, AI can automate incident response by initiating actions like blocking malicious IPs, quarantining infected systems, or escalating alerts to security teams based on predefined thresholds and risk assessments. This automated response capability minimizes the impact of security incidents and speeds up recovery time.

Correlation of Security Events Across Multiple Sources

A key advantage of AI in SIEM is its ability to correlate security events across various sources. Traditional SIEMs often struggle to integrate and analyze data from disparate systems, resulting in fragmented insights. AI, however, can seamlessly integrate and analyze data from multiple sources, identifying relationships and patterns that would otherwise remain hidden. For example, AI might connect a suspicious login attempt from a specific IP address with unusual file access activity on a particular server, revealing a coordinated attack that would be missed by analyzing each event in isolation. This holistic view of security events provides a more comprehensive understanding of the threat landscape and facilitates more effective threat hunting.
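
The login-plus-file-access example can be sketched as a simple join on source IP within a time window. This is a deliberately small stand-in for what a SIEM correlation engine does: the event fields (`ts`, `source`, `src_ip`, `detail`) and the 10-minute window are assumptions, and the IP addresses come from ranges reserved for documentation.

```python
from collections import defaultdict

def correlate_by_ip(events, window_seconds=600):
    """Report IPs that appear in two or more log sources within the window."""
    by_ip = defaultdict(list)
    for event in events:
        by_ip[event["src_ip"]].append(event)

    incidents = []
    for ip, ip_events in by_ip.items():
        ip_events.sort(key=lambda e: e["ts"])
        for i, first in enumerate(ip_events):
            # Later events from a different log source, close in time to `first`
            related = [e for e in ip_events[i + 1:]
                       if e["ts"] - first["ts"] <= window_seconds
                       and e["source"] != first["source"]]
            if related:
                incidents.append({"src_ip": ip, "events": [first] + related})
                break  # one incident per IP keeps the sketch simple
    return incidents

# Hypothetical events from two separate log sources
events = [
    {"ts": 1000, "source": "auth", "src_ip": "203.0.113.7", "detail": "failed-then-successful login"},
    {"ts": 1240, "source": "fileserver", "src_ip": "203.0.113.7", "detail": "bulk read of /finance"},
    {"ts": 1300, "source": "auth", "src_ip": "198.51.100.4", "detail": "successful login"},
    {"ts": 9000, "source": "fileserver", "src_ip": "198.51.100.4", "detail": "single file read"},
]

incidents = correlate_by_ip(events)
print(incidents)
```

Only the first IP is reported: its login and bulk file read happen four minutes apart, while the second IP's two events are hours apart and stay unlinked.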

Examples of AI-Powered SIEM Features

AI-powered SIEM solutions offer a range of advanced features that enhance security posture. Automated incident response, as mentioned earlier, is a critical capability. Threat hunting is another key feature, where AI algorithms proactively search for malicious activities based on identified patterns and indicators of compromise (IOCs). Anomaly detection is crucial; AI can identify deviations from established baselines, flagging unusual behaviors that might indicate a breach. For example, a sudden spike in database queries from an unusual source or an unexpected increase in outbound network traffic could trigger an alert. Finally, AI-driven prioritization of alerts helps security teams focus on the most critical threats first, improving efficiency and reducing alert fatigue.

Key Metrics Improved by AI in SIEM

AI significantly impacts several key metrics within a SIEM system. Here are some examples:

- Mean Time to Detect (MTTD): AI significantly reduces the time it takes to identify a security incident.

- Mean Time to Respond (MTTR): Automated incident response capabilities powered by AI shorten the time required to contain and resolve a security incident.

- False Positive Rate: AI helps reduce the number of false alarms, allowing security teams to focus on genuine threats.

- Security Analyst Productivity: Automation and prioritization of alerts free up security analysts to focus on more complex tasks.

- Overall Security Posture: Improved threat detection and response capabilities lead to a stronger overall security posture.
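
The first two metrics are straightforward to compute once incident timestamps are recorded. The sketch below assumes each incident carries three timestamps (`occurred`, `detected`, `resolved`) and measures response time from detection to resolution. Teams define MTTR in different ways, so treat that choice, along with the sample data, as an assumption.

```python
from datetime import datetime
from statistics import mean

def hours_between(start, end):
    """Elapsed time between two datetimes, in hours."""
    return (end - start).total_seconds() / 3600.0

# Hypothetical incident records
incidents = [
    {"occurred": datetime(2024, 1, 1, 8, 0),
     "detected": datetime(2024, 1, 1, 12, 0),
     "resolved": datetime(2024, 1, 1, 18, 0)},
    {"occurred": datetime(2024, 1, 5, 9, 0),
     "detected": datetime(2024, 1, 5, 11, 0),
     "resolved": datetime(2024, 1, 5, 13, 0)},
]

# MTTD: average gap between an incident starting and being noticed
mttd = mean(hours_between(i["occurred"], i["detected"]) for i in incidents)
# MTTR: average gap between detection and resolution
mttr = mean(hours_between(i["detected"], i["resolved"]) for i in incidents)

print(f"MTTD: {mttd:.1f} h, MTTR: {mttr:.1f} h")
```

Tracking these numbers before and after introducing AI-assisted detection is one practical way to check whether the promised improvements show up in your own environment.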

AI in User and Entity Behavior Analytics (UEBA)

UEBA, or User and Entity Behavior Analytics, is like having a super-powered detective constantly monitoring your company’s digital activities. It uses the power of artificial intelligence to analyze user and system behavior, spotting anomalies that could signal a security breach before it even happens. Think of it as a digital immune system, constantly learning and adapting to identify threats.

UEBA leverages AI to identify insider threats and malicious user activities by creating detailed profiles of normal user behavior. These profiles act as baselines, allowing the system to quickly identify deviations that might indicate something suspicious is afoot. This is crucial because often, the most damaging breaches aren’t caused by external hackers, but by compromised or malicious insiders.

AI Techniques for Establishing Baselines of Normal User Behavior

AI in UEBA establishes baselines using machine learning algorithms, specifically focusing on pattern recognition. These algorithms analyze vast amounts of data, including login times, access patterns, data accessed, and even the types of commands executed. Over time, the AI learns what constitutes “normal” behavior for each user and system. This isn’t just a simple average; the AI accounts for variations due to time of day, day of the week, and even project deadlines, building a sophisticated understanding of typical activity. The more data the system processes, the more accurate and nuanced these baselines become.
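
As a rough illustration of a baseline that is more than a simple average, the sketch below keeps separate history for each user and hour of day, so the same activity level can be normal at 10:00 and alarming at 02:00. The class name `HourlyBaseline`, the minimum of five samples, and the floor on the standard deviation are all assumptions made for this example; production UEBA systems use considerably richer models.

```python
from collections import defaultdict
from statistics import mean, pstdev

class HourlyBaseline:
    """Tracks activity counts per (user, hour-of-day) and scores new observations."""

    def __init__(self):
        self.history = defaultdict(list)

    def observe(self, user, hour, count):
        self.history[(user, hour)].append(count)

    def deviation(self, user, hour, count, min_samples=5):
        samples = self.history.get((user, hour), [])
        if len(samples) < min_samples:
            return None  # not enough history to judge
        mu, sigma = mean(samples), pstdev(samples)
        # Floor sigma at 1.0 so very regular history does not blow up the score
        return (count - mu) / max(sigma, 1.0)

baseline = HourlyBaseline()
for count in [30, 45, 50, 35, 40]:
    baseline.observe("sarah", 10, count)  # files opened around 10:00
for count in [0, 1, 0, 0, 1]:
    baseline.observe("sarah", 2, count)   # files opened around 02:00

day_score = baseline.deviation("sarah", 10, 45)
night_score = baseline.deviation("sarah", 2, 45)
print(round(day_score, 2), round(night_score, 2))
```

Opening 45 files mid-morning barely registers, while the same number at 2 a.m. scores dozens of standard deviations above that hour's norm.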

Examples of AI Detecting Anomalous Behavior

AI-powered UEBA can detect various anomalies that might indicate a potential security breach. For instance, if an employee who typically only accesses sales data suddenly starts downloading sensitive financial information at odd hours, the system would flag this as unusual activity. Similarly, a sudden surge in login attempts from an unfamiliar location, unusual file access patterns (like mass downloading of data), or even changes in an employee’s usual communication patterns (like sending large volumes of data to an external address) would trigger alerts. These seemingly small deviations, invisible to the human eye, can be significant indicators of malicious activity.

Scenario: Preventing a Data Breach with AI-Powered UEBA

Imagine Sarah, a mid-level accountant, has her credentials compromised through a phishing attack. The attacker, unbeknownst to Sarah, gains access to her account. A traditional security system might miss this, as the attacker is using Sarah’s legitimate credentials. However, an AI-powered UEBA system would immediately detect anomalies. The system would notice unusual activity: access to databases Sarah typically doesn’t interact with, downloads of large amounts of financial data at unusual times, and attempts to transfer data to external servers. These deviations from Sarah’s established baseline would trigger alerts, allowing security teams to immediately investigate, block the attacker’s access, and contain the potential data breach before significant damage is done. The system might even automatically quarantine Sarah’s account, preventing further unauthorized access while the situation is investigated.

AI-Driven Security Orchestration, Automation, and Response (SOAR)

Imagine a security team effortlessly juggling hundreds of alerts, instantly prioritizing critical threats, and responding with lightning speed. That’s the power of AI-driven Security Orchestration, Automation, and Response (SOAR). It’s not about replacing human expertise, but supercharging it, allowing security professionals to focus on strategic tasks while AI handles the heavy lifting of repetitive, time-consuming processes.

AI streamlines security operations by automating repetitive tasks, freeing up human analysts to focus on more complex threats. This automation extends across various security functions, from threat detection and incident response to vulnerability management and patching. By automating these routine tasks, SOAR solutions significantly reduce the workload on security teams, improving efficiency and reducing the risk of human error. This allows for quicker response times and a more proactive approach to security management.

AI Improves Incident Response Times Through Automated Workflows

AI-powered SOAR platforms automate incident response through pre-defined workflows, drastically reducing the time it takes to contain and resolve security incidents. These workflows are triggered automatically upon detection of a threat, initiating a series of actions such as isolating infected systems, blocking malicious IP addresses, and launching forensic investigations. The speed and precision of these automated responses are crucial in minimizing the impact of breaches. For example, a ransomware attack can be contained much faster, limiting the spread of malware and the amount of data compromised. This speed is often the difference between a manageable incident and a catastrophic breach.
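
A pre-defined workflow of this kind can be pictured as an ordered list of steps keyed by alert type. The sketch below is a toy version: the step functions only record what they would do, standing in for real firewall, EDR, and ticketing integrations, and every name in it (`PLAYBOOKS`, `run_playbook`, the alert fields) is hypothetical instead of taken from any SOAR product.

```python
def isolate_host(alert, log):
    log.append(f"isolate {alert['host']}")

def block_ip(alert, log):
    log.append(f"block {alert['src_ip']}")

def open_ticket(alert, log):
    log.append(f"ticket for {alert['type']} on {alert['host']}")

# Playbooks map an alert type to an ordered list of response steps
PLAYBOOKS = {
    "ransomware": [isolate_host, block_ip, open_ticket],
    "phishing": [open_ticket],
}

def run_playbook(alert):
    """Run each step for the alert type; unknown types are escalated to a human."""
    log = []
    steps = PLAYBOOKS.get(alert["type"])
    if steps is None:
        log.append(f"escalate {alert['type']} to analyst")
        return log
    for step in steps:
        step(alert, log)
    return log

actions = run_playbook({"type": "ransomware", "host": "fin-ws-12", "src_ip": "203.0.113.7"})
print(actions)
```

Falling back to a human for unrecognized alert types reflects the point made earlier: automation handles the routine cases so analysts can spend their time on the unusual ones.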

Examples of AI-Powered SOAR Platforms and Their Capabilities

Several vendors offer AI-powered SOAR platforms, each with its unique strengths. For instance, IBM Resilient (since rebranded as IBM Security QRadar SOAR) offers a robust platform with advanced automation capabilities, including AI-driven threat intelligence integration. Palo Alto Networks Cortex XSOAR provides a comprehensive solution that integrates with a vast ecosystem of security tools. These platforms often include features like automated playbook creation, AI-driven threat prioritization, and real-time threat intelligence feeds, enhancing their overall effectiveness. The specific capabilities vary between platforms, but the core function of automating security responses and improving incident response times remains consistent.

Illustrative Diagram of AI Integration in a SOAR System

Imagine a diagram showing various security tools (SIEM, endpoint detection and response (EDR), firewall, etc.) connected to a central SOAR platform. Arrows illustrate the flow of data and automated actions. The AI engine sits at the heart of the SOAR platform, analyzing data from these various sources, identifying threats, prioritizing incidents based on severity and risk, and triggering automated responses according to pre-defined playbooks. The system visualizes the seamless integration and automation, showcasing how AI orchestrates the entire security response process, making it faster and more efficient. The diagram would highlight the AI’s role in intelligent threat hunting, automated investigation, and coordinated remediation across multiple security layers. This visual representation would effectively communicate the synergistic relationship between AI and traditional security tools within the SOAR framework.

AI and Cybersecurity Workforce Augmentation

The cybersecurity landscape is constantly evolving, with threats becoming more sophisticated and frequent. This puts immense pressure on security teams, who often struggle to keep up with the sheer volume of alerts and incidents. AI is emerging as a crucial tool to alleviate this burden, empowering human analysts and enabling them to focus on higher-level strategic initiatives. By automating repetitive tasks and providing advanced analytical capabilities, AI significantly enhances the effectiveness and efficiency of cybersecurity teams.

AI assists security analysts by automating the tedious process of analyzing massive datasets, identifying patterns indicative of malicious activity, and prioritizing alerts based on severity and risk. This frees up analysts to concentrate on investigating complex threats that require human judgment and expertise. Instead of sifting through countless logs manually, AI can pinpoint suspicious behaviors and potential breaches, dramatically reducing response times and minimizing damage. Furthermore, AI-powered systems can provide context-rich information, helping analysts understand the full scope of an incident and formulate effective remediation strategies.

AI-Driven Threat Investigation and Response

AI significantly accelerates incident response by automating threat detection and providing analysts with immediate, actionable insights. For example, an AI-powered security information and event management (SIEM) system can automatically detect a surge in login attempts from an unusual geographic location, flagging it as a potential brute-force attack. This allows analysts to quickly investigate the incident, block malicious IP addresses, and prevent further compromise. Moreover, AI can correlate data from multiple sources to identify intricate attack patterns that might otherwise go unnoticed, enabling proactive threat hunting and preventative measures. Sophisticated AI systems can even predict future attacks based on historical data and emerging threat intelligence, enabling proactive security posture adjustments.

AI-Facilitated Workload Reduction for Security Teams

The sheer volume of security alerts generated by traditional systems often overwhelms security teams. AI addresses this by prioritizing alerts based on severity and likelihood of a genuine threat, filtering out the noise and focusing analysts’ attention on the most critical issues. This allows security teams to focus on strategic initiatives such as developing and implementing robust security policies, conducting security awareness training, and improving overall security posture. By automating routine tasks such as vulnerability scanning and patching, AI frees up valuable time and resources, allowing security teams to concentrate on more complex and strategic aspects of cybersecurity.

Examples of AI Tools Providing Actionable Insights

Several AI-powered security tools provide analysts with actionable insights and recommendations. For example, threat intelligence platforms leverage AI to analyze vast amounts of threat data from various sources, identifying emerging threats and vulnerabilities. These platforms can provide real-time alerts, customized threat intelligence reports, and proactive recommendations for mitigating risks. Similarly, vulnerability management platforms use AI to prioritize vulnerabilities based on their severity and exploitability, enabling security teams to focus on the most critical issues. AI-powered security orchestration, automation, and response (SOAR) platforms streamline incident response by automating repetitive tasks and coordinating security tools, significantly reducing the time and resources required to contain and remediate security incidents.

Visual Representation of AI and Human Analyst Collaboration in Threat Analysis

Imagine a visual representation showing a network map with nodes representing various systems and data flows. Highlighted nodes indicate suspicious activity detected by AI. These highlights are color-coded based on severity (red for critical, yellow for warning, green for low risk). A separate panel displays a timeline of events, with AI-generated annotations highlighting key actions and potential threats. Human analysts interact with this visualization, drilling down into specific events, reviewing AI-generated reports, and validating findings. A chat window allows analysts to query the AI for further information or to provide feedback, refining the AI’s analysis and improving its accuracy over time. The image depicts a dynamic, collaborative process where AI provides the initial analysis and prioritization, while human analysts leverage their expertise to interpret the data, make critical decisions, and refine the AI’s learning process. The overall effect is a synergistic relationship where AI augments human capabilities, leading to a more effective and efficient cybersecurity response.

Ethical Considerations and Challenges of AI in Cybersecurity

The integration of artificial intelligence (AI) into cybersecurity offers immense potential, but it also introduces a new layer of ethical complexities and challenges. As AI systems become increasingly sophisticated and integral to our digital defenses, understanding and addressing these ethical concerns is paramount to ensuring responsible and effective deployment. Ignoring these issues could lead to unintended consequences, undermining the very security AI is meant to enhance.

AI-driven security systems, while powerful, are not immune to the biases present in the data they are trained on. This can lead to discriminatory outcomes, where certain groups or individuals are unfairly targeted or overlooked. For example, an AI system trained primarily on data from one geographic region might be less effective at detecting threats originating from other regions, potentially leaving those areas more vulnerable.

Potential Biases in AI-Driven Security Systems and Their Implications

Bias in AI security systems stems from the data used to train them. If the training data reflects existing societal biases, the AI will likely perpetuate and even amplify these biases. This can manifest in various ways, such as unfairly flagging users based on their location, ethnicity, or even online behavior patterns that are correlated with protected characteristics. The implications are significant, potentially leading to false positives, missed threats, and the erosion of trust in security systems. Consider a scenario where an AI system, trained on data skewed towards a specific type of malware, consistently misidentifies benign software from a particular developer as malicious, leading to unnecessary disruptions and damage to reputation. Addressing this requires careful curation of training data and ongoing monitoring for bias in AI outputs.
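
One simple form of "ongoing monitoring for bias" is to compare false positive rates across groups of users or traffic. The sketch below assumes you have labeled outcomes for past alerts (whether each flagged item turned out to be malicious) and a grouping attribute such as region. The record layout and the sample numbers are invented for illustration.

```python
from collections import defaultdict

def false_positive_rate_by_group(records):
    """records: dicts with 'group', 'flagged' (bool), and 'malicious' (bool)."""
    benign = defaultdict(int)
    false_pos = defaultdict(int)
    for r in records:
        if not r["malicious"]:
            benign[r["group"]] += 1
            if r["flagged"]:
                false_pos[r["group"]] += 1
    # Share of benign activity that was wrongly flagged, per group
    return {g: false_pos[g] / benign[g] for g in benign}

# Hypothetical labeled outcomes: region-b is flagged far more often when benign
records = (
    [{"group": "region-a", "flagged": False, "malicious": False}] * 98
    + [{"group": "region-a", "flagged": True, "malicious": False}] * 2
    + [{"group": "region-b", "flagged": False, "malicious": False}] * 15
    + [{"group": "region-b", "flagged": True, "malicious": False}] * 5
    + [{"group": "region-b", "flagged": True, "malicious": True}]
)

rates = false_positive_rate_by_group(records)
print(rates)
```

A gap like the one between these two regions does not prove the model is unfair, but it is a signal that the training data or features deserve a closer look.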

Challenges of Ensuring Explainability and Transparency of AI Algorithms in Security Applications

Many AI algorithms, particularly deep learning models, operate as “black boxes,” making it difficult to understand how they arrive at their conclusions. This lack of transparency poses significant challenges in cybersecurity, where accountability and trust are crucial. If an AI system flags a suspicious activity, but its reasoning is opaque, it’s difficult to verify its accuracy or take appropriate action. This “explainability gap” can hinder investigations, create legal hurdles, and ultimately undermine confidence in the AI’s effectiveness. The development of explainable AI (XAI) techniques is crucial to address this, enabling security professionals to understand and validate the decisions made by AI systems.
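
To show what an explainable decision can look like, the sketch below uses a linear scoring model, where each feature's contribution to the final score is simply its weight times its value. This is the easy case: deep learning models need dedicated attribution techniques to produce something comparable. The feature names, weights, and bias term are hypothetical, written as if a model had learned them.

```python
# Hypothetical weights, as if learned by a linear model
WEIGHTS = {
    "failed_logins": 0.8,
    "new_country": 2.5,
    "mb_uploaded": 0.01,
    "off_hours": 1.2,
}
BIAS = -4.0

def score_with_explanation(features):
    """Return the risk score and the features ranked by how much they contributed."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

score, reasons = score_with_explanation(
    {"failed_logins": 3, "new_country": 1, "mb_uploaded": 500, "off_hours": 1}
)
print(round(score, 2))
for name, contribution in reasons:
    print(f"{name}: {contribution:+.2f}")
```

An analyst reading this output can see that the large upload drove the alert more than the failed logins did, which is the kind of reasoning trail the "black box" problem takes away.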

Risks Associated with the Misuse of AI in Cyberattacks

The same AI technologies used for defense can be weaponized by malicious actors. AI can automate the creation of sophisticated phishing emails, enhance the speed and effectiveness of malware attacks, and even enable the development of autonomous attack systems. This arms race presents a significant challenge, requiring continuous innovation in defensive AI technologies to stay ahead of these evolving threats. For instance, AI can be used to generate highly realistic deepfakes, making social engineering attacks more convincing and difficult to detect. This highlights the need for proactive measures to mitigate the risks associated with AI-powered cyberattacks, including international cooperation and the development of robust countermeasures.

Comparison of Benefits and Drawbacks of Using AI in Cybersecurity

AI offers significant benefits in cybersecurity, including improved threat detection, faster response times, and automated remediation. However, it also presents drawbacks, such as the potential for bias, the lack of transparency in some algorithms, and the risk of misuse by malicious actors. The effective use of AI in cybersecurity requires a careful balancing of these benefits and drawbacks, prioritizing ethical considerations and robust security practices. The development and implementation of ethical guidelines and regulatory frameworks are crucial to ensure responsible innovation and mitigate potential harms.

Conclusion

In a world where cyber threats are becoming increasingly complex and sophisticated, the role of AI in preventing cybersecurity breaches is undeniable. AI is no longer a futuristic concept but a critical component of a robust security strategy. By leveraging AI’s ability to analyze vast datasets, identify patterns, and automate responses, organizations can significantly enhance their defenses and reduce their vulnerability to attacks. While challenges remain, particularly around ethical considerations and the potential for bias, the benefits of AI in bolstering cybersecurity are clear and compelling. The future of cybersecurity is intelligent, proactive, and undeniably powered by AI.