AI-driven fraud detection in financial services is no longer a futuristic fantasy; it’s the present reality reshaping how we fight financial crime. From algorithms that sniff out anomalies to real-time systems that stop fraudsters in their tracks, AI is changing how institutions protect themselves and their customers. This deep dive explores the techniques, data challenges, and ethical considerations shaping this critical field.

We’ll unpack how machine learning, deep learning, and natural language processing are deployed to identify and prevent fraudulent activities. We’ll also delve into the crucial role of data – its collection, preprocessing, and the inherent privacy concerns. Finally, we’ll explore the future of AI in fraud detection, including emerging trends and the ethical dilemmas it presents.

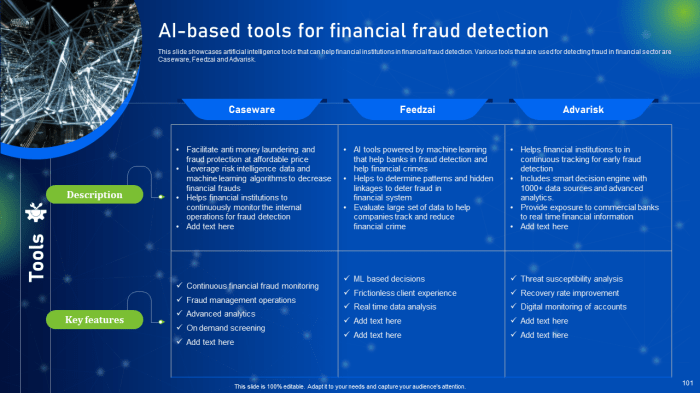

AI Techniques in Fraud Detection

Source: slideteam.net

AI plays a major role in spotting suspicious financial transactions, flagging them in real time. The same ability to analyze massive datasets and identify patterns is also driving advances in other fields, such as personalized healthcare.

The financial services industry is constantly battling sophisticated fraud schemes. The sheer volume of transactions and the evolving nature of fraudulent activities make traditional methods increasingly ineffective. Artificial intelligence (AI) offers a powerful arsenal of tools to detect and prevent fraud, significantly improving accuracy and efficiency. This section delves into the specific AI techniques employed, highlighting their strengths and weaknesses.

AI Algorithms Used in Fraud Detection

Several AI algorithms are pivotal in enhancing fraud detection capabilities. These algorithms leverage different approaches to identify patterns and anomalies indicative of fraudulent behavior. The choice of algorithm often depends on the specific type of fraud being addressed and the nature of the available data.

| Algorithm | Strengths | Weaknesses | Typical Applications |
|---|---|---|---|
| Machine Learning (ML) – e.g., Logistic Regression, Random Forest, Support Vector Machines | Relatively simple to implement and interpret; can handle large datasets; good for identifying clear patterns. | Can struggle with complex, non-linear relationships; requires labeled data for supervised learning; performance can degrade with high-dimensional data. | Credit card fraud detection, identifying potentially fraudulent loan applications. |
| Deep Learning (DL) – e.g., Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs) | Excellent at identifying complex, non-linear patterns; can automatically learn features from raw data; high accuracy potential. | Requires substantial computational resources; difficult to interpret; needs large amounts of data for training; prone to overfitting if not carefully managed. | Detecting sophisticated money laundering schemes, analyzing unstructured data like transaction narratives. |
| Natural Language Processing (NLP) | Enables analysis of textual data like transaction descriptions, emails, and chat logs; can uncover hidden patterns and suspicious communication. | Requires significant data preprocessing; can be sensitive to language nuances and slang; accuracy depends heavily on the quality of the training data. | Identifying fraudulent claims based on textual descriptions; detecting phishing attempts through email analysis. |

Unsupervised Learning in Fraud Detection

Unsupervised learning excels at identifying anomalies and outliers within transaction data, even without pre-labeled examples of fraudulent activity. This is crucial because fraudsters constantly develop new methods, making it difficult to create comprehensive labeled datasets.

Unsupervised learning techniques commonly used include clustering algorithms (like k-means) and anomaly detection methods (like Isolation Forest). For example, k-means can group similar transactions together, so that transactions lying far from every established cluster stand out as potential fraud. Isolation Forest, on the other hand, isolates points using randomly built trees; transactions that can be isolated in only a few splits are more likely to be anomalous and therefore worth checking for fraud. These techniques help flag suspicious transactions for further human review.
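As a rough illustration of the clustering idea, the sketch below fits a tiny one-dimensional k-means on historical transaction amounts (assumed here to be mostly legitimate) and then flags new amounts that sit far from every learned centroid. The function names, the sample amounts, the starting centroids, and the distance cut-off are all assumptions made for illustration; a real system would use a tested library and many more features than the amount alone.

```python
from statistics import mean

def kmeans_1d(values, centroids, iterations=20):
    """Plain Lloyd's algorithm on 1-D data, starting from the given centroids."""
    centroids = list(centroids)
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Keep the old centroid if a cluster ends up empty.
        new_centroids = [mean(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids

def flag_far_from_clusters(values, centroids, max_distance):
    """Return the values whose distance to the nearest centroid exceeds max_distance."""
    return [v for v in values if min(abs(v - c) for c in centroids) > max_distance]

# Hypothetical history: small everyday purchases and regular ~200 payments.
history = [12.0, 15.5, 11.0, 14.0, 13.0, 210.0, 198.0, 205.0, 202.0]
centroids = kmeans_1d(history, centroids=[10.0, 200.0])

# Score new transactions against the clusters learned from history.
new_amounts = [14.5, 199.0, 4900.0]
suspicious = flag_far_from_clusters(new_amounts, centroids, max_distance=50.0)
```

Fitting on history and scoring new transactions separately is a deliberate choice in this sketch: if an extreme value is included while fitting, k-means may simply give it a cluster of its own, and it would then no longer look far from any centroid.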

Supervised Learning in Fraud Detection

Supervised learning algorithms require labeled datasets where each transaction is classified as either fraudulent or legitimate. This allows the algorithm to learn the characteristics that distinguish fraudulent transactions from legitimate ones. Commonly used supervised learning methods include logistic regression, support vector machines, and decision trees.

The quality of the training data is paramount. A well-balanced dataset, with a roughly equal representation of fraudulent and legitimate transactions, is ideal. However, this is often challenging to achieve in fraud detection, as fraudulent transactions are typically far less frequent than legitimate ones. This class imbalance can lead to biased models that perform poorly on the minority class (fraudulent transactions). Techniques like oversampling the minority class, undersampling the majority class, or using cost-sensitive learning can mitigate this problem. For instance, assigning a higher penalty to misclassifying a fraudulent transaction can help the model prioritize its detection.
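One way to picture cost-sensitive learning is to weight each class inversely to its frequency and scale the loss accordingly. The sketch below uses the common "balanced" heuristic, weight = n_samples / (n_classes * class_count), together with a weighted log loss. The function names and the 2% fraud rate are assumptions chosen for illustration, not figures from any real dataset.

```python
import math
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * count) for cls, count in counts.items()}

def weighted_log_loss(labels, probs, weights):
    """Mean log loss where each example is scaled by the weight of its true class."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, 1e-12), 1 - 1e-12)  # clamp to avoid log(0)
        loss = -(math.log(p) if y == 1 else math.log(1 - p))
        total += weights[y] * loss
    return total / len(labels)

# Hypothetical data: 1 = fraud (2% of examples), 0 = legitimate.
labels = [0] * 98 + [1] * 2
weights = balanced_class_weights(labels)

# A lazy model that predicts "almost certainly legitimate" for everything.
lazy_probs = [0.01] * len(labels)
plain = weighted_log_loss(labels, lazy_probs, {0: 1.0, 1: 1.0})
weighted = weighted_log_loss(labels, lazy_probs, weights)
```

With these weights the two missed fraud cases dominate the loss, so a model trained against the weighted objective has a much stronger incentive to detect the minority class than one trained on the plain loss.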

Data Sources and Preprocessing

Building a robust AI-powered fraud detection system hinges on the quality and diversity of the data it uses. Think of it like baking a cake – you need the right ingredients in the right proportions to get a delicious result. Similarly, the accuracy and effectiveness of fraud detection depend heavily on the data fed into the AI model, and how that data is prepared. This section delves into the various data sources and the crucial preprocessing steps involved.

AI-powered fraud detection systems rely on a rich tapestry of data to identify suspicious activities. These data sources provide a comprehensive view of customer behavior and transactions, allowing the AI to learn patterns and anomalies indicative of fraud.

Types of Data Used in AI-Powered Fraud Detection

The effectiveness of AI in fraud detection depends heavily on the richness and variety of the data used. A diverse dataset provides a more nuanced understanding of fraudulent activities, enabling more accurate predictions. Here’s a breakdown of the key data types:

- Transactional Data: This forms the backbone of fraud detection. It includes details about every transaction, such as the amount, date, time, location, merchant, and payment method. Analyzing patterns in these transactions – for example, unusually large transactions or multiple transactions from the same account in a short period – can highlight suspicious activity. Imagine a customer who usually makes small purchases suddenly making a large international transfer – that’s a red flag.

- Customer Data: This encompasses information about the customer themselves, including their demographics (age, location, occupation), account history (length of time as a customer, previous transactions), and contact information. This data helps establish a baseline of normal behavior for each customer, making it easier to spot deviations that might indicate fraud.

- Behavioral Data: This is arguably the most dynamic and insightful data type. It captures how customers interact with the financial services platform, including login attempts, device information, IP addresses, and browsing history. Unusual login patterns, such as multiple failed login attempts from different locations, can be strong indicators of unauthorized access. Consider a customer suddenly accessing their account from a new device in a different country – that warrants closer inspection.

Data Preprocessing for Fraud Detection

Raw data, as collected, is rarely suitable for direct use in AI models. It often contains inconsistencies, missing values, and irrelevant information that can hinder the model’s performance. Therefore, a rigorous preprocessing pipeline is essential to ensure data quality and model accuracy. This involves several key steps:

- Data Cleaning: This initial step focuses on identifying and handling missing values, outliers, and inconsistencies in the data. Missing values might be imputed using techniques like mean/median imputation or more sophisticated methods like k-Nearest Neighbors. Outliers, which are data points significantly different from the rest, can be removed or transformed, though in fraud detection they deserve care because an unusual value may be exactly the signal the model needs. Inconsistent data, such as variations in date formats, needs to be standardized.

- Data Transformation: This involves converting data into a format suitable for the AI model. This might include scaling numerical features (e.g., using standardization or normalization), encoding categorical features (e.g., using one-hot encoding or label encoding), and handling skewed data (e.g., using logarithmic transformations). For instance, transaction amounts often follow a right-skewed distribution, and a logarithmic transformation can reduce that skew.

- Feature Engineering: This is arguably the most creative and impactful step. It involves creating new features from existing ones that might be more informative for the AI model. For example, new features could be created by combining transaction amounts and frequencies, calculating the time elapsed between transactions, or creating interaction terms between different features. This step often requires domain expertise and iterative experimentation.
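The transformation and feature engineering steps above can be sketched for a single account as follows. The feature names (`log_amount`, `seconds_since_prev`, `count_last_hour`), the one-hour window, and the sample transactions are assumptions made for illustration; which features actually help depends on the data and on domain expertise.

```python
import math
from datetime import datetime

def engineer_features(transactions):
    """transactions: list of (timestamp, amount) tuples for ONE account, sorted by time."""
    features = []
    for i, (ts, amount) in enumerate(transactions):
        # Time elapsed since the previous transaction (None for the first one).
        if i > 0:
            seconds_since_prev = (ts - transactions[i - 1][0]).total_seconds()
        else:
            seconds_since_prev = None
        # Velocity feature: earlier transactions within the preceding hour.
        count_last_hour = sum(
            1 for earlier_ts, _ in transactions[:i]
            if (ts - earlier_ts).total_seconds() <= 3600
        )
        features.append({
            "log_amount": math.log1p(amount),  # log(1 + amount) tames the skew
            "seconds_since_prev": seconds_since_prev,
            "count_last_hour": count_last_hour,
        })
    return features

# Hypothetical transaction history for one account.
txns = [
    (datetime(2024, 1, 1, 9, 0), 20.0),
    (datetime(2024, 1, 1, 9, 5), 25.0),
    (datetime(2024, 1, 1, 9, 6), 3000.0),
    (datetime(2024, 1, 1, 15, 0), 18.0),
]
rows = engineer_features(txns)
```

In this toy history the third transaction stands out on all three features at once: a large amount, one minute after the previous transaction, and the third within an hour.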

Challenges in Data Privacy and Security

Leveraging AI for fraud detection involves handling sensitive customer data, raising significant privacy and security concerns. Striking a balance between leveraging data’s power and protecting customer privacy is crucial. This requires careful consideration and implementation of robust security measures.

- Data anonymization and pseudonymization: Removing or replacing direct identifiers reduces the risk of identifying individuals while still allowing for effective fraud detection. Related privacy-preserving techniques include differential privacy, which adds carefully calibrated noise to data or query results so that individual records cannot be precisely reconstructed, and federated learning, which trains models on decentralized data without directly sharing the data itself.

- Access control and encryption: Restricting access to sensitive data to authorized personnel only and encrypting data both at rest and in transit are fundamental security measures. Strong encryption algorithms and secure key management practices are crucial.

- Compliance with data privacy regulations: Adherence to regulations like GDPR, CCPA, and other relevant laws is paramount. This includes establishing a lawful basis for processing (consent is one such basis under GDPR, not the only one), providing transparency about data usage, and ensuring data security.
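Two of the ideas in this list can be sketched with the standard library alone: pseudonymizing an identifier with a keyed hash, and releasing a count with Laplace noise in the spirit of differential privacy. The function names, the key, and the epsilon value are assumptions for illustration. This is a minimal sketch, not a vetted privacy implementation; production systems should rely on audited libraries and proper key management.

```python
import hashlib
import hmac
import random

def pseudonymize(account_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256, hex encoded)."""
    return hmac.new(secret_key, account_id.encode("utf-8"), hashlib.sha256).hexdigest()

def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query, which has sensitivity 1."""
    scale = 1.0 / epsilon
    # The difference of two i.i.d. exponentials with mean `scale`
    # follows a Laplace distribution with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise

key = b"hypothetical-secret-key"   # assumption: would come from a key vault
token = pseudonymize("ACC-000123", key)

rng = random.Random(42)            # seeded only to make the sketch repeatable
released = noisy_count(true_count=130, epsilon=0.5, rng=rng)
```

The same account always maps to the same token under a given key, so transactions can still be linked for fraud analysis without exposing the raw identifier. A smaller epsilon means more noise and stronger privacy at the cost of accuracy.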

Real-Time Fraud Detection and Prevention

Real-time fraud detection is no longer a futuristic concept; it’s a necessity in today’s fast-paced digital financial landscape. AI’s ability to process large volumes of data with very low latency makes it well suited to identifying and preventing fraudulent activities as they happen, minimizing financial losses and protecting customers. This section delves into how AI systems are integrated into real-time transaction processing, the various approaches to fraud scoring, and the crucial role of explainable AI in building trust and transparency.

AI systems can be seamlessly integrated into real-time transaction processing systems through a sophisticated architecture. This integration allows for immediate analysis of transaction data, flagging suspicious activities, and even automatically blocking fraudulent transactions.

System Architecture for Real-Time Fraud Detection

Imagine a system where every transaction triggers a cascade of AI-powered checks. The architecture can be viewed as five interconnected modules: data ingestion, data preprocessing, the AI model, a decision engine, and a notification system.

First, the transaction data (amount, location, time, customer history, and device information) is fed into a real-time data ingestion pipeline, which preprocesses it into a form the model can consume. The data then flows into a deployed AI model, often a neural network or an ensemble of models, trained to detect fraudulent patterns. The model generates a fraud score, essentially an estimated probability that the transaction is fraudulent. A decision engine applies predefined thresholds and business rules to this score to decide whether to approve, flag, or block the transaction: a high fraud score might trigger an immediate block, while a lower score might result in a flag for manual review. Finally, the notification system alerts relevant personnel about flagged transactions so they can review and investigate.

The entire process typically has to complete within milliseconds so that legitimate transactions are not noticeably delayed.
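The flow from preprocessing through scoring, decision, and notification can be sketched in a few lines. Everything named here is hypothetical: the threshold values, the `preprocess` fields, and the `toy_score` function, which merely stands in for a trained model. The point is only to show how a score is mapped to approve, flag, or block.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Thresholds:
    flag: float = 0.5    # hypothetical: scores at or above this go to manual review
    block: float = 0.9   # hypothetical: scores at or above this are blocked

def preprocess(txn: dict) -> dict:
    """Placeholder preprocessing: keep and coerce the fields the scorer expects."""
    return {"amount": float(txn["amount"]), "new_device": bool(txn["new_device"])}

def decide(fraud_score: float, thresholds: Thresholds = Thresholds()) -> str:
    """Map a fraud score in [0, 1] to 'approve', 'flag', or 'block'."""
    if not 0.0 <= fraud_score <= 1.0:
        raise ValueError("fraud_score must be a probability in [0, 1]")
    if fraud_score >= thresholds.block:
        return "block"
    if fraud_score >= thresholds.flag:
        return "flag"
    return "approve"

def process_transaction(txn, score_fn, notify_fn, thresholds=Thresholds()):
    """Run one transaction through preprocess -> score -> decide -> notify."""
    score = score_fn(preprocess(txn))
    decision = decide(score, thresholds)
    if decision != "approve":
        notify_fn(txn, score, decision)  # alert analysts about flagged/blocked items
    return decision

def toy_score(features):
    """Stand-in for a trained model; NOT a real fraud model."""
    if features["new_device"] and features["amount"] > 5000:
        return 0.95
    return 0.6 if features["new_device"] else 0.05

alerts = []
def record_alert(txn, score, decision):
    alerts.append((txn["id"], score, decision))

result = process_transaction({"id": "t1", "amount": 9000, "new_device": True},
                             toy_score, record_alert)
```

Keeping the thresholds in a separate, immutable object is a design choice in this sketch: it lets risk teams tune the flag and block levels without touching the scoring model.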

Real-Time Fraud Scoring and Decision-Making Approaches

Several approaches exist for real-time fraud scoring and decision-making. Rule-based systems, though simple, are often inflexible and struggle to adapt to evolving fraud tactics. Machine learning models, on the other hand, can dynamically adapt and learn from new data, offering greater accuracy and adaptability. Hybrid approaches, combining rule-based systems with machine learning, leverage the strengths of both, providing a robust and flexible solution. For instance, a hybrid system might use rule-based systems for quick identification of clear-cut fraudulent activities and machine learning models for more nuanced, complex scenarios. The choice of approach depends on factors such as the complexity of the fraud landscape, the volume of transactions, and the desired level of automation. Consider a scenario where a sudden surge in transactions from a specific geographic location is detected. A rule-based system might immediately flag these transactions, while a machine learning model might analyze additional factors such as transaction amounts and customer behavior to refine the assessment.
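A hybrid approach of this kind might look roughly like the sketch below: hard rules handle the clear-cut cases first, and a model score decides the rest. The rule limits, the review threshold, the transaction fields, and `toy_model` are all hypothetical placeholders rather than recommended values.

```python
HARD_AMOUNT_LIMIT = 10_000.0   # hypothetical business rule
MAX_TXNS_PER_MINUTE = 5        # hypothetical business rule

def rule_check(txn):
    """Return a reason string if a hard rule fires, otherwise None."""
    if txn["amount"] > HARD_AMOUNT_LIMIT:
        return "amount above hard limit"
    if txn["txns_last_minute"] > MAX_TXNS_PER_MINUTE:
        return "too many transactions in one minute"
    return None

def hybrid_decision(txn, model_score_fn, review_threshold=0.7):
    """Apply rules first; fall back to the model score for nuanced cases."""
    reason = rule_check(txn)
    if reason is not None:
        return {"decision": "block", "source": "rule", "reason": reason}
    score = model_score_fn(txn)
    decision = "review" if score >= review_threshold else "approve"
    return {"decision": decision, "source": "model", "reason": f"model score {score:.2f}"}

def toy_model(txn):
    """Stand-in for a trained model; NOT a real fraud model."""
    return 0.9 if txn["new_device"] and txn["amount"] > 500 else 0.1

outcome = hybrid_decision(
    {"amount": 800.0, "txns_last_minute": 1, "new_device": True}, toy_model
)
```

Recording which layer produced the decision (`source`) keeps the outcome easy to audit, since rule hits and model hits usually call for different follow-up.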

Explainable AI (XAI) in Real-Time Fraud Detection

Transparency and trust are paramount in financial services. Explainable AI (XAI) addresses this by providing insights into how AI models arrive at their decisions. In real-time fraud detection, XAI can explain why a particular transaction was flagged as fraudulent, providing details such as the specific features that triggered the alert and their relative importance. For example, an XAI system might explain that a transaction was flagged due to an unusual transaction amount combined with a recent change in the customer’s IP address and device. This level of transparency helps build trust with customers and regulators, makes false positives easier to diagnose and correct, and facilitates effective investigation by human analysts. It also helps in model debugging and improvement, allowing developers to identify and address biases or inaccuracies in the model’s predictions. Imagine a scenario where a legitimate transaction is wrongly flagged. XAI can provide a detailed explanation, helping to refine the model and prevent similar incidents in the future.
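For a linear model such as logistic regression, one simple form of explanation is to split the score into per-feature contributions (weight times value) and rank them. The sketch below does exactly that. The weights, bias, and feature names are invented for illustration, and more complex models generally need dedicated attribution methods instead.

```python
import math

def explain_logistic(weights, bias, features):
    """Split a logistic-regression logit into per-feature contributions."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    logit = bias + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    # Largest absolute contribution first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked

# Hypothetical model parameters and one flagged transaction.
weights = {"amount_zscore": 1.2, "new_device": 2.0, "ip_changed": 1.5,
           "account_age_years": -0.3}
bias = -4.0
txn = {"amount_zscore": 3.0, "new_device": 1.0, "ip_changed": 1.0,
       "account_age_years": 2.0}

probability, ranked = explain_logistic(weights, bias, txn)
for name, contribution in ranked:
    print(f"{name:>18}: {contribution:+.2f}")
```

Here the unusual amount contributes most to the score, followed by the new device and the changed IP address, while the account's age pulls the score slightly down. An analyst reviewing the alert sees the reasons in order of influence.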

AI and Regulatory Compliance

The rise of AI in financial fraud detection presents a fascinating paradox: while it offers unparalleled power to combat illicit activities, it also introduces a complex web of regulatory hurdles. Navigating this landscape requires a keen understanding of the evolving legal framework and a proactive approach to ensuring compliance. Financial institutions must not only leverage AI’s potential but also demonstrate their commitment to responsible and ethical AI deployment.

AI’s integration into fraud detection systems necessitates careful consideration of existing regulations and emerging guidelines. This ensures not only legal compliance but also fosters trust and maintains the integrity of the financial system. Failure to comply can result in significant penalties and reputational damage, highlighting the importance of a proactive and comprehensive approach.

Regulatory Challenges and Compliance Requirements

The use of AI in financial services for fraud detection is subject to a variety of regulations, depending on the jurisdiction and specific application. These regulations often overlap and can be complex to navigate. Understanding these requirements is crucial for successful and compliant AI implementation.

- Data Privacy Regulations: AI systems often rely on vast amounts of personal data, necessitating strict adherence to regulations like GDPR (in Europe) and CCPA (in California). This includes establishing a lawful basis for processing (consent is one such basis under GDPR, not the only one), ensuring data security, and providing individuals with control over their data.

- Fair Lending Laws: AI algorithms must not discriminate against protected groups. Regulations like the Equal Credit Opportunity Act (ECOA) in the US mandate fair and unbiased lending practices. AI models need to be rigorously tested for bias to ensure compliance.

- Anti-Money Laundering (AML) and Know Your Customer (KYC) Regulations: Financial institutions are obligated to comply with AML/KYC regulations, which aim to prevent money laundering and terrorist financing. AI can assist in this process, but its application must align with these regulatory frameworks. For instance, AI-driven transaction monitoring systems must be designed to identify suspicious activities while respecting individual privacy.

- Model Risk Management: Regulators are increasingly focused on the risks associated with AI models, including model accuracy, explainability, and robustness. Robust model risk management frameworks are essential to ensure the reliability and trustworthiness of AI-driven fraud detection systems.

AI’s Role in Meeting Regulatory Obligations

AI can be a powerful tool for financial institutions to meet their regulatory obligations. Its ability to process vast datasets and identify complex patterns makes it highly effective in fraud prevention and detection.

For example, AI-powered systems can automate KYC/AML checks, flagging potentially suspicious transactions in real-time. This allows institutions to comply with regulatory requirements more efficiently and effectively than traditional methods. Furthermore, AI can assist in generating audit trails and providing explanations for decisions, improving transparency and facilitating regulatory scrutiny. The ability to monitor and assess the fairness and accuracy of AI models also aids in fulfilling compliance requirements.

Best Practices for Ensuring Fairness, Transparency, and Accountability

To ensure the responsible and ethical use of AI in fraud detection, financial institutions should adopt best practices that promote fairness, transparency, and accountability.

- Bias Detection and Mitigation: Regularly assess AI models for bias and implement mitigation strategies to ensure fair and equitable outcomes. This involves careful data selection, algorithm design, and ongoing monitoring.

- Explainable AI (XAI): Employ XAI techniques to make AI decisions more transparent and understandable. This helps build trust and facilitates regulatory oversight. For instance, providing clear explanations for why a transaction was flagged as suspicious is crucial.

- Human Oversight and Control: Maintain human oversight of AI systems to ensure responsible use and prevent unintended consequences. Human intervention should be readily available to review and override AI decisions when necessary.

- Robust Model Validation and Monitoring: Implement rigorous processes for validating and monitoring AI models to ensure accuracy, stability, and compliance with regulatory requirements. This involves continuous testing, retraining, and performance evaluation.

- Documentation and Record Keeping: Maintain detailed documentation of AI model development, deployment, and performance. This is essential for demonstrating compliance and facilitating audits.
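The bias assessment described above can start with something as simple as comparing false positive rates across groups, that is, how often legitimate transactions from each group are wrongly flagged. The sketch below assumes a hypothetical log of `(group, was_flagged, is_fraud)` records with made-up counts. Which groups to compare and what gap is acceptable are policy questions the code does not answer.

```python
from collections import defaultdict

def false_positive_rate_by_group(records):
    """records: iterable of (group, was_flagged, is_fraud) tuples.

    Returns, per group, the share of legitimate transactions that were flagged.
    """
    flagged = defaultdict(int)
    legit = defaultdict(int)
    for group, was_flagged, is_fraud in records:
        if not is_fraud:
            legit[group] += 1
            if was_flagged:
                flagged[group] += 1
    return {group: flagged[group] / legit[group] for group in legit}

# Hypothetical audit log: group A has 100 legitimate transactions (5 flagged),
# group B has 50 legitimate transactions (10 flagged).
records = (
    [("A", True, False)] * 5 + [("A", False, False)] * 95
    + [("B", True, False)] * 10 + [("B", False, False)] * 40
    + [("A", True, True)] * 3 + [("B", True, True)] * 2   # true fraud is ignored here
)
rates = false_positive_rate_by_group(records)
disparity = max(rates.values()) / min(rates.values())
```

In this made-up log, legitimate customers in group B are flagged four times as often as those in group A, which is the kind of gap a fairness review would want to investigate.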

Future Trends and Challenges

The world of AI-powered fraud detection is constantly evolving, a dynamic dance between innovative technology and ever-adapting fraudsters. While AI offers powerful tools to combat financial crime, the future holds both exciting advancements and significant hurdles to overcome. Understanding these trends and challenges is crucial for financial institutions to stay ahead of the curve and maintain the integrity of their systems.

AI’s role in fraud detection is poised for a significant leap forward, fueled by emerging technologies and a deeper understanding of its capabilities and limitations. The ongoing challenge lies not just in detecting known fraud patterns, but in anticipating and proactively addressing the innovative methods employed by criminals.

Advanced Analytics and Blockchain Technology

Advanced analytics, including machine learning techniques like deep learning and natural language processing, are pushing the boundaries of fraud detection. Deep learning models, for instance, can analyze complex datasets to identify subtle patterns indicative of fraudulent activity that traditional rule-based systems might miss. Imagine a system that can analyze not just transaction amounts and locations, but also the user’s typing speed, device information, and even the emotional tone of their communication – all contributing to a more holistic risk assessment. Blockchain technology, with its immutable ledger, offers a secure and transparent environment for tracking transactions, making it harder for fraudsters to manipulate data and potentially reducing the overall risk of fraud. This enhanced transparency can streamline investigations and provide a more auditable trail for regulatory compliance. For example, tracking the provenance of digital assets using blockchain could significantly reduce the risk of money laundering and other financial crimes.
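The tamper-evidence property of an immutable ledger can be illustrated with a plain hash chain, in which every record stores the hash of the one before it. This is only a sketch of that single idea, with hypothetical function names and payloads. It leaves out everything else that makes a real blockchain (distribution, consensus, signatures).

```python
import hashlib
import json

GENESIS_HASH = "0" * 64

def _digest(prev_hash, payload):
    """SHA-256 over a canonical JSON encoding of the previous hash and payload."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def add_block(chain, payload):
    """Append a record that is linked to the hash of the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({"prev": prev_hash, "payload": payload,
                  "hash": _digest(prev_hash, payload)})

def verify(chain):
    """Recompute every hash and link; any edit to an earlier record breaks the chain."""
    prev_hash = GENESIS_HASH
    for block in chain:
        if block["prev"] != prev_hash:
            return False
        if block["hash"] != _digest(block["prev"], block["payload"]):
            return False
        prev_hash = block["hash"]
    return True

ledger = []
add_block(ledger, {"from": "acc-1", "to": "acc-2", "amount": 250})
add_block(ledger, {"from": "acc-2", "to": "acc-3", "amount": 90})
intact = verify(ledger)            # True for the untouched ledger

ledger[0]["payload"]["amount"] = 25_000   # simulate tampering with history
tampered_ok = verify(ledger)       # False: the stored hash no longer matches
```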

Adaptability to Evolving Fraud Techniques

Fraudsters are constantly evolving their tactics, making the adaptation of AI models a critical ongoing challenge. They are becoming increasingly sophisticated, leveraging techniques like synthetic identity theft (creating fake identities using real data fragments), deepfakes (manipulated videos and audio recordings used for social engineering), and exploiting vulnerabilities in AI algorithms themselves. For instance, a fraudster might try to “poison” an AI model by submitting carefully crafted fraudulent transactions that appear legitimate, thereby skewing the model’s ability to accurately identify future fraudulent activity. Another example involves using bots to generate a large volume of seemingly legitimate transactions to overwhelm the system and obscure actual fraudulent activity. To counter this, financial institutions need to invest in robust model monitoring and retraining mechanisms, incorporating techniques like adversarial training to enhance the resilience of their AI systems.
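Model monitoring often begins by checking whether live data still resembles the data the model was trained on. One widely used drift measure, brought in here as an illustration rather than something the approach above prescribes, is the Population Stability Index (PSI), which compares the share of values falling in each bin between two samples. The bin edges, the sample scores, and the 0.25 alert level (a common rule of thumb) are assumptions in this sketch.

```python
import math

def population_stability_index(expected, actual, bin_edges, eps=1e-6):
    """PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)."""
    def proportions(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            # Bin index = number of edges the value has reached or passed.
            counts[sum(1 for edge in bin_edges if v >= edge)] += 1
        # Floor at eps so empty bins do not cause log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    exp_p, act_p = proportions(expected), proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(exp_p, act_p))

# Hypothetical fraud scores at training time versus in production.
training_scores = [0.05] * 80 + [0.30] * 15 + [0.80] * 5
live_scores = [0.05] * 50 + [0.30] * 20 + [0.80] * 30
edges = [0.2, 0.6]

psi = population_stability_index(training_scores, live_scores, edges)
needs_review = psi > 0.25   # assumed rule-of-thumb alert level
```

A high PSI does not by itself mean the model is wrong, but it is a cheap signal that the population has shifted and that retraining or a closer investigation may be due.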

Ethical Implications of AI in Fraud Detection

The use of AI in fraud detection raises significant ethical considerations. Bias in training data can lead to discriminatory outcomes, disproportionately affecting certain demographic groups. For example, an AI model trained on data predominantly from one region or socioeconomic background might incorrectly flag legitimate transactions from individuals belonging to other groups. Furthermore, the use of AI for surveillance and profiling raises concerns about privacy and data security. Responsible AI development and deployment requires careful consideration of these ethical implications, including the implementation of fairness audits, transparency mechanisms, and robust data protection measures. Striking a balance between preventing fraud and protecting individual rights is paramount. This requires a multi-faceted approach encompassing rigorous testing, ethical guidelines, and ongoing monitoring of AI systems to ensure their fairness and accountability.

Outcome Summary

The integration of AI in fraud detection within financial services isn’t just about technological advancement; it’s about safeguarding our financial systems and building a more secure future. Challenges remain, from adapting to evolving fraud tactics to addressing ethical concerns, but the potential benefits are substantial. AI offers a powerful arsenal in the ongoing battle against financial crime, one that can keep learning and adapting as fraud tactics change. Deployed carefully, intelligent fraud detection can help keep the future of finance secure.