The Potential of Artificial Intelligence in Preventing Cyberattacks: Forget clunky antivirus software – the future of cybersecurity is here, and it’s powered by AI. Imagine a world where cyber threats are identified and neutralized before they even reach your system. That’s the promise of AI, a game-changer that’s rapidly transforming how we defend against the ever-evolving landscape of digital attacks. This isn’t just about detecting malware; it’s about predicting, preventing, and proactively securing our digital lives.

From AI-powered threat detection systems that learn and adapt to new threats in real-time to automated vulnerability management and AI-enhanced SIEM solutions, the possibilities are vast. We’ll dive deep into how AI analyzes massive datasets to spot anomalies, predicts potential attacks, and even helps build more secure software from the ground up. We’ll also explore the ethical considerations and challenges that come with this powerful technology, ensuring a balanced and insightful look at this crucial area of cybersecurity.

AI-Powered Threat Detection and Prevention

The rise of sophisticated cyberattacks necessitates equally advanced defense mechanisms. Artificial intelligence (AI), particularly machine learning, offers a powerful solution by enabling proactive threat detection and prevention capabilities far exceeding traditional methods. Its ability to analyze vast amounts of data in real-time allows for the identification of subtle anomalies and patterns indicative of malicious activity, significantly improving cybersecurity posture.

Machine Learning for Real-Time Malicious Activity Identification

Machine learning algorithms, specifically those employing supervised and unsupervised learning techniques, are crucial for real-time threat detection. Supervised learning uses labeled datasets of known malicious and benign activities to train models capable of classifying new events. Unsupervised learning, on the other hand, identifies patterns and anomalies in unlabeled data, uncovering previously unseen threats. These algorithms analyze network traffic, system logs, and user behavior to identify deviations from established baselines, flagging suspicious activities for immediate investigation. For instance, a model trained on known phishing email characteristics can swiftly identify and block similar attempts, even if the specific content varies slightly.
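As a rough illustration of the supervised case, the sketch below trains a tiny naive Bayes text classifier on a handful of labeled subject lines. The training examples, the `NaiveBayes` class, and the whitespace tokenizer are all assumptions made up for this example; a production filter would use far more data and richer features (headers, URLs, sender reputation).

```python
import math
from collections import Counter


def tokenize(text):
    # Deliberately naive: lowercase and split on whitespace.
    return text.lower().split()


class NaiveBayes:
    """Minimal multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = {}        # label -> Counter of token frequencies
        self.doc_counts = Counter()  # label -> number of training documents
        self.vocab = set()

    def fit(self, texts, labels):
        for text, label in zip(texts, labels):
            self.doc_counts[label] += 1
            counts = self.word_counts.setdefault(label, Counter())
            for tok in tokenize(text):
                counts[tok] += 1
                self.vocab.add(tok)
        return self

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            # Log prior plus the sum of smoothed log likelihoods.
            score = math.log(self.doc_counts[label] / total_docs)
            denom = sum(counts.values()) + len(self.vocab)
            for tok in tokenize(text):
                score += math.log((counts[tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical labeled examples, invented for illustration only.
texts = [
    "verify your account password now",
    "urgent account suspended click link",
    "confirm password to avoid suspension",
    "meeting agenda for monday",
    "lunch menu for the team",
    "project status report attached",
]
labels = ["phishing"] * 3 + ["benign"] * 3

model = NaiveBayes().fit(texts, labels)
print(model.predict("please verify your password urgently"))
print(model.predict("team meeting on monday"))
```

The first message shares no exact wording with any single training example, yet it is still scored as closer to the phishing class, which is the "content varies slightly" behavior described above.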

AI’s Role in Detecting Zero-Day Exploits and Unknown Threats

Zero-day exploits, by their nature, lack established signatures, which makes them hard for signature-based security systems to catch. AI addresses this gap through anomaly detection: once a baseline of normal system behavior is established, algorithms can flag deviations that may signal a zero-day attack. In practice this means analyzing network traffic patterns, system call sequences, and memory access patterns for unusual spikes or unexpected interactions. For example, an AI system might detect unusual outbound connections from a normally quiet server, indicating a potential compromise and subsequent exfiltration of data. A deviation is a lead rather than proof of compromise, so flagged events still need investigation. Detection models can also be kept current through periodic retraining or, in some designs, reinforcement learning, so that their picture of normal and abnormal behavior keeps pace with the environment.
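The baseline idea can be sketched with nothing more than a mean and standard deviation. The hourly connection counts, the function names, and the threshold of three standard deviations are assumptions chosen for illustration; real systems model many features at once and usually account for time of day.

```python
from statistics import mean, stdev


def zscore(history, observed):
    """How many standard deviations `observed` sits above the baseline mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly flat baseline: any change at all is maximally surprising.
        return 0.0 if observed == mu else float("inf")
    return (observed - mu) / sigma


def is_anomalous(history, observed, threshold=3.0):
    # One-sided check: only spikes above the baseline are flagged.
    return zscore(history, observed) > threshold


# Hypothetical hourly outbound connection counts for a normally quiet server.
baseline = [4, 6, 5, 7, 5, 6, 4, 5]

print(is_anomalous(baseline, 6))    # within the usual range
print(is_anomalous(baseline, 120))  # sudden burst of outbound connections
```

No signature of any specific exploit is involved; the burst is flagged purely because it departs from what this server normally does.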

Comparison of AI-Based Intrusion Detection Systems

Intrusion detection systems (IDS) fall into a few broad families, each with its own strengths and weaknesses. Signature-based IDS match traffic against known attack patterns; they are precise for known threats but cannot see attacks they have no signature for. Anomaly-based IDS, where most machine learning is applied, flag deviations from normal behavior; they can surface novel attacks but tend to produce more false positives. Hybrid approaches combine both methods for broader coverage. Within the AI-based group, some systems use deep learning models to analyze network traffic, while others apply machine learning algorithms to log files. The effectiveness of each system depends on the quality of the training data, the complexity of the algorithms, and the environment in which it is deployed, so the right choice depends on the organization’s specific needs and resources.

AI-Driven Anomaly Detection System for Network Traffic

An effective AI-driven anomaly detection system for network traffic would incorporate several key features. First, it would take a multi-layered approach, combining statistical methods with machine learning algorithms for robust detection. Second, it would analyze data in real time, enabling immediate response to detected anomalies. Third, it would adapt automatically to changing network conditions and evolving attack patterns, for example through continuous baseline updates, periodic retraining, or reinforcement learning. Finally, it would provide clear and actionable alerts, minimizing false positives and prioritizing critical threats. Such a system could analyze network flow data to identify unusual traffic patterns, such as an unexpectedly high volume of data transferred to an external IP address or unusual communication between internal systems.
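One simple way to get the "adapts to changing conditions" property is an exponentially weighted moving average (EWMA) baseline that updates with every normal observation. This is only the statistical layer of such a system, not a machine learning model, and the `EwmaDetector` class, its parameters, and the traffic figures are assumptions for illustration.

```python
import math


class EwmaDetector:
    """Streaming anomaly detector with an exponentially weighted baseline."""

    def __init__(self, alpha=0.2, k=3.0, warmup=5):
        self.alpha = alpha    # how quickly the baseline follows new data
        self.k = k            # alert threshold in standard deviations
        self.warmup = warmup  # observations required before alerting
        self.mean = 0.0
        self.var = 0.0
        self.seen = 0

    def observe(self, value):
        """Return True if `value` is anomalous relative to the running baseline."""
        if self.seen == 0:
            self.mean = value
            self.seen = 1
            return False
        deviation = value - self.mean
        anomalous = (
            self.seen >= self.warmup
            and abs(deviation) > self.k * math.sqrt(self.var)
        )
        if not anomalous:
            # Only normal traffic updates the baseline, so an attack in
            # progress does not teach the detector that it is normal.
            self.mean += self.alpha * deviation
            self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
            self.seen += 1
        return anomalous


# Hypothetical megabytes per minute sent to one external IP address.
traffic = [100, 110, 95, 105, 102, 98, 104, 5000, 101]
detector = EwmaDetector()
flags = [detector.observe(v) for v in traffic]
print(flags)
```

Excluding flagged values from the update is a design choice with a cost: a legitimate, permanent change in traffic will keep alerting until someone resets or retrains the baseline.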

Predicting Potential Cyberattacks Using AI

AI’s ability to analyze massive datasets allows for the prediction of potential cyberattacks. By correlating various data sources, such as threat intelligence feeds, network traffic patterns, and vulnerability scans, AI algorithms can identify emerging threats and predict likely targets. For example, an AI system might detect a surge in exploitation attempts targeting a specific vulnerability in a widely used software package and predict a wave of attacks against organizations that run it. This predictive capability enables proactive security measures, such as patching vulnerable systems or implementing additional security controls before an attack occurs. Acting early in this way can significantly reduce both the likelihood and the impact of a successful attack.
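The correlation step in that example can be sketched as a join between two data sources: exploitation-attempt counts from a threat feed and the unpatched findings from a vulnerability scan. The placeholder CVE identifiers, host names, counts, and thresholds below are all invented for illustration, and a simple week-over-week ratio stands in for whatever forecasting model a real system would use.

```python
def surging_cves(attempts, surge_factor=3.0, min_attempts=50):
    """Return CVE IDs whose exploitation attempts jumped week over week.

    `attempts` maps a CVE ID to (last_week_count, this_week_count).
    """
    surging = set()
    for cve, (last_week, this_week) in attempts.items():
        # Require both meaningful volume and a sharp relative increase.
        if this_week >= min_attempts and this_week >= surge_factor * max(last_week, 1):
            surging.add(cve)
    return surging


def hosts_to_patch_first(attempts, unpatched_by_host):
    """Map each exposed host to the surging CVEs it has not yet patched."""
    hot = surging_cves(attempts)
    return {
        host: sorted(cves & hot)
        for host, cves in unpatched_by_host.items()
        if cves & hot
    }


# Hypothetical threat-feed counts and scan results (placeholder IDs).
attempts = {
    "CVE-EXAMPLE-A": (40, 900),   # sharp surge
    "CVE-EXAMPLE-B": (300, 320),  # steady background noise
    "CVE-EXAMPLE-C": (0, 5),      # too little volume to matter yet
}
unpatched_by_host = {
    "web-01": {"CVE-EXAMPLE-A", "CVE-EXAMPLE-B"},
    "db-01": {"CVE-EXAMPLE-B"},
    "vpn-01": {"CVE-EXAMPLE-A", "CVE-EXAMPLE-C"},
}

priority = hosts_to_patch_first(attempts, unpatched_by_host)
print(priority)
```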

AI in Vulnerability Management

The digital landscape is a minefield of vulnerabilities, constantly evolving and expanding. Traditional vulnerability management struggles to keep pace with this relentless onslaught. Enter artificial intelligence (AI), offering a powerful new arsenal to identify, prioritize, and mitigate these threats with unprecedented speed and accuracy. AI’s ability to analyze vast datasets, identify patterns, and learn from past experiences makes it an invaluable asset in the fight against cyberattacks.

AI significantly enhances vulnerability management by automating previously manual and time-consuming tasks. This automation frees up security professionals to focus on more strategic initiatives, improving overall security posture and reducing the risk of successful breaches. By leveraging machine learning algorithms, AI systems can analyze network traffic, system logs, and application code to detect weaknesses far more efficiently than human analysts alone.

Automated Vulnerability Scanning and Assessment

AI-powered vulnerability scanners aim to go beyond traditional tools by analyzing codebases and configurations for subtle flaws that rule-based systems often miss. They can also help surface zero-day vulnerabilities, meaning flaws that are not yet publicly known or patched, by identifying unusual patterns in network traffic or system behavior that suggest a weakness is being probed or exploited. This proactive approach can improve the speed and accuracy of vulnerability discovery, providing a more comprehensive understanding of an organization’s security posture. For example, an AI system might point to a previously unknown vulnerability in a specific piece of software by recognizing a pattern of unusual network requests aimed at it from a particular user account, even before a public exploit is available.

Prioritization of Vulnerabilities Based on Severity and Potential Impact

AI algorithms can prioritize vulnerabilities based on a range of factors, including their severity (CVSS score), the likelihood of exploitation, and the potential impact on the organization. This prioritization ensures that security teams focus their efforts on the most critical threats first. A well-trained AI model could, for example, rank a vulnerability on a critical, highly exposed system above one on an isolated, low-value system, even if the latter has a higher CVSS score. This risk-based approach optimizes resource allocation and enhances overall security effectiveness.
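A minimal sketch of risk-based ranking follows. The multiplicative formula, the field names, and every number in it are assumptions for illustration; in a real deployment the likelihood and criticality inputs would come from a trained model and an asset inventory rather than being typed in by hand.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    cvss: float                # base severity, 0.0 to 10.0
    exploit_likelihood: float  # 0.0 to 1.0, e.g. from a predictive model
    asset_criticality: float   # 0.0 to 1.0, business importance of the asset
    exposure: float            # 0.0 (isolated) to 1.0 (internet-facing)

    def risk(self):
        # Illustrative score: severity scaled by context, not a standard formula.
        return (
            (self.cvss / 10)
            * self.exploit_likelihood
            * self.asset_criticality
            * self.exposure
        )


# Hypothetical findings.
findings = [
    Finding("lab-box remote code execution", 9.8, 0.1, 0.3, 0.2),
    Finding("payment API auth bypass", 7.5, 0.9, 1.0, 1.0),
]

ranked = sorted(findings, key=Finding.risk, reverse=True)
for f in ranked:
    print(f"{f.risk():.3f}  {f.name}")
```

The finding with the lower CVSS score ends up first because it sits on an exposed, business-critical system where exploitation is likely.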

AI in Patching Vulnerabilities and Risk Mitigation

AI can not only identify vulnerabilities but also assist in patching them and mitigating the associated risks. AI systems can automate the patching process, ensuring that systems are updated with the latest security patches promptly. Moreover, AI can suggest optimal mitigation strategies based on the specific vulnerability and the organization’s unique infrastructure. For instance, if a vulnerability is found in a specific application, AI could recommend implementing a web application firewall (WAF) or deploying intrusion detection/prevention systems (IDS/IPS) to mitigate the risk. This proactive approach minimizes the window of vulnerability and reduces the chances of successful exploitation.

Integrating AI into a Vulnerability Management Program

Integrating AI into a vulnerability management program requires a phased approach.

- Assessment and Planning: Evaluate current vulnerability management processes and identify areas where AI can add value. Define clear objectives and metrics for success.

- Selection and Implementation: Choose AI-powered vulnerability management tools that align with the organization’s needs and integrate them into existing security infrastructure.

- Data Integration and Training: Gather and prepare relevant data for AI model training, ensuring data quality and consistency. Train the AI models using historical vulnerability data and security logs.

- Monitoring and Refinement: Continuously monitor the AI system’s performance, analyze its outputs, and refine the models based on new data and insights. This iterative process is crucial for maintaining accuracy and effectiveness.

- Team Training and Collaboration: Train security teams on how to use and interpret the AI system’s outputs, fostering collaboration between humans and machines.

Challenges and Limitations of Using AI for Vulnerability Management

While AI offers significant advantages, challenges remain. The accuracy of AI models depends heavily on the quality and quantity of training data. Biased or incomplete data can lead to inaccurate predictions and missed vulnerabilities. Furthermore, AI systems can be computationally expensive and require significant infrastructure investment. Finally, the “black box” nature of some AI algorithms can make it difficult to understand how they arrive at their conclusions, potentially hindering trust and adoption.

AI for Security Information and Event Management (SIEM)

Traditional SIEM systems have struggled to keep pace with the ever-increasing volume and complexity of security data. Enter AI, offering a powerful solution to enhance threat detection and response capabilities within SIEM platforms. AI-powered SIEM solutions leverage machine learning and other advanced techniques to analyze vast amounts of security logs, identify anomalies, and prioritize alerts far more effectively than their predecessors. This leads to faster incident response, reduced dwell time for attackers, and a significant improvement in overall security posture.

Comparison of Traditional and AI-Enhanced SIEM Systems

The following table highlights the key differences between traditional SIEM systems and those enhanced with AI capabilities:

| Feature | Traditional SIEM | AI-Enhanced SIEM |
|---|---|---|
| Log Analysis | Relies heavily on predefined rules and signatures, often missing subtle anomalies. Limited scalability with large datasets. | Utilizes machine learning algorithms to identify patterns and anomalies in log data, regardless of predefined rules. Scales efficiently to handle massive datasets. |
| Alerting | Generates numerous false positives, requiring significant manual investigation. Prioritization is often based on simple rules. | Reduces false positives significantly through anomaly detection and advanced correlation. Prioritizes alerts based on risk scores and likelihood of malicious activity. |
| Threat Detection | Detects known threats based on predefined signatures. Struggles with sophisticated, zero-day attacks. | Identifies both known and unknown threats through anomaly detection and behavioral analysis. Adapts to new attack techniques more effectively. |
| Incident Response | Requires manual investigation and remediation, which can be time-consuming and resource-intensive. | Automates incident response and remediation through workflows triggered by AI-driven alerts. Provides automated containment and recovery actions. |

AI’s Impact on Security Log Analysis Accuracy and Efficiency

AI improves the accuracy and efficiency of security log analysis by automating the identification of patterns and anomalies that human analysts or static rules are likely to miss. Machine learning algorithms can analyze vast amounts of data in real time, identifying subtle correlations and deviations from normal behavior that indicate potential threats. This leads to faster detection of security incidents and reduces the time spent on manual investigation. For example, AI can flag login attempts from locations that are unusual for a specific user, or identify subtle changes in system behavior that might indicate malware infection, without anyone having to write a rule for each case in advance.

AI-Driven Correlation of Security Events

AI excels at correlating security events from diverse sources, such as firewalls, intrusion detection systems, and endpoint security agents, to identify complex attacks. Traditional SIEM systems often struggle with this task due to the sheer volume and complexity of data. AI algorithms can analyze these disparate data streams, identify relationships between seemingly unrelated events, and construct a comprehensive picture of an attack. This allows security teams to understand the full scope of an attack and respond more effectively. For instance, AI might correlate a suspicious email with a subsequent malware infection and a compromised server, revealing a sophisticated phishing attack.
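The phishing example can be sketched as a search for an ordered chain of events on the same host within a time window. This is a fixed, hand-written chain rather than a learned correlation, and the event types, field names, host names, and one-hour window are assumptions for illustration.

```python
from collections import defaultdict

# Stages of the attack in the order they are expected to occur.
CHAIN = ["suspicious_email", "malware_detected", "unusual_outbound"]


def find_attack_chains(events, window_seconds=3600):
    """Return hosts where every CHAIN stage occurs in order within the window.

    Each event is a dict with 'ts' (epoch seconds), 'host', and 'type'.
    """
    by_host = defaultdict(list)
    for event in sorted(events, key=lambda e: e["ts"]):
        by_host[event["host"]].append(event)

    hits = []
    for host, host_events in by_host.items():
        stage, start = 0, None
        for event in host_events:
            # Abandon a partial chain once it is older than the window.
            if start is not None and event["ts"] - start > window_seconds:
                stage, start = 0, None
            if event["type"] == CHAIN[stage]:
                if stage == 0:
                    start = event["ts"]
                stage += 1
                if stage == len(CHAIN):
                    hits.append(host)
                    break
    return hits


# Hypothetical events from a mail gateway, an endpoint agent, and a firewall.
events = [
    {"ts": 0, "host": "ws-12", "type": "suspicious_email"},
    {"ts": 600, "host": "ws-12", "type": "malware_detected"},
    {"ts": 900, "host": "ws-12", "type": "unusual_outbound"},
    {"ts": 100, "host": "ws-20", "type": "suspicious_email"},
    {"ts": 9000, "host": "ws-20", "type": "malware_detected"},
    {"ts": 9100, "host": "ws-20", "type": "unusual_outbound"},
]

print(find_attack_chains(events))
```

On `ws-20` the same three event types appear, but too far apart to count as one incident. A learned correlator would replace the fixed `CHAIN` and window with relationships inferred from past incidents.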

AI-Powered Automated Incident Response and Remediation

AI facilitates automated incident response and remediation by triggering pre-defined workflows based on AI-driven alerts. This automation reduces the time it takes to contain and remediate security incidents, minimizing the impact on the organization. For example, an AI-powered SIEM system could automatically quarantine a compromised endpoint upon detecting malicious activity, preventing further spread of malware. It could also automatically reset compromised user passwords and initiate other remediation actions based on predefined playbooks.
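The playbook idea can be sketched as a lookup from alert type to an ordered list of actions, gated by the detector's confidence. The action functions here only record what they would do; the alert fields, playbook names, and the 0.8 confidence cutoff are assumptions for illustration, not any product's API.

```python
def quarantine_endpoint(alert, log):
    log.append(f"quarantine {alert['host']}")


def reset_password(alert, log):
    log.append(f"reset password for {alert['user']}")


def open_ticket(alert, log):
    log.append(f"open ticket for {alert['type']} on {alert['host']}")


# Predefined playbooks: alert type -> ordered remediation steps.
PLAYBOOKS = {
    "malware_detected": [quarantine_endpoint, open_ticket],
    "credential_compromise": [reset_password, open_ticket],
}


def respond(alert, min_confidence=0.8):
    """Run the matching playbook, or hand off to a human if confidence is low."""
    log = []
    if alert["confidence"] < min_confidence:
        # Disruptive actions are not automated on weak evidence.
        log.append("escalate to analyst")
        return log
    for action in PLAYBOOKS.get(alert["type"], [open_ticket]):
        action(alert, log)
    return log


# Hypothetical alerts.
strong = {"type": "malware_detected", "host": "ws-12", "user": "alice", "confidence": 0.95}
weak = {"type": "malware_detected", "host": "ws-30", "user": "bob", "confidence": 0.55}

print(respond(strong))
print(respond(weak))
```

Keeping a confidence gate in front of disruptive actions such as quarantine is one way to limit the damage a false positive can do.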

AI-Powered SIEM Features for Reducing False Positives and Improving Alert Prioritization

AI-powered SIEM solutions incorporate features intended to reduce false positives and improve alert prioritization. These features include anomaly detection, behavioral analysis, and risk scoring. Anomaly detection identifies deviations from established baselines, flagging potentially malicious activity. Behavioral analysis monitors user and system activity, identifying unusual patterns that might indicate a threat. Risk scoring assigns a priority level to each alert based on the severity and likelihood of the threat, enabling security teams to focus on the most critical issues first. Anomaly detection on its own can be noisy, so the reduction in false positives comes mainly from combining these signals and tuning them to the environment. Done well, this improves the efficiency of security operations and reduces the burden on security analysts.

AI in Cybersecurity Training and Awareness

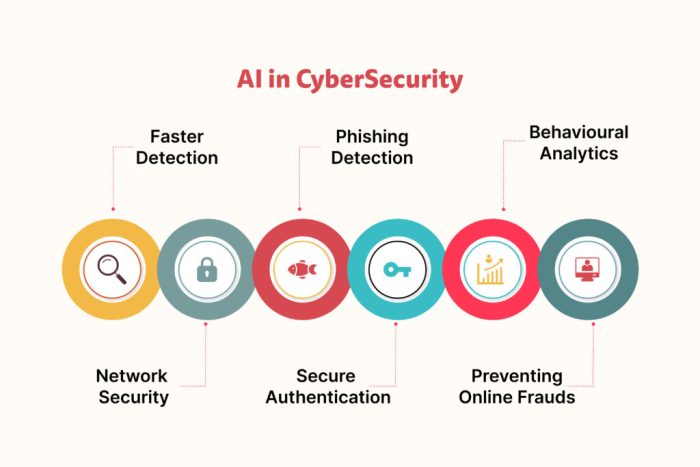

Source: orangemantra.com


Cybersecurity threats are constantly evolving, making traditional training methods increasingly ineffective. AI offers a powerful solution, enabling the creation of dynamic, personalized, and highly effective cybersecurity training programs that adapt to the ever-changing landscape of threats and the unique needs of individual employees. By leveraging AI’s capabilities, organizations can significantly improve their cybersecurity posture and reduce the risk of human error, a major factor in many breaches.

AI’s role in cybersecurity training extends beyond simply delivering information; it provides a mechanism for creating engaging and realistic simulations, personalized learning paths, and continuous evaluation of training effectiveness. This allows for a proactive and adaptive approach to cybersecurity awareness, ensuring employees are equipped to handle the latest threats.

AI-Powered Cybersecurity Training Simulation Platform

An AI-powered platform for cybersecurity training could simulate a range of realistic cyberattack scenarios, from phishing emails and malware infections to pretexting phone calls and denial-of-service attempts. The platform would dynamically adjust the difficulty and complexity of the simulations based on the user’s performance and learning progress. For instance, a user who consistently fails to identify phishing attempts might be given more practice at the current level, with the cues they keep missing highlighted, before moving on to more sophisticated emails that use advanced social engineering techniques. Conversely, a user who demonstrates proficiency in identifying phishing attempts might be challenged with more complex scenarios involving malware analysis or network intrusion detection. The platform would also provide detailed feedback on user actions, explaining the consequences of their decisions and highlighting areas for improvement. This feedback loop is crucial for reinforcing learning and improving decision-making skills under pressure.
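The difficulty adjustment can be sketched as a small rule driven by recent accuracy. This version assumes the platform eases off when a learner is struggling and steps up when they are doing well; the function name, thresholds, and five-level scale are hypothetical.

```python
def next_level(level, recent_results, promote_at=0.8, demote_at=0.4,
               min_level=1, max_level=5):
    """Pick the next simulation difficulty from recent pass/fail results.

    `recent_results` is a list of booleans, True for a correctly handled
    scenario. Levels run from `min_level` (easiest) to `max_level`.
    """
    if not recent_results:
        return level
    accuracy = sum(recent_results) / len(recent_results)
    if accuracy >= promote_at:
        return min(level + 1, max_level)
    if accuracy <= demote_at:
        return max(level - 1, min_level)
    return level


# Hypothetical learners, each currently at level 2.
print(next_level(2, [True, True, True, True, False]))      # doing well
print(next_level(2, [False, False, False, True, False]))   # struggling
print(next_level(2, [True, False, True, False, True]))     # in between
```

A learned model could replace the fixed thresholds, for example by estimating which scenario type a given user is most likely to fail next.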

Interactive Cybersecurity Training Modules

A comprehensive AI-powered training program would include several interactive modules focusing on key areas of cybersecurity best practices. These modules could incorporate gamification elements, such as points, badges, and leaderboards, to increase engagement and motivation.

Examples of interactive modules could include:

- Phishing Awareness: This module would present users with a series of emails and websites, some legitimate and some malicious, requiring them to identify the phishing attempts. AI could analyze user responses to identify patterns and weaknesses in their ability to detect phishing attacks, tailoring subsequent training to address those weaknesses.

- Password Management: This module would teach users best practices for creating and managing strong passwords, including the use of password managers and multi-factor authentication. The AI could provide personalized feedback on password strength and offer suggestions for improvement.

- Data Security: This module would cover topics such as data classification, access control, and data encryption. AI could simulate real-world scenarios, such as data breaches, and guide users through the appropriate response procedures.

- Social Engineering Awareness: This module would educate users on various social engineering techniques used by attackers and provide strategies for identifying and avoiding these attacks. The AI could present realistic scenarios, such as phone calls or emails from fraudulent individuals, and guide users on how to respond appropriately.

Personalized Cybersecurity Training Based on User Needs

AI can personalize cybersecurity training by analyzing individual user performance data and adapting the training content and difficulty accordingly. This ensures that each employee receives training tailored to their specific needs and skill levels. For example, a user who consistently struggles with identifying malicious websites might receive additional training on identifying suspicious URLs and website security indicators, while a user who demonstrates a strong understanding of these concepts might be challenged with more advanced scenarios involving malware analysis or network intrusion detection. This personalized approach maximizes the effectiveness of the training and ensures that employees are adequately prepared to handle the cybersecurity challenges they are most likely to face.

AI in Identifying and Addressing Human Error

Human error is a significant factor in many cyberattacks. AI can help identify patterns of human error within an organization by analyzing security logs, incident reports, and user behavior data. This analysis can reveal common mistakes, such as clicking on malicious links or failing to follow security protocols. Once these patterns are identified, AI can be used to create targeted training modules to address these specific weaknesses. For example, if the analysis reveals a high rate of employees falling victim to phishing attacks, the AI can create a personalized training module focused on improving phishing awareness and detection skills for those specific employees.

AI-Driven Evaluation of Cybersecurity Training Effectiveness

AI can be used to evaluate the effectiveness of cybersecurity training programs by analyzing various metrics, including the number of successful phishing attempts, the number of security incidents, and user performance on training simulations. This data can be used to identify areas where the training is effective and areas where improvements are needed. For example, if the analysis reveals a high rate of successful phishing attempts following a training program, it indicates that the training needs to be revised or improved. AI can also identify correlations between specific training modules and improvements in user behavior, helping to optimize the training curriculum and ensure that it addresses the most critical vulnerabilities.
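Before any modeling, the basic before-and-after comparison is simple arithmetic. The sketch below computes the click rate in simulated phishing campaigns run before and after training, the relative reduction, and a two-proportion z statistic as a rough check that the change is not just noise. The campaign numbers and function names are invented for illustration.

```python
import math


def click_rate(clicks, recipients):
    return clicks / recipients


def relative_reduction(before_rate, after_rate):
    """Fraction by which the click rate fell (negative if it rose)."""
    return (before_rate - after_rate) / before_rate


def two_proportion_z(clicks_a, n_a, clicks_b, n_b):
    """z statistic for the difference between two click rates (pooled)."""
    p_a, p_b = clicks_a / n_a, clicks_b / n_b
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_a - p_b) / std_err


# Hypothetical simulated-phishing campaigns: (clicks, recipients).
before = (60, 400)
after = (24, 400)

rate_before = click_rate(*before)
rate_after = click_rate(*after)
reduction = relative_reduction(rate_before, rate_after)
z = two_proportion_z(*before, *after)

print(f"click rate before: {rate_before:.1%}, after: {rate_after:.1%}")
print(f"relative reduction: {reduction:.0%}, z = {z:.2f}")
```

A z value above roughly 1.96 corresponds to the conventional 5% significance level. It says the drop is unlikely to be chance, not that the training caused it, since other factors may have changed between campaigns.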

Ethical Considerations and Challenges

The rise of AI in cybersecurity presents a double-edged sword. While offering unprecedented protection against sophisticated threats, it also introduces a complex web of ethical dilemmas and practical challenges that demand careful consideration. Ignoring these issues risks exacerbating existing inequalities and creating new vulnerabilities. We need to ensure that AI enhances, not undermines, cybersecurity’s core principles of fairness, transparency, and accountability.

AI algorithms, like their human creators, are not immune to bias. Data used to train these algorithms often reflects the circumstances in which it was collected, and the resulting skew can lead to unfair or ineffective outcomes. For example, a behavior model trained mostly on the activity of one group of users, such as office staff working standard hours, might flag legitimate activity from shift workers or remote teams as suspicious while missing threats that blend in with the majority pattern. Blind spots of this kind require proactive mitigation strategies.

Potential Biases in AI Algorithms and Their Impact on Cybersecurity

Bias in AI algorithms can manifest in several ways, impacting cybersecurity effectiveness. For instance, an AI system trained primarily on data from one geographic region might be less adept at identifying threats originating from another. Similarly, if the training data overrepresents certain types of attacks, the system might prioritize those threats while neglecting others, creating vulnerabilities. This necessitates careful curation and diversification of training datasets, alongside ongoing monitoring and auditing of AI systems to detect and correct for bias. Regular retraining with updated, diverse datasets is crucial to maintain accuracy and fairness. Imagine a system trained primarily on phishing emails targeting financial institutions; it might miss more subtle phishing attempts targeting other sectors.

Ethical Implications of Using AI for Surveillance and Monitoring Employee Activity

The use of AI for employee monitoring raises significant ethical concerns. While AI can help detect insider threats, its deployment must be carefully balanced against employee privacy rights. The potential for misuse, leading to unwarranted surveillance and potential discrimination, is a serious consideration. Transparency is paramount; employees should be informed about the types of monitoring in place and the data being collected. Clear guidelines and robust oversight mechanisms are essential to prevent abuses of power and maintain trust. For instance, implementing data minimization principles – collecting only the data absolutely necessary for security – can mitigate privacy concerns. Furthermore, regular audits and independent reviews of AI-powered monitoring systems can help ensure ethical compliance.

Legal and Regulatory Challenges Associated with AI in Cybersecurity

The rapid advancement of AI in cybersecurity outpaces the development of relevant legal and regulatory frameworks. This creates uncertainty for organizations and individuals alike. Questions around liability in case of AI-related security breaches, data privacy regulations concerning AI-processed data, and the appropriate level of human oversight needed for AI-driven security systems remain largely unanswered. Harmonizing international regulations is also a significant challenge. The absence of clear legal guidelines can hinder innovation and create a climate of uncertainty, discouraging the adoption of potentially beneficial AI-powered security solutions. A collaborative effort involving policymakers, industry experts, and legal scholars is needed to establish a robust and adaptable regulatory landscape.

Measures to Ensure Transparency and Accountability in AI-Driven Security Systems

Transparency and accountability are crucial for building trust in AI-driven security systems. This necessitates clear documentation of the algorithms used, the data sources, and the decision-making processes. Organizations should implement mechanisms for auditing and reviewing AI systems to detect biases and ensure their effectiveness. Furthermore, establishing clear lines of responsibility for AI-related security incidents is crucial. This includes developing processes for investigating breaches and determining accountability for any failures. Regularly publishing transparency reports detailing the performance and limitations of AI systems can foster trust and accountability. These reports could include metrics such as false positive and false negative rates, as well as information about the datasets used to train the AI.
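The false positive and false negative rates mentioned for transparency reports are straightforward to compute once alerts have been reviewed and labeled. The sketch below also breaks them down by group, which is one simple way to audit for uneven performance. The record format, sector names, and data are assumptions for illustration.

```python
from collections import defaultdict


def error_rates(records):
    """Compute false positive and false negative rates.

    Each record is (group, actually_malicious, flagged_by_system).
    A rate is None when it is undefined for the given records.
    """
    fp = sum(1 for _, actual, flagged in records if flagged and not actual)
    fn = sum(1 for _, actual, flagged in records if actual and not flagged)
    negatives = sum(1 for _, actual, _ in records if not actual)
    positives = sum(1 for _, actual, _ in records if actual)
    return {
        "false_positive_rate": fp / negatives if negatives else None,
        "false_negative_rate": fn / positives if positives else None,
    }


def error_rates_by_group(records):
    grouped = defaultdict(list)
    for record in records:
        grouped[record[0]].append(record)
    return {group: error_rates(rows) for group, rows in grouped.items()}


# Hypothetical reviewed alerts: (sector, actually malicious, flagged).
records = [
    ("finance", True, True), ("finance", True, True),
    ("finance", False, False), ("finance", False, True),
    ("healthcare", True, False), ("healthcare", True, True),
    ("healthcare", False, False), ("healthcare", False, False),
]

overall = error_rates(records)
by_group = error_rates_by_group(records)
print(overall)
print(by_group)
```

In this made-up data the overall rates look balanced, while the per-group view shows that missed threats are concentrated in one sector, which is the kind of gap an audit is meant to expose.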

Mitigation of Risks Associated with AI-Powered Cyberattacks

AI is not only a tool for defense; it can also be weaponized for offensive purposes. AI-powered cyberattacks can be more sophisticated, adaptive, and difficult to detect than traditional attacks. Mitigation strategies include developing AI systems capable of detecting and responding to AI-driven attacks, investing in robust cybersecurity infrastructure, and fostering collaboration among researchers and security professionals to share threat intelligence and develop countermeasures. For example, developing AI systems that can identify anomalies in network traffic patterns indicative of AI-driven attacks, or creating honeypots designed to attract and analyze AI-powered attacks, are crucial steps in mitigating this risk. Regular security audits and penetration testing, incorporating AI-powered attack simulations, can also help organizations identify and address vulnerabilities before they are exploited.

AI for Secure Software Development

The software development lifecycle (SDLC) is increasingly vulnerable to sophisticated cyberattacks. Traditional security measures often struggle to keep pace with the evolving threat landscape. This is where Artificial Intelligence steps in, offering a powerful new arsenal for building more secure software from the ground up. AI’s ability to analyze vast amounts of data and identify patterns allows it to detect vulnerabilities that might otherwise go unnoticed, significantly improving the overall security posture of applications.

AI can be instrumental in identifying security vulnerabilities within source code during various stages of the SDLC. This proactive approach reduces the risk of exploitation and minimizes the impact of potential breaches. By integrating AI tools throughout the development process, organizations can shift from a reactive to a preventative security model.

AI-Powered Static and Dynamic Code Analysis

AI algorithms can analyze source code for potential security flaws. Static analysis examines code without executing it, identifying vulnerabilities based on coding patterns and known weaknesses. Dynamic analysis, on the other hand, involves executing the code and observing its behavior to detect runtime vulnerabilities. AI enhances both techniques by learning from large datasets of known vulnerabilities and identifying subtle patterns that might evade traditional methods. For instance, AI can identify potential SQL injection vulnerabilities by analyzing code that interacts with databases, in some cases even when the code is obfuscated or uses unconventional methods. This allows developers to address security risks before deployment, reducing the attack surface.
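To make the static side concrete, the sketch below uses Python's standard `ast` module to flag `.execute(...)` calls whose query is built dynamically. This is a plain rule-based check, not a learned model; it shows the kind of syntactic pattern that AI-assisted analyzers start from and then generalize. The function name and the sample code are assumptions for illustration.

```python
import ast


def find_sql_injection_risks(source):
    """Return line numbers of .execute() calls with a dynamically built query."""
    risky = []
    for node in ast.walk(ast.parse(source)):
        if not (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "execute"
            and node.args
        ):
            continue
        query = node.args[0]
        # f-strings parse as JoinedStr; "+" and "%" formatting parse as BinOp.
        built_inline = isinstance(query, (ast.JoinedStr, ast.BinOp))
        # "...".format(...) parses as a Call on an attribute named "format".
        uses_format = (
            isinstance(query, ast.Call)
            and isinstance(query.func, ast.Attribute)
            and query.func.attr == "format"
        )
        if built_inline or uses_format:
            risky.append(node.lineno)
    return sorted(risky)


# Hypothetical code under review. The code is parsed, never executed.
SAMPLE = '''
def lookup(cursor, name):
    cursor.execute("SELECT * FROM users WHERE name = '" + name + "'")
    cursor.execute(f"SELECT * FROM users WHERE name = '{name}'")
    cursor.execute("SELECT * FROM users WHERE name = ?", (name,))
'''

print(find_sql_injection_risks(SAMPLE))
```

The check misses queries assembled in a variable before the call and will flag safe concatenations of constants, which is exactly where data-flow analysis and learned models are meant to do better than a fixed rule.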

AI-Driven Automated Security Testing

Automated security testing is a crucial aspect of modern software development. AI significantly boosts the effectiveness of these tests by automating the process of identifying and prioritizing potential vulnerabilities. AI-powered tools can generate test cases based on the code’s structure and functionality, covering a wider range of scenarios than traditional methods. Furthermore, AI can analyze the results of these tests, identifying the most critical vulnerabilities and prioritizing them for remediation. This allows development teams to focus their efforts on the most pressing security issues, improving efficiency and reducing overall risk. For example, an AI-powered fuzzing tool can automatically generate a vast number of inputs to test the robustness of a system, identifying vulnerabilities that might be missed by manual testing.
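The fuzzing idea can be shown with a purely random fuzzer. AI-guided fuzzers differ in how they choose the next input, for example by learning which mutations reach new code paths, but the surrounding loop looks much the same. The `parse_record` target, its input format, and the run count are all hypothetical.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Hypothetical target: the first byte is a length, then the payload."""
    length = data[0]  # bug: empty input is never checked
    payload = data[1:1 + length]
    if len(payload) != length:
        raise ValueError("truncated record")  # expected, handled rejection
    return payload


def fuzz(target, runs=1000, seed=0, expected=(ValueError,)):
    """Feed random byte strings to `target` and collect unexpected exceptions."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    crashes = []
    for _ in range(runs):
        size = rng.randrange(0, 8)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except expected:
            pass  # the target rejected bad input the way it is supposed to
        except Exception as exc:
            crashes.append((data, type(exc).__name__))
    return crashes


crashes = fuzz(parse_record)
print(len(crashes), "unexpected failures")
print(sorted({name for _, name in crashes}))
```

Every unexpected failure here traces back to the same unchecked empty input, which a real tool would deduplicate into a single finding for the developer.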

AI’s Role in Fostering Secure Coding Practices

AI can play a crucial role in educating developers and promoting secure coding practices. AI-powered tools can provide real-time feedback to developers as they write code, highlighting potential vulnerabilities and suggesting best practices. This continuous feedback loop helps developers learn secure coding techniques and reduces the likelihood of introducing vulnerabilities in the first place. For example, an AI-powered code editor could highlight lines of code that are vulnerable to buffer overflow attacks and suggest safer alternatives. Furthermore, AI can analyze codebases to identify areas where secure coding practices are consistently violated, providing insights for improving the overall security posture of the organization’s software development process.

AI Tools for Secure Software Development

The adoption of AI in secure software development is growing rapidly, leading to the emergence of several powerful tools. These tools offer a range of functionalities, from static and dynamic code analysis to automated security testing and vulnerability management. It’s important to note that the effectiveness of these tools depends on factors such as the quality of the training data and the specific needs of the organization.

- SonarQube: A platform for continuous inspection of code quality and security, built primarily on rule-based static analysis, with AI-assisted features as a more recent addition.

- Snyk: A platform for detecting and fixing vulnerabilities in application code and open-source dependencies; its code analysis engine uses machine learning.

- GitLab Security: Integrated security features within the GitLab platform, including static and dynamic application security testing (SAST and DAST). The scanners themselves are largely rule-based, with AI used mainly in assistive features.

- DeepCode: Acquired by Snyk in 2020 and now the basis of Snyk Code; used machine learning to analyze code for security flaws and suggest improvements.

Comparison of AI-Based Approaches for Securing Software

Different AI approaches, chiefly classical machine learning and deep learning, are used for securing software. Classical models, often using techniques like Support Vector Machines (SVMs) or Random Forests, can be trained to identify known vulnerability types based on features extracted from the code. Deep learning models, such as Recurrent Neural Networks (RNNs) or Convolutional Neural Networks (CNNs), can learn more complex patterns directly from code and potentially identify previously unknown vulnerabilities that traditional methods might miss. The choice of approach depends on factors such as the size and complexity of the codebase, the types of vulnerabilities being targeted, and the availability of training data. Deep learning models generally require more data and computational resources but can offer higher accuracy on complex vulnerabilities. Classical models might be more suitable for simpler tasks or when data is limited.

Outcome Summary

In a digital world facing relentless cyber threats, the integration of Artificial Intelligence in cybersecurity isn’t just an advantage—it’s a necessity. While challenges remain, the potential of AI to proactively prevent attacks, rather than simply react to them, is undeniable. By leveraging AI’s predictive capabilities and automation potential, we can significantly strengthen our defenses and build a more secure digital future. The journey is ongoing, but the destination – a safer, more resilient cyberspace – is worth the effort.