How AI Is Revolutionizing Human-Computer Interaction

Forget clunky interfaces and frustrating tech. AI’s not just improving our gadgets; it’s fundamentally changing how we *interact* with them. We’re talking seamless voice assistants anticipating your needs, personalized experiences that adapt to your every whim, and interfaces so intuitive, even your grandma could master them (almost).

This isn’t science fiction; it’s the present and future of technology. From natural language processing making computers understand us better to AI-powered personalization creating truly tailored digital experiences, the revolution is already here. We’ll dive deep into how AI is reshaping everything from healthcare apps to educational platforms, exploring the ethical considerations and predicting the future of human-computer interaction.

AI-Powered Natural Language Processing in HCI

Natural Language Processing (NLP) is rapidly transforming how we interact with computers, moving us beyond clunky interfaces and into a world of intuitive, conversational computing. Advancements in NLP are making human-computer interaction more natural and efficient, blurring the lines between human communication and machine understanding. This shift is driven by increasingly sophisticated algorithms that enable computers to not only process human language but also understand its nuances, context, and intent.

NLP’s Impact on User Experience

The integration of NLP into various domains significantly enhances user experience. Voice assistants like Siri and Alexa, for instance, rely heavily on NLP to understand spoken commands and respond appropriately. This eliminates the need for manual typing, making interaction faster and more convenient, especially for tasks requiring hands-free operation. Similarly, chatbots powered by NLP are revolutionizing customer service, providing instant support and resolving queries efficiently. In healthcare, NLP helps analyze patient records and medical literature, aiding diagnosis and treatment planning. In education, it personalizes learning experiences by adapting to individual student needs and providing customized feedback.

Comparative Analysis of NLP Techniques in HCI

Several NLP techniques contribute to improved HCI. Rule-based systems, relying on predefined grammar rules and dictionaries, are simple to implement but struggle with the complexities of natural language. Statistical methods, leveraging large datasets to identify patterns and probabilities, offer greater flexibility but can be computationally expensive and require significant training data. Deep learning models, particularly recurrent neural networks (RNNs) and transformers, have emerged as powerful tools capable of handling nuanced language and context, achieving state-of-the-art performance in tasks like sentiment analysis and machine translation. However, these models often require extensive computational resources and can be prone to biases present in their training data. The choice of technique depends on the specific application and the available resources. For example, a simple chatbot might utilize a rule-based system, while a sophisticated virtual assistant would likely employ deep learning models.
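
To make the rule-based end of this spectrum concrete, here is a minimal sketch of keyword-driven intent matching, the kind of logic a simple chatbot might use. The intent names and patterns are invented for illustration and are not taken from any particular product.

```python
import re

# Hypothetical intents and patterns, chosen only for illustration.
INTENT_PATTERNS = {
    "greeting": re.compile(r"\b(hi|hello|hey)\b", re.IGNORECASE),
    "order_status": re.compile(
        r"\b(where is|track|status of)\b.*\border\b", re.IGNORECASE
    ),
    "refund": re.compile(r"\b(refund|money back)\b", re.IGNORECASE),
}


def match_intent(utterance: str) -> str:
    """Return the first intent whose pattern matches, or 'unknown'."""
    for intent, pattern in INTENT_PATTERNS.items():
        if pattern.search(utterance):
            return intent
    return "unknown"


print(match_intent("Hello there"))                # greeting
print(match_intent("Where is my order?"))         # order_status
print(match_intent("My parcel never showed up"))  # unknown
```

The last call shows the weakness described above: the user is clearly asking about an order, but no predefined pattern covers that phrasing. Statistical and deep learning approaches aim to close exactly this gap, at the cost of training data and compute.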

NLP Revolutionizing Healthcare HCI: A Hypothetical Scenario

Imagine a future where a patient with a chronic illness interacts with a sophisticated AI-powered healthcare assistant. This assistant, leveraging advanced NLP, can understand the patient’s symptoms described in natural language – “I’ve been feeling really tired lately, and my chest feels tight” – analyze this information against the patient’s medical history and current medication, and identify potential issues. The system could then proactively suggest adjustments to the treatment plan or recommend a consultation with a specialist, all through a natural, conversational interface. This eliminates the need for complex forms and ensures timely and personalized care, potentially improving patient outcomes and reducing the burden on healthcare professionals. This hypothetical scenario showcases the potential of NLP to transform healthcare, moving towards a more proactive and patient-centric approach.

AI-Driven Personalization and Adaptive Interfaces

AI is no longer a futuristic fantasy; it’s actively reshaping how we interact with technology. One of the most significant transformations is the rise of personalized and adaptive interfaces, powered by sophisticated AI algorithms. These systems learn user preferences and behaviors, dynamically adjusting the user experience to optimize efficiency, engagement, and satisfaction. This shift marks a move away from the one-size-fits-all approach of traditional interfaces towards a more human-centered design philosophy.

Personalization Based on User Preferences and Behaviors

AI algorithms analyze vast amounts of user data – browsing history, purchase patterns, content consumption, and even input methods – to build detailed user profiles. This data fuels personalized recommendations, customized content feeds, and tailored interface layouts. For example, a news aggregator might prioritize articles aligning with a user’s previously expressed political leanings or preferred news sources. Similarly, an e-commerce site might showcase products based on past purchases and browsing behavior, anticipating future needs and desires. Machine learning models, particularly recommendation engines and collaborative filtering techniques, are crucial in achieving this level of personalization. These algorithms constantly learn and adapt, refining their predictions over time to provide increasingly accurate and relevant suggestions.

Adaptive Interfaces Responding to User Needs and Contexts

Adaptive interfaces go beyond simple personalization; they dynamically adjust their functionality and presentation based on the user’s current needs and the surrounding context. Consider a mobile app that automatically switches to a larger font size in low-light conditions or a navigation system that reroutes based on real-time traffic updates. In AI-driven systems, these adjustments aren’t hard-coded case by case; they come from algorithms that monitor user behavior, environmental factors (like location and time of day), and device capabilities. Contextual awareness is key, enabling the interface to seamlessly adapt to various situations and user states. For instance, a fitness tracker might provide different workout suggestions depending on the user’s current fitness level and available time. The underlying technology often involves a combination of machine learning, natural language processing, and sensor data integration.

Ethical Considerations of Personalized HCI Systems

While AI-driven personalization offers numerous benefits, it also raises significant ethical concerns, primarily revolving around privacy and bias. The collection and use of vast amounts of personal data to personalize the user experience raise questions about data security and user consent. Concerns about data breaches and the potential for misuse of personal information are paramount. Furthermore, the algorithms used in personalized systems can perpetuate and even amplify existing societal biases. If the training data reflects biases present in society, the AI system will likely inherit and reproduce these biases in its recommendations and interactions, potentially leading to unfair or discriminatory outcomes. For example, a loan application system trained on biased data might unfairly deny loans to certain demographic groups. Mitigating these risks requires careful consideration of data privacy regulations, algorithmic transparency, and ongoing monitoring for bias in AI systems.
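
As a rough illustration of what "ongoing monitoring for bias" can look like in practice, the sketch below compares approval rates across groups in a log of decisions. The groups, the sample data, and the function names are all hypothetical, and a rate comparison like this is only one of several possible fairness checks.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns {group: rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def disparity_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up decisions for two hypothetical groups, "A" and "B".
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = approval_rates(sample)
print(rates)                             # {'A': 0.75, 'B': 0.25}
print(round(disparity_ratio(rates), 2))  # 0.33
```

A low ratio does not prove discrimination on its own, but it flags where a closer look at the training data and the model is needed. What ratio counts as acceptable is a policy decision rather than something the code can settle.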

Comparison of Adaptive Interface Design Approaches

| Approach | Pros | Cons | Example |
|---|---|---|---|
| Rule-based systems | Simple to implement, easy to understand | Limited adaptability, inflexible to unexpected situations | A website that changes its layout based on screen size |
| Machine learning-based systems | Highly adaptable, learns from user behavior | Requires large datasets, potential for bias | A personalized news feed that adapts to user preferences |
| Hybrid approaches | Combines benefits of rule-based and machine learning | More complex to implement | A voice assistant that uses rule-based responses for simple commands and machine learning for complex requests |
| Reinforcement learning-based systems | Optimizes user experience through trial and error | Requires significant computational resources, difficult to debug | A game AI that adapts its difficulty level based on player performance |
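
The hybrid row can be sketched in a few lines. In this toy example, a fixed rule picks a baseline font size from ambient light, and a small learned offset nudges it toward whatever the user keeps choosing manually. The class name, the lux threshold, the point sizes, and the learning rate are all assumptions made for illustration.

```python
class AdaptiveFontSize:
    """Rule-based baseline plus a per-user offset learned from manual overrides."""

    def __init__(self, learning_rate: float = 0.3):
        self.learning_rate = learning_rate
        self.offset = 0.0  # learned correction, in points

    def baseline(self, ambient_lux: float) -> float:
        # Hypothetical rule: larger text in dim light.
        return 18.0 if ambient_lux < 50 else 14.0

    def suggest(self, ambient_lux: float) -> float:
        return self.baseline(ambient_lux) + self.offset

    def record_override(self, ambient_lux: float, chosen_size: float) -> None:
        # Move the offset a fraction of the way toward what the user picked.
        error = chosen_size - self.suggest(ambient_lux)
        self.offset += self.learning_rate * error


ui = AdaptiveFontSize()
print(ui.suggest(ambient_lux=20))  # 18.0, the rule alone
for _ in range(10):
    ui.record_override(ambient_lux=20, chosen_size=22.0)
print(round(ui.suggest(ambient_lux=20), 1))  # approaches 22 after repeated overrides
```

The rule keeps behavior predictable for a new user, while the learned offset handles the individual variation a fixed rule cannot anticipate. A production system would likely learn separate adjustments per context rather than one global offset.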

AI in the Development of Intuitive and Accessible Interfaces

The quest for seamless human-computer interaction (HCI) has always been a balancing act between functionality and user experience. However, the rise of artificial intelligence is tipping the scales dramatically, enabling the creation of interfaces that are not only intuitive and user-friendly but also remarkably accessible to a wider range of users, including those with disabilities. AI is no longer just a futuristic concept; it’s actively reshaping the very fabric of how we interact with technology.

AI-Powered Accessibility Solutions for Users with Disabilities

Designing interfaces that cater to diverse needs, especially those of users with disabilities, presents significant challenges. Visual impairments call for alternative ways of presenting information, while motor limitations necessitate adaptive controls. Cognitive differences demand simplified navigation and clear communication. AI offers powerful solutions. For example, AI-powered screen readers can interpret complex on-screen information and convert it into audio descriptions, providing a rich experience for visually impaired users. Similarly, AI can power predictive text and voice-to-text functionalities, enabling users with motor impairments to interact efficiently. Gesture recognition, another AI marvel, allows users with limited dexterity to control interfaces naturally. AI algorithms can also personalize the user experience, adjusting the interface’s complexity and presentation based on the user’s cognitive abilities, creating a more inclusive digital landscape.

AI-Driven Enhancement of Intuitive Interfaces for Complex Applications

Complex applications, such as medical diagnostic tools or financial modeling software, often present steep learning curves. AI can significantly flatten these curves. AI-powered intelligent assistants can guide users through complex workflows, providing contextual help and suggesting optimal actions. Intuitive visualizations, generated by AI, can simplify the presentation of complex data, making it easier for users to grasp key insights. AI can also learn user behavior patterns and adapt the interface dynamically, anticipating user needs and proactively offering relevant information or functionalities. Think of financial software that anticipates your next action based on your past behavior, automatically pulling up the relevant charts or data. This proactive assistance streamlines the user experience and significantly enhances efficiency.

AI Tools for User Interface Design and Testing

AI is revolutionizing the design and testing phases of interface development. AI-powered design tools can analyze user feedback, identify usability issues, and suggest design improvements. For example, these tools can analyze heatmaps to identify areas of an interface that users frequently ignore or struggle with. They can also generate design variations based on user preferences, helping designers quickly iterate and refine their work. AI-driven testing tools can automate the process of identifying bugs and usability issues, accelerating the development cycle and ensuring a higher-quality final product. Imagine an AI that can automatically generate and run thousands of test cases, flagging potential problems before the application even reaches beta testing. This speeds up the development process and improves the overall quality of the user interface.

Best Practices for Incorporating AI into Accessible and Intuitive Interface Design

Before integrating AI into interface design, it’s crucial to establish clear user needs and goals. Prioritizing accessibility from the outset is essential. This involves choosing AI tools and techniques that are compatible with assistive technologies and ensuring that the AI features enhance, not hinder, accessibility for all users. Thorough user testing and iterative design are also paramount. Regularly collecting user feedback and using it to refine the AI features is critical for ensuring a positive and inclusive user experience. Finally, maintaining transparency and control is crucial. Users should understand how the AI is working and have the option to override AI suggestions when necessary. This ensures that the AI acts as a supportive tool, rather than a controlling entity.

The Role of AI in Enhancing User Experience Through Predictive Capabilities

AI is no longer just reacting to user input; it’s anticipating needs and proactively shaping the interaction. This predictive power, fueled by sophisticated algorithms, is revolutionizing how we experience technology, making it smoother, more efficient, and frankly, more delightful. This predictive capability transforms passive interfaces into proactive assistants, subtly guiding users toward their goals and making technology feel almost intuitive.

Predictive AI algorithms learn user behavior patterns from past interactions. By analyzing data points like browsing history, search queries, app usage, and even typing patterns, these algorithms build detailed user profiles. This allows them to anticipate future actions and offer tailored assistance before the user even explicitly requests it.

Methods of User Behavior Prediction in HCI

Understanding how these predictions are made is key to appreciating their impact. Several methods exist, each with strengths and weaknesses. These approaches often work in tandem to create a comprehensive predictive model.

One common method involves using collaborative filtering. This technique analyzes the behavior of similar users to predict the preferences and actions of a target user. For example, if users with similar music tastes also enjoy a specific podcast, the system might recommend that podcast to the target user. Another technique, content-based filtering, focuses on the characteristics of items themselves. If a user enjoys a certain type of movie, the system will recommend other movies with similar genres, actors, or directors. Finally, sequential pattern mining analyzes the order of user actions over time to predict future actions. This is particularly useful in applications like email clients, where predicting the next recipient based on previous emails sent is highly beneficial.
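
The collaborative filtering idea can be sketched with nothing more than a ratings dictionary and cosine similarity. The users, items, and ratings below are invented, and the scoring scheme (a similarity-weighted average) is one simple choice among many, not a description of how any specific service works.

```python
from math import sqrt

# Hypothetical ratings (1-5); users and items are invented for illustration.
RATINGS = {
    "ana":   {"jazz_mix": 5, "rock_mix": 1, "tech_podcast": 4},
    "ben":   {"jazz_mix": 4, "rock_mix": 2, "tech_podcast": 5},
    "chloe": {"jazz_mix": 1, "rock_mix": 5, "true_crime_podcast": 4},
    "dev":   {"jazz_mix": 5, "rock_mix": 1},
}


def cosine(a, b):
    """Cosine similarity computed over the items both users rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = sqrt(sum(a[i] ** 2 for i in shared))
    norm_b = sqrt(sum(b[i] ** 2 for i in shared))
    return dot / (norm_a * norm_b)


def recommend(target, ratings):
    """Rank items the target has not rated by similarity-weighted average rating."""
    scores, weights = {}, {}
    for other, items in ratings.items():
        if other == target:
            continue
        sim = cosine(ratings[target], items)
        for item, rating in items.items():
            if item not in ratings[target]:
                scores[item] = scores.get(item, 0.0) + sim * rating
                weights[item] = weights.get(item, 0.0) + sim
    ranked = {i: scores[i] / weights[i] for i in scores if weights[i] > 0}
    return sorted(ranked, key=ranked.get, reverse=True)


print(recommend("dev", RATINGS))  # ['tech_podcast', 'true_crime_podcast']
```

Here "dev" shares music tastes with "ana" and "ben", who both rate the tech podcast highly, so it ranks first. Content-based filtering would instead compare item attributes such as genre, and would not need other users' ratings at all.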

Examples of Predictive Capabilities Improving User Experience

The practical applications of predictive AI are numerous and impactful. Consider anticipatory text input, a feature now common on smartphones and other devices. As you type, the AI predicts the next word or phrase you’re likely to use, saving you time and effort. Personalized recommendations on streaming services, e-commerce sites, and even news aggregators are another prime example. These systems learn your preferences and proactively suggest content you’re likely to enjoy. Another less obvious but equally impactful example is predictive maintenance in software applications. AI can detect patterns in user behavior indicating potential problems, allowing developers to address issues before they impact the user experience. Imagine a navigation app predicting traffic congestion and proactively suggesting alternative routes, ensuring a smooth and timely journey.
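
Anticipatory text input is easy to prototype. The sketch below counts which word tends to follow which in a user's past messages (a bigram model) and suggests the most frequent followers. Real keyboards use far more sophisticated models; the message history and function names here are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for each word, which words follow it in the training sentences."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def suggest_next(following, word, k=2):
    """Return up to k of the most frequent followers of `word`."""
    counts = following.get(word.lower(), Counter())
    return [w for w, _ in counts.most_common(k)]


# Hypothetical message history for one user.
history = [
    "see you tomorrow",
    "see you tomorrow morning",
    "call you tomorrow",
    "see you soon",
    "talk to you soon",
    "thank you so much",
]
model = train_bigrams(history)
print(suggest_next(model, "you"))  # ['tomorrow', 'soon']
```

Because the counts come from the user's own history, the suggestions drift toward that person's habits over time, which is the same learn-then-anticipate loop behind the recommendation examples above.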

Visual Representation of Predictive AI Enhancing HCI

Imagine a user navigating a complex e-commerce website searching for a specific type of running shoe. Without predictive AI, the user would need to manually filter through countless options based on size, brand, color, and features. With predictive AI, the system observes the user’s initial searches and selections, understanding that they are looking for a specific type of shoe (e.g., trail running shoes, size 10, neutral pronation). As the user navigates, the interface subtly highlights relevant options, intelligently filtering out irrelevant choices. The system might even proactively suggest related products, such as running socks or a hydration pack, based on the user’s selection and past purchasing history. This visual representation shows how predictive AI anticipates user needs and guides them through the website, eliminating unnecessary steps and leading to a quicker, more satisfying purchase.

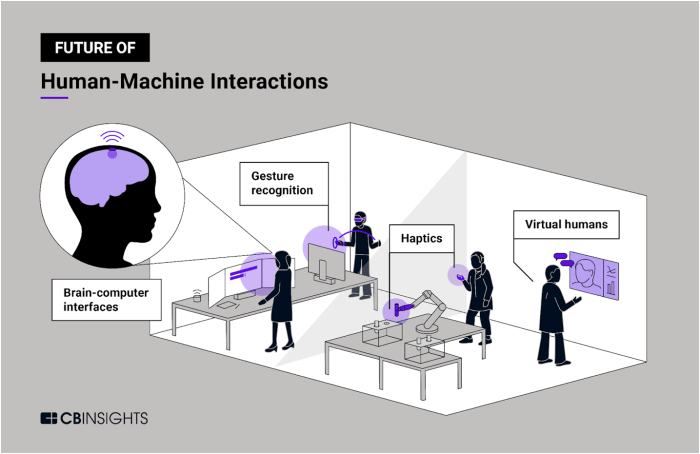

The Impact of AI on the Future of Human-Computer Interaction

AI is poised to fundamentally reshape how we interact with computers, moving beyond the keyboard and mouse to a future where technology anticipates our needs and responds intuitively. This shift promises a more seamless, personalized, and efficient digital experience, but also presents significant societal implications that require careful consideration. The integration of AI into HCI is not merely an incremental improvement; it’s a paradigm shift that will redefine the very nature of human-computer interaction.

Societal Impact of AI-Driven HCI Advancements

The societal impact of AI in HCI will be multifaceted. Increased accessibility for individuals with disabilities is a key benefit. Imagine a world where voice commands and personalized interfaces allow anyone, regardless of physical limitations, to effortlessly navigate digital environments. However, concerns around job displacement due to automation driven by AI-powered systems need to be addressed proactively. Furthermore, ethical considerations surrounding data privacy and algorithmic bias must be carefully navigated to ensure fairness and prevent discrimination. The potential for increased surveillance and manipulation through sophisticated AI interfaces is another critical area demanding careful scrutiny and robust regulatory frameworks. Successfully integrating AI into HCI requires a holistic approach that balances technological advancements with societal well-being.

Emerging Trends in AI and HCI: Augmented and Virtual Reality

Augmented reality (AR) and virtual reality (VR) are experiencing a surge in popularity, and AI is playing a pivotal role in their evolution. AI-powered AR applications can overlay digital information onto the real world, providing context-aware assistance in various fields, from healthcare to manufacturing. Imagine surgeons using AR glasses to visualize a patient’s internal anatomy in real-time during surgery, guided by AI-powered diagnostic tools. Similarly, AI enhances VR by creating more immersive and interactive experiences. AI can generate realistic virtual environments, personalize user interactions, and adapt to individual preferences, leading to applications in training, education, and entertainment that are significantly more effective and engaging. The convergence of AI and these immersive technologies promises a transformative impact across multiple sectors.

Challenges and Opportunities in Integrating AI into HCI

The integration of AI into HCI presents both exciting opportunities and significant challenges. One major hurdle is ensuring the reliability and robustness of AI systems. AI models can be susceptible to errors and biases, leading to unpredictable or even harmful outcomes in HCI applications. Maintaining user privacy and security in AI-driven systems is paramount. Data breaches and misuse of personal information are serious concerns that need to be addressed through robust security measures and transparent data handling practices. On the other hand, AI offers immense potential to create more intuitive, personalized, and accessible interfaces. The ability of AI to learn user preferences and adapt to individual needs can revolutionize user experience, making technology more accessible and efficient for everyone. Balancing the potential benefits with the inherent risks is crucial for responsible AI development and deployment in HCI.

The Future Landscape of HCI: An AI-Driven Vision

The future of HCI will likely be characterized by a seamless blend of physical and digital worlds, driven by advancements in AI. We can envision a future where our devices anticipate our needs, proactively offering assistance and information before we even ask. Interfaces will become more intuitive and personalized, adapting to individual preferences and learning patterns over time. The line between the physical and digital will blur, with AR and VR becoming integral parts of our daily lives. This future will not be without its challenges, requiring careful consideration of ethical, societal, and security implications. However, the potential for AI to create a more inclusive, efficient, and personalized digital experience is undeniable. The key lies in responsible innovation and a commitment to building AI systems that prioritize human well-being and societal progress.

Conclusion

The integration of AI into human-computer interaction is not just an upgrade; it’s a paradigm shift. We’ve explored how AI is making technology more intuitive, accessible, and personalized, anticipating our needs and adapting to our individual preferences. While challenges remain – particularly around ethical considerations and potential biases – the future of HCI, powered by AI, promises a world where technology seamlessly integrates into our lives, making them simpler, more efficient, and undeniably more human.