How AI is Enhancing the Accuracy of Predictive Analytics Models

Forget crystal balls; the future of accurate predictions is here, powered by artificial intelligence. We’re diving deep into how AI is changing predictive analytics across industries and making forecasts more precise. From tuning algorithms to tackling data bias, we’ll look at what makes this combination work.

Predictive analytics, while powerful, has always wrestled with limitations. Inaccurate data, flawed models, and unforeseen variables often led to less-than-stellar predictions. But AI is changing the game. Machine learning, deep learning, and neural networks are not just buzzwords; they’re the tools that are sharpening the accuracy of predictive models across various sectors – from finance and healthcare to marketing and supply chain management. This isn’t just about better guesses; it’s about informed decisions based on data-driven insights that were previously unattainable.

Introduction

Predictive analytics is all about crunching data to foresee future trends and outcomes. Think of it as a crystal ball, but instead of mystical powers, it uses statistical algorithms and machine learning to make educated guesses. While powerful, traditional predictive analytics often struggles with complex datasets and nuanced patterns. The sheer volume and variety of data in today’s world often overwhelm these traditional methods, leading to inaccurate or incomplete predictions. This is where artificial intelligence (AI) steps in, supercharging the accuracy and effectiveness of these models.

AI enhances traditional predictive models by handling massive, complex datasets and identifying intricate relationships that are hard for humans or simpler algorithms to spot. It does this through techniques like deep learning, in which multi-layer neural networks learn useful representations directly from raw data rather than relying on hand-written rules, leading to more accurate and insightful predictions. AI models can also be retrained or updated as new data arrives, so their predictions adapt over time, something static traditional models struggle with.

AI’s Impact Across Industries

AI-enhanced predictive analytics is reshaping various sectors. In finance, AI algorithms are used to detect fraudulent transactions more accurately than fixed rule sets, saving banks and customers substantial sums. For example, PayPal uses AI to analyze millions of transactions daily, flagging suspicious activity in real time, preventing losses and enhancing security.

In healthcare, AI is assisting in early disease diagnosis by analyzing medical images and patient data, leading to improved patient outcomes and more efficient resource allocation. Imagine an AI system that can analyze X-rays and identify cancerous tumors with a higher degree of accuracy than a human radiologist; that’s the power of AI-enhanced predictive analytics in action.

Similarly, in marketing, AI helps personalize customer experiences by predicting their preferences and behavior, leading to more targeted advertising campaigns and improved customer engagement. Netflix’s recommendation engine is a prime example of this, using AI to suggest shows and movies tailored to individual viewing habits.

The manufacturing sector also benefits, using AI for predictive maintenance to minimize downtime and optimize production processes. By analyzing sensor data from machines, AI can predict potential equipment failures before they occur, allowing for proactive maintenance and preventing costly production disruptions.

AI Algorithms for Improved Accuracy

Predictive analytics, once the realm of statistical guesswork, is now experiencing a renaissance thanks to the power of artificial intelligence. AI algorithms are dramatically enhancing the accuracy of these models, allowing businesses to make better decisions, anticipate trends, and optimize operations with unprecedented precision. This increased accuracy isn’t just about tweaking existing methods; it’s a fundamental shift in how we approach prediction, leveraging the ability of AI to learn complex patterns and relationships in data that would be impossible for humans to discern.

AI Algorithm Comparison: Deep Learning, Machine Learning, and Neural Networks

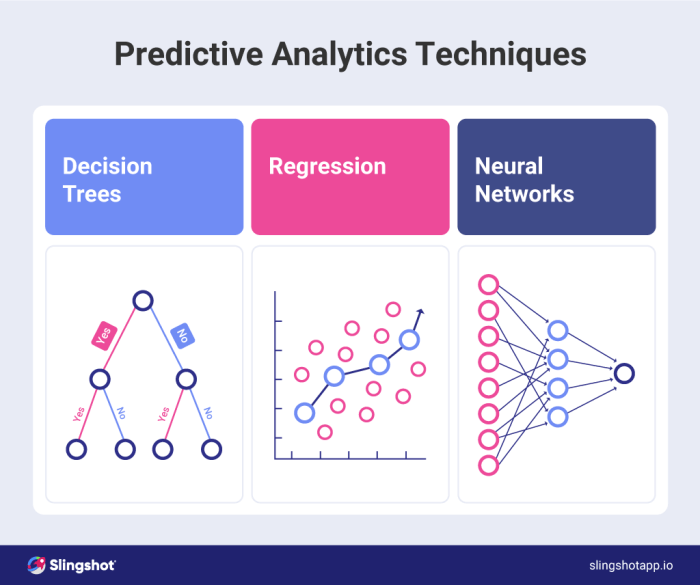

The world of AI algorithms used in predictive analytics can seem daunting, but understanding how machine learning, neural networks, and deep learning relate to one another is crucial to selecting the right tool for the job. The terms are often used interchangeably, but they are nested rather than parallel: machine learning is the broad field, neural networks are one family of machine learning models, and deep learning is the use of neural networks with many layers.

Machine learning encompasses a broad range of algorithms, including simpler models like linear regression and decision trees, which focus on identifying relatively straightforward patterns. Neural networks are computational models loosely inspired by the structure and function of the human brain; they consist of interconnected nodes (neurons) that process and transmit information. Deep learning, a subset of machine learning, uses artificial neural networks with multiple layers (hence “deep”) to analyze data, which allows it to identify intricate, non-linear relationships that simpler algorithms miss.

Advantages of Specific Algorithms

Deep learning excels in tasks involving unstructured data like images, audio, and text. For example, in medical imaging, deep learning algorithms can identify cancerous cells with remarkable accuracy, surpassing human experts in some cases. Its ability to learn hierarchical representations of data enables it to uncover subtle patterns indicative of disease. This leads to significantly improved diagnostic accuracy and potentially life-saving interventions. However, deep learning models require vast amounts of data for training and can be computationally expensive.

Classical machine learning algorithms, due to their relative simplicity, are often faster to train and require less data. They are well-suited for tasks with well-defined features and clear relationships. For instance, predicting customer churn based on factors like purchase history and customer service interactions can be effectively handled using algorithms like logistic regression or support vector machines. These models provide a good balance between accuracy and efficiency.
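To make the churn example concrete, here is a minimal sketch of logistic regression trained with plain gradient descent, using only the Python standard library. The two features (support tickets and purchases per quarter) and the six toy customers are hypothetical, chosen purely for illustration; a real project would typically reach for an established library rather than hand-rolled training code.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(rows, labels, lr=0.1, epochs=2000):
    """Fit weights and bias with batch gradient descent on the log loss."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # derivative of the log loss w.r.t. the linear score
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

def predict_proba(weights, bias, x):
    """Estimated probability that the customer churns."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Hypothetical toy data: [support_tickets_last_quarter, purchases_last_quarter]
customers = [[5, 0], [4, 1], [6, 1], [0, 6], [1, 5], [0, 4]]
churned = [1, 1, 1, 0, 0, 0]

weights, bias = train_logistic_regression(customers, churned)
p_unhappy = predict_proba(weights, bias, [5, 0])  # many tickets, no purchases
p_loyal = predict_proba(weights, bias, [0, 5])    # no tickets, many purchases
```

Because the learned weights attach directly to named features, a model like this is easy to inspect: a positive weight on support tickets and a negative weight on purchases is exactly the story you would expect the data to tell.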

Shallow neural networks, with only one or two hidden layers, offer flexibility. Their architecture can be tailored to specific tasks, making them adaptable to various predictive modeling challenges. Simple networks can be efficient for less complex problems, while more intricate architectures can handle high-dimensional data and complex relationships, bridging the gap between classical machine learning and deep learning.

Strengths and Weaknesses of Prominent AI Algorithms

| Algorithm | Strengths | Weaknesses | Best Suited For |
|---|---|---|---|
| Deep Learning | High accuracy with complex, unstructured data; ability to learn intricate patterns | Requires large datasets; computationally expensive; can be a “black box” (difficult to interpret) | Image recognition, natural language processing, medical diagnosis |
| Machine Learning (e.g., Logistic Regression) | Relatively fast training; requires less data; easier to interpret | Limited ability to handle complex, non-linear relationships; accuracy may be lower for complex problems | Customer churn prediction, fraud detection, risk assessment |
| Neural Networks (Simple Architectures) | Versatile; adaptable to various tasks; relatively efficient for simpler problems | Accuracy may be limited compared to deep learning for complex tasks; requires careful design and tuning | Basic pattern recognition, forecasting, anomaly detection |

Data Preprocessing and Feature Engineering with AI

Predictive analytics models are only as good as the data they’re trained on. Garbage in, garbage out, as the saying goes. This means that achieving truly accurate predictions hinges on meticulous data preprocessing and clever feature engineering. AI is revolutionizing this crucial stage, allowing for more efficient and effective handling of messy, real-world datasets.

Data quality is paramount. Inaccurate, incomplete, or inconsistent data will lead to flawed models and unreliable predictions. Imagine trying to predict customer churn with incomplete purchase history or faulty customer segmentation data – the results would be disastrous. AI techniques are helping us overcome these challenges, allowing us to build robust and accurate models.

AI-Powered Techniques for Data Cleaning

AI significantly streamlines the process of cleaning and preparing data for predictive modeling. Traditional methods often involve tedious manual checks and corrections. AI, however, can automate many of these tasks, saving time and reducing the risk of human error. For example, AI algorithms can identify and handle missing values using imputation techniques, such as k-Nearest Neighbors (k-NN) or multiple imputation, which offer more sophisticated approaches than simple mean/median replacement. Outliers, those data points that deviate significantly from the norm, can be detected and handled using techniques like Isolation Forest or One-Class SVM. Noisy data, characterized by random errors, can be smoothed with filtering techniques. Even a simple moving average filter can reduce the impact of noise in time-series data. Consider a dataset tracking website traffic; smoothing out daily fluctuations reveals the underlying trends.
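Two of the techniques mentioned above, k-NN imputation and moving-average smoothing, are simple enough to sketch in a few lines of standard-library Python. This is a simplified illustration under stated assumptions: the distance is plain Euclidean over whatever columns the incomplete row has, features are assumed to be on comparable scales, and the sample rows and traffic figures are made up.

```python
import math
from statistics import mean

def knn_impute(rows, k=2):
    """Fill None entries with the mean of the k nearest complete rows.
    Distance is Euclidean over the columns the incomplete row does have."""
    complete = [r for r in rows if None not in r]
    filled = []
    for row in rows:
        if None not in row:
            filled.append(list(row))
            continue
        known = [i for i, v in enumerate(row) if v is not None]
        neighbours = sorted(
            complete,
            key=lambda c: math.sqrt(sum((c[i] - row[i]) ** 2 for i in known)),
        )[:k]
        filled.append([
            v if v is not None else mean(nb[i] for nb in neighbours)
            for i, v in enumerate(row)
        ])
    return filled

def moving_average(series, window=3):
    """Trailing moving average; the first window-1 points are dropped."""
    return [
        mean(series[i - window + 1 : i + 1])
        for i in range(window - 1, len(series))
    ]

# Hypothetical data: the last row is missing its second value.
rows = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [2.5, None]]
filled = knn_impute(rows, k=2)

# Hypothetical daily website visits with one noisy spike.
traffic = [100, 104, 180, 102, 98, 101]
smoothed = moving_average(traffic, window=3)
```

The missing value is filled from the two rows whose known column is closest, rather than from the global mean, which is the whole point of k-NN imputation. Outlier detectors such as Isolation Forest are considerably more involved and are best taken from an existing library.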

AI-Assisted Feature Engineering

Feature engineering is the process of transforming raw data into features that are more informative and relevant to the predictive model. This is a critical step because a well-engineered feature set can significantly improve model accuracy and efficiency. AI plays a crucial role in automating and enhancing this process.

A step-by-step guide on how AI assists in feature engineering:

1. Automated Feature Generation: AI algorithms, such as genetic programming or automated feature extraction techniques, can automatically generate new features from existing ones. For example, instead of using raw sales data, AI can create features like “average daily sales” or “sales growth rate,” which might be more predictive of future sales.

2. Feature Selection: AI algorithms can help select the most relevant features from a large dataset. Techniques like Recursive Feature Elimination (RFE) or feature importance scores from tree-based models can identify the features that contribute most to model performance. This helps to reduce model complexity and improve its interpretability. Consider a model predicting loan defaults; AI might identify credit score and debt-to-income ratio as the most crucial features.

3. Feature Transformation: AI can transform existing features to improve their suitability for the model. For instance, techniques like standardization or normalization can scale features to a common range, preventing features with larger values from dominating the model. Additionally, AI can detect and handle skewed data distributions using transformations like logarithmic or Box-Cox transformations. Imagine a dataset with highly skewed income data; AI can apply a log transformation to make it more normally distributed, improving model performance.

4. Feature Interaction: AI can identify and create features that represent interactions between existing features. For example, if we are predicting customer satisfaction, AI might identify an interaction effect between “product quality” and “customer service,” creating a new feature representing the combined effect of these two factors. This can capture complex relationships that might be missed by considering features individually.
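The four steps above boil down to small, composable transformations. The sketch below shows one hand-written example of each idea (a generated growth-rate feature, standardization, a log transform for skewed values, and an interaction term) in standard-library Python. The function names and sample numbers are hypothetical; automated feature engineering tools search over many such transformations rather than applying a fixed handful.

```python
import math
from statistics import mean, pstdev

def growth_rates(sales):
    """Period-over-period growth rate; one value fewer than the input."""
    return [(cur - prev) / prev for prev, cur in zip(sales, sales[1:])]

def standardize(values):
    """Rescale to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def log_transform(values):
    """log(1 + x) compresses a long right tail; requires x >= 0."""
    return [math.log1p(v) for v in values]

def interaction(a, b):
    """Element-wise product of two feature columns."""
    return [x * y for x, y in zip(a, b)]

# Hypothetical raw columns.
monthly_sales = [100.0, 110.0, 121.0]
incomes = [20_000, 35_000, 50_000, 1_000_000]   # heavily right-skewed
product_quality = [4, 2, 5]
customer_service = [5, 1, 3]

sales_growth = growth_rates(monthly_sales)
income_log = log_transform(incomes)
income_scaled = standardize(income_log)
quality_x_service = interaction(product_quality, customer_service)
```

Applying the log transform before standardizing matters here: standardizing the raw incomes would leave the single very large value dominating the scaled column.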

Model Training and Evaluation with AI

AI significantly boosts the efficiency and accuracy of predictive model training and evaluation. By automating processes and optimizing parameters, AI allows for the creation of more robust and reliable predictive models. This section delves into the ways AI enhances these crucial stages of model development.

AI streamlines the traditionally complex and time-consuming tasks associated with model training and evaluation, leading to faster development cycles and improved model performance. This is achieved through sophisticated algorithms and automated processes that handle large datasets and complex model architectures effectively.

AI-Driven Optimization of Model Training Parameters

Preventing overfitting is a critical challenge in model training. Overfitting occurs when a model fits the noise and quirks of its training data so closely that it performs poorly on unseen data. AI techniques, such as hyperparameter tuning using Bayesian Optimization or evolutionary algorithms, systematically search the parameter space for settings that score best on held-out validation data, which limits overfitting while maximizing predictive accuracy. For instance, Bayesian Optimization uses a probabilistic model to guide the search, efficiently exploring promising regions of the parameter space and reducing the number of model evaluations needed. This contrasts with traditional grid search, which evaluates every combination on a fixed grid and becomes computationally expensive as the number of parameters grows. Imagine trying to find the perfect recipe for a cake: AI acts like a sophisticated chef, systematically adjusting ingredients (parameters) until the right balance is achieved (the best-performing model).
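The core loop behind any hyperparameter tuner is "fit on training data, score on validation data, keep the best setting". The sketch below shows that loop as a plain grid search, the baseline that Bayesian Optimization improves on, not Bayesian Optimization itself. The model is a deliberately tiny one-feature ridge regression with a closed-form fit, and the data points and grid values are hypothetical.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form slope for y ~ w * x with L2 penalty lam (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def mse(w, xs, ys):
    """Mean squared error of the slope w on the given points."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def grid_search(train, valid, grid):
    """Fit once per candidate lam; score each fit on the validation split only."""
    scores = {}
    for lam in grid:
        w = fit_ridge_1d(*train, lam)
        scores[lam] = mse(w, *valid)
    best = min(scores, key=scores.get)
    return best, scores

# Hypothetical data: noisy training points, cleaner validation points.
train = ([1.0, 2.0, 3.0], [2.5, 3.5, 7.0])
valid = ([4.0, 5.0], [8.0, 10.0])

best_lam, scores = grid_search(train, valid, grid=[0.0, 0.1, 1.0, 10.0])
```

A Bayesian optimizer would replace the `for lam in grid` loop with a surrogate model that proposes the next `lam` to try based on the scores seen so far. The fit-then-validate step inside the loop stays the same.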

AI’s Role in Evaluating Model Performance Metrics

AI plays a crucial role in evaluating the performance of predictive models. Traditional methods often rely on manual analysis of various metrics. AI, however, can automate this process, providing comprehensive and insightful evaluations. This includes automatically calculating and interpreting metrics like precision, recall, F1-score, and AUC (Area Under the ROC Curve). For example, an AI system could analyze the performance of a fraud detection model, automatically calculating the precision (the proportion of transactions flagged as fraudulent that really are fraudulent) and recall (the proportion of actual fraudulent transactions that the model catches). A high F1-score, the harmonic mean of precision and recall, would indicate a model that does well on both. The AUC, which measures how well the model ranks fraudulent transactions above legitimate ones across all decision thresholds, rounds out the evaluation.
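These four metrics are easy to compute by hand, which helps when checking what an automated evaluation tool reports. The following is a minimal standard-library sketch; the labels and scores are a hypothetical fraud-detection validation set (1 = fraud), and the 0.5 decision threshold is an assumption for illustration.

```python
def precision_recall_f1(y_true, y_pred):
    """Precision, recall and F1 for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

def roc_auc(y_true, scores):
    """Probability that a random positive outscores a random negative
    (ties count as half a win). Equivalent to the area under the ROC curve."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos for n in neg
    )
    return wins / (len(pos) * len(neg))

# Hypothetical validation data: true labels and model fraud scores.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.3, 0.7, 0.2, 0.1, 0.1, 0.05]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
auc = roc_auc(y_true, scores)
```

Note that precision, recall, and F1 depend on the chosen threshold, while AUC is computed from the raw scores and does not. That is why the two kinds of metric are usually reported together.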

AI-Powered Automated Model Selection

The selection of the best-performing model from a range of candidates is a crucial step. AI automates this process by comparing models based on their performance metrics. This allows for efficient selection of the model that best suits the specific prediction task. For instance, an AI system might compare the performance of several different classification models (e.g., logistic regression, support vector machines, random forests) on a given dataset, automatically selecting the model with the highest AUC or F1-score. This not only saves time but also reduces the risk of human bias in the model selection process. Provided the comparison is made on data the models did not see during training, the chosen model is the one most likely to hold up in production, leading to more reliable predictions.
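Automated model selection is, at its simplest, a loop that scores every candidate on the same held-out data and keeps the winner. In the sketch below the "models" are hypothetical hand-written rules standing in for trained classifiers, and the validation rows are invented; the selection logic is the part being illustrated.

```python
def f1_score(y_true, y_pred):
    """F1 for binary labels, written as 2TP / (2TP + FP + FN)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0

def select_model(candidates, X_valid, y_valid):
    """Score every candidate on held-out data; return the best name and all scores."""
    scores = {
        name: f1_score(y_valid, [model(x) for x in X_valid])
        for name, model in candidates.items()
    }
    return max(scores, key=scores.get), scores

# Hypothetical stand-ins for trained models: each maps a feature row to 0 or 1.
candidates = {
    "amount_rule": lambda x: int(x["amount"] > 500),
    "foreign_rule": lambda x: int(x["foreign"]),
    "combined_rule": lambda x: int(x["amount"] > 500 and x["foreign"]),
}

# Hypothetical held-out transactions (1 = fraud).
X_valid = [
    {"amount": 900, "foreign": True},
    {"amount": 700, "foreign": False},
    {"amount": 50, "foreign": True},
    {"amount": 1200, "foreign": True},
    {"amount": 30, "foreign": False},
]
y_valid = [1, 0, 0, 1, 0]

best_name, scores = select_model(candidates, X_valid, y_valid)
```

Returning the full score table alongside the winner is a deliberate choice: when two candidates score almost identically, a human may still prefer the simpler or more interpretable one.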

Handling Bias and Ensuring Fairness in AI-Powered Predictive Models

AI’s power to enhance predictive analytics is undeniable, but this power comes with a critical responsibility: ensuring fairness and mitigating bias. Unfair or biased AI models can perpetuate and even amplify existing societal inequalities, leading to discriminatory outcomes. Addressing this requires a proactive and multifaceted approach throughout the entire model lifecycle.

AI models learn from the data they are trained on, and if that data reflects existing societal biases, the model will likely inherit and even exacerbate those biases. This isn’t a simple matter of bad programming; it’s a complex issue rooted in the data itself and the systems that create it. Understanding and addressing these biases is crucial for building trustworthy and equitable AI systems.

Sources of Bias in Datasets and Mitigation Strategies

Bias can creep into datasets in various insidious ways. For example, historical loan application data might show a higher rate of defaults among a particular demographic group, not because of inherent risk, but because of historical discriminatory lending practices. Similarly, facial recognition systems trained primarily on images of one race may perform poorly on others, leading to inaccurate and potentially harmful outcomes. AI can help mitigate these biases through techniques like data augmentation, which involves synthetically generating data points to balance underrepresented groups, and algorithmic fairness techniques that adjust model outputs to minimize disparities across different groups. For instance, a model predicting recidivism could be adjusted to reduce the disparity in predictions between different racial groups, ensuring that similar risk profiles receive similar predictions regardless of race.
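As a rough illustration of rebalancing an underrepresented group, the sketch below uses random oversampling: it duplicates existing records from smaller groups until all groups are the same size. This is a simpler stand-in for the synthetic data augmentation described above, since it repeats records rather than generating new ones. The record layout and the `group` key are hypothetical.

```python
import random
from collections import defaultdict

def oversample_to_balance(records, group_key, seed=0):
    """Duplicate randomly chosen records from smaller groups until every
    group matches the size of the largest one."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    by_group = defaultdict(list)
    for rec in records:
        by_group[rec[group_key]].append(rec)
    target = max(len(recs) for recs in by_group.values())
    balanced = []
    for recs in by_group.values():
        balanced.extend(recs)
        balanced.extend(rng.choice(recs) for _ in range(target - len(recs)))
    return balanced

# Hypothetical loan records: group B is heavily underrepresented.
applications = (
    [{"group": "A", "defaulted": i % 4 == 0} for i in range(8)]
    + [{"group": "B", "defaulted": i % 2 == 0} for i in range(2)]
)

balanced = oversample_to_balance(applications, "group")
```

Balancing group sizes does not remove bias that is baked into the labels themselves, such as defaults caused by historically discriminatory lending. It only stops the model from largely ignoring the smaller group.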

Techniques for Ensuring Fairness and Ethical Considerations

Ensuring fairness in AI-powered predictive models requires a multi-pronged approach. This includes careful selection and preprocessing of data to identify and mitigate bias, employing fairness-aware algorithms that explicitly consider and control for protected attributes (like race or gender), and rigorous testing and evaluation to assess the model’s fairness across different subgroups. Ethical considerations are paramount, demanding transparency in model development and deployment, clear explanations of how decisions are made, and mechanisms for redress in cases of unfair or discriminatory outcomes. For example, a credit scoring model should not only be accurate but also demonstrably fair, avoiding systematic discrimination against any specific demographic group. Regular audits and ongoing monitoring are crucial to ensure that fairness is maintained over time, as data and societal dynamics change.
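Testing a model’s fairness across subgroups usually starts with a very simple question: does each group receive positive outcomes at a similar rate? The sketch below computes two common summaries of that, the difference and the ratio between the lowest and highest group approval rates (often called demographic parity difference and disparate impact ratio). The predictions and group labels are hypothetical, and these are only two of many fairness metrics.

```python
def fairness_report(preds, groups):
    """Per-group positive-prediction rate, the largest gap between groups,
    and the ratio of the lowest rate to the highest."""
    by_group = {}
    for p, g in zip(preds, groups):
        by_group.setdefault(g, []).append(p)
    rates = {g: sum(ps) / len(ps) for g, ps in by_group.items()}
    lo, hi = min(rates.values()), max(rates.values())
    return {
        "rates": rates,
        "parity_difference": hi - lo,
        "impact_ratio": lo / hi if hi else 1.0,
    }

# Hypothetical credit decisions (1 = approved) for two demographic groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

report = fairness_report(preds, groups)
```

A report like this is cheap enough to run on every retraining, which fits the point above about regular audits: a model that was balanced at launch can drift as the underlying data changes.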

Societal Implications of Biased Predictive Models and Strategies for Promoting Responsible AI

Biased predictive models can have profound societal consequences, reinforcing existing inequalities and leading to unfair or discriminatory outcomes in areas such as loan applications, criminal justice, and healthcare. For instance, a biased hiring algorithm might systematically exclude qualified candidates from certain demographic groups, perpetuating workforce inequality. Promoting responsible AI requires a collaborative effort involving researchers, developers, policymakers, and the public. This includes developing and implementing ethical guidelines and regulations for AI development and deployment, fostering public awareness and understanding of the risks and benefits of AI, and promoting diversity and inclusion in the AI field to ensure that a wider range of perspectives are considered in the design and development of these systems. Investing in research on fairness-aware AI algorithms and developing robust methods for auditing and evaluating AI systems for bias are also critical steps towards a more equitable and just future powered by AI.

Case Studies

Real-world applications showcase the transformative power of AI in boosting the accuracy of predictive analytics. These examples highlight how different industries leverage AI to improve forecasting, optimize operations, and ultimately, drive better business outcomes. Let’s delve into some compelling case studies that illustrate the tangible benefits.

Predictive Maintenance in Manufacturing

AI-powered predictive maintenance is revolutionizing manufacturing by reducing downtime and optimizing maintenance schedules. A leading automotive manufacturer implemented a system using machine learning algorithms to analyze sensor data from their assembly line robots. By analyzing vibration patterns, temperature fluctuations, and power consumption, the AI accurately predicted potential equipment failures with a 90% accuracy rate, a significant improvement over their previous rule-based system which only achieved 65% accuracy. This resulted in a 25% reduction in unplanned downtime and a 15% decrease in maintenance costs.

- Challenge: High costs associated with unplanned downtime and reactive maintenance.

- Success: Significant reduction in downtime and maintenance costs through accurate failure prediction.

Fraud Detection in Financial Services

The financial services industry is constantly battling fraud. A major credit card company deployed an AI-powered fraud detection system that analyzed transaction data, including location, time, purchase amount, and customer spending patterns. The AI model identified fraudulent transactions with an accuracy rate of 98%, a 15% improvement over their previous rule-based system. This led to a substantial reduction in fraudulent losses and improved customer trust.

- Challenge: High volume of fraudulent transactions and the need for real-time detection.

- Success: Improved fraud detection accuracy, leading to significant cost savings and enhanced customer trust.

Customer Churn Prediction in Telecommunications

Telecommunication companies are constantly seeking ways to retain customers. One major telecom provider utilized AI to predict customer churn by analyzing customer usage patterns, demographics, and customer service interactions. The AI model predicted churn with an accuracy of 85%, a 10% improvement over their previous model. This allowed the company to proactively target at-risk customers with retention offers, resulting in a 5% reduction in churn rate.

- Challenge: High customer churn rate and the need for effective retention strategies.

- Success: Improved churn prediction accuracy, enabling proactive retention efforts and reduced churn rate.

Personalized Medicine in Healthcare

AI is transforming healthcare by enabling personalized medicine. A pharmaceutical company used AI to analyze patient data, including genetic information, medical history, and lifestyle factors, to predict the effectiveness of different treatments for a specific disease. The AI model improved the accuracy of treatment selection by 12%, leading to better patient outcomes and reduced healthcare costs. This demonstrates the power of AI in tailoring treatments to individual patients.

- Challenge: Need for personalized treatment plans to improve patient outcomes and reduce costs.

- Success: Improved treatment selection accuracy, resulting in better patient outcomes and cost savings.

Future Trends and Challenges

The future of AI-powered predictive analytics is a dynamic landscape, brimming with exciting possibilities but also fraught with challenges. As AI continues to evolve, so too will its impact on the accuracy and reliability of predictive models. Understanding these trends and proactively addressing the challenges is crucial for harnessing the full potential of this powerful technology.

The convergence of several technological advancements is poised to significantly impact the accuracy of predictive analytics. These advancements will not only improve the models themselves but also the entire data lifecycle, from collection to interpretation.

Emerging Trends in AI and Their Impact

Several emerging trends are shaping the future of AI in predictive analytics. These trends promise to boost accuracy, efficiency, and the overall effectiveness of these models. The increased availability of diverse and high-quality data, coupled with the development of more sophisticated algorithms, is driving this evolution.

- Federated Learning: This approach allows models to be trained on decentralized data sources without directly sharing the sensitive data itself. This significantly enhances privacy while simultaneously increasing the volume and diversity of training data, leading to more accurate and robust models. For example, a healthcare provider could use federated learning to train a model predicting patient outcomes across multiple hospitals without revealing individual patient data.

- Explainable AI (XAI): As AI models become increasingly complex, understanding their decision-making processes becomes paramount. XAI techniques aim to make these “black box” models more transparent, allowing users to understand why a particular prediction was made. This increased transparency builds trust and facilitates better model validation and debugging.

- Reinforcement Learning: This type of machine learning allows models to learn through trial and error, choosing actions, observing the resulting rewards, and adjusting their strategy over time. This is particularly useful in dynamic environments where conditions are constantly changing, such as financial markets or supply chain management.
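The federated learning idea from the list above can be sketched in a few lines. In the simplest scheme, usually called federated averaging, each site trains locally and sends back only its model weights and sample count; a central server then combines them with a sample-weighted mean. The hospital names, weights, and counts below are hypothetical, and real deployments add secure aggregation and multiple training rounds on top of this step.

```python
def federated_average(client_updates):
    """Combine per-client model weights into one global model.

    client_updates: list of (weights, n_samples) pairs. Only these summaries
    leave each client; the raw records never do."""
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(w[i] * n for w, n in client_updates) / total
        for i in range(dim)
    ]

# Hypothetical locally trained weights from two hospitals.
hospital_a = ([1.0, 2.0], 100)   # (weights, number of local patients)
hospital_b = ([3.0, 4.0], 300)

global_weights = federated_average([hospital_a, hospital_b])
```

Weighting by sample count means the larger hospital pulls the global model further toward its local solution, which is the usual default but not the only possible choice.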

Key Challenges in Enhancing Accuracy and Reliability

Despite the promising advancements, several challenges need to be addressed to ensure the continued improvement of AI-powered predictive models. These challenges require careful consideration and proactive solutions from researchers, developers, and policymakers alike.

- Data Bias and Fairness: AI models are only as good as the data they are trained on. Biased data can lead to unfair or discriminatory outcomes. Addressing this requires careful data curation, preprocessing techniques, and the development of algorithms that explicitly mitigate bias.

- Data Scarcity and Quality: High-quality, representative data is essential for training accurate models. In many domains, obtaining sufficient data can be challenging, particularly for rare events or underrepresented populations. This necessitates the development of techniques for handling missing data, synthesizing data, and improving data collection strategies.

- Model Interpretability and Explainability: While XAI is gaining traction, making complex models truly interpretable remains a significant challenge. This is crucial for building trust, identifying errors, and ensuring responsible use of AI in high-stakes applications.

- Computational Resources and Scalability: Training sophisticated AI models can require substantial computational resources and expertise. Making these technologies accessible to a wider range of users and organizations is essential for widespread adoption.

Explainable AI (XAI) and Improved Transparency

Explainable AI (XAI) is crucial for building trust and ensuring the responsible use of AI-powered predictive analytics. By providing insights into the decision-making process of AI models, XAI helps users understand the rationale behind predictions, identify potential biases, and debug errors. For instance, in loan applications, XAI could reveal the specific factors contributing to a loan rejection, allowing for fairer and more transparent decision-making. This transparency is particularly critical in high-stakes applications like healthcare and finance, where the consequences of inaccurate predictions can be significant. The development and implementation of robust XAI techniques are therefore paramount for the future of AI-powered predictive analytics.
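For a linear scoring model, the loan example can be explained exactly: each feature’s contribution is its weight times its value, and the contributions add up to the final score. The sketch below ranks those contributions to show what pushed an application toward rejection. The feature names, weights, bias, and applicant values are all hypothetical, and more complex models need approximation techniques rather than this direct decomposition.

```python
def explain_linear_decision(weights, bias, applicant, threshold=0.0):
    """Break a linear score into per-feature contributions, most negative first."""
    contributions = {name: weights[name] * applicant[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: kv[1])
    return {"approved": score >= threshold, "score": score, "ranked": ranked}

# Hypothetical model and applicant (features assumed to be pre-scaled).
weights = {"credit_score_scaled": 2.0, "debt_to_income": -3.0, "late_payments": -0.5}
bias = 0.2
applicant = {"credit_score_scaled": 0.6, "debt_to_income": 0.5, "late_payments": 2}

explanation = explain_linear_decision(weights, bias, applicant)
top_reason = explanation["ranked"][0][0]  # feature that hurt the score most
```

An explanation in this form can be turned directly into a plain-language reason for the applicant, and it also makes it easy to spot a feature that should not be influencing the decision at all.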

Conclusion

The marriage of AI and predictive analytics is no longer a futuristic fantasy; it’s the present reality, driving smarter decisions and more accurate forecasts across countless industries. While challenges remain, particularly in addressing bias and ensuring ethical implementation, the potential for AI to further enhance the precision and reliability of predictive models is immense. The future is not just predicted; it’s being actively shaped by the power of AI-driven insights.