How AI Is Changing the Future of Music Production and Composition

Forget cheesy elevator music. AI is shaking up the industry, from composing entire symphonies to mastering your latest bedroom track. We’re diving into the algorithms to explore how artificial intelligence is not just assisting but fundamentally altering the way music is created, produced, and even experienced. Get ready for a sonic revolution.

This isn’t about robots replacing human artists; it’s about a powerful new toolset expanding creative possibilities. Imagine AI generating unique soundscapes, accelerating the production process, and even helping musicians collaborate across continents. But this technological leap also raises questions about copyright, artistic ownership, and the very definition of musical creativity. We’ll unpack it all, exploring the potential, the pitfalls, and the exciting future of AI in music.

AI-Powered Music Composition Tools

The music industry is undergoing a seismic shift, thanks to the rapid advancements in artificial intelligence. AI is no longer a futuristic fantasy; it’s a tangible tool reshaping how music is created, composed, and experienced. From generating entire melodies to assisting with intricate arrangements, AI-powered tools are empowering musicians of all skill levels to explore new creative avenues. This section delves into the functionalities and impact of these innovative tools.

AI music composition tools leverage various algorithms to generate musical pieces. These tools range from simple melody generators to sophisticated systems capable of creating entire compositions with complex harmonies and arrangements. The level of user interaction varies widely, with some tools offering complete creative control while others operate more autonomously. Understanding the underlying technologies and creative workflows is key to harnessing their potential.

AI Music Composition Tool Functionalities

Several prominent AI music composition tools are making waves in the industry. Amper Music, for instance, allows users to specify parameters like genre, mood, and instrumentation to generate custom music for various applications, such as video games or advertising. Jukebox, developed by OpenAI, is known for its ability to generate music in diverse styles, mimicking the sounds of specific artists. AIVA (Artificial Intelligence Virtual Artist) focuses on composing music for film and video games, offering a more tailored approach for professional productions. These tools differ significantly in their capabilities and user interfaces, offering a spectrum of options for musicians with varying levels of technical expertise. Some offer simple drag-and-drop interfaces, while others require more in-depth knowledge of music theory and programming.

Comparison of AI Composition Approaches

Different AI composition tools employ distinct approaches. Markov chains, for example, predict the next musical event from the probability of its occurrence given the preceding events. This method is relatively simple, but because it only looks a few events back, its output can sound repetitive or aimless over longer passages. Neural networks, on the other hand, learn complex patterns from vast datasets of music, which lets them capture longer-range structure and generate more nuanced compositions. Generative adversarial networks (GANs) take this a step further by pitting two neural networks against each other: a generator that produces music and a discriminator that tries to tell generated music from real examples. As the two compete, the generator’s output can become harder to distinguish from the training music, although GANs are also harder to train. Each approach has its strengths and limitations, influencing the style and complexity of the generated music. For instance, Markov chains are well suited to simple background music, while neural approaches such as GANs are better suited to more complex compositions.
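To make the Markov chain idea concrete, here is a minimal sketch in Python. It is a first-order chain over note names only, with no rhythm or harmony, and the training melody, function names, and seed are all made up for illustration; it is not how any of the tools named above work internally.

```python
import random
from collections import defaultdict

def build_transitions(melody):
    """Map each note to the list of notes that followed it in the training melody."""
    transitions = defaultdict(list)
    for current, following in zip(melody, melody[1:]):
        transitions[current].append(following)
    return transitions

def generate(transitions, start, length, seed=None):
    """Walk the chain, picking each next note in proportion to how often it followed the current one."""
    rng = random.Random(seed)
    note = start
    result = [note]
    for _ in range(length - 1):
        choices = transitions.get(note)
        if not choices:  # dead end: this note never had a successor in training
            break
        note = rng.choice(choices)
        result.append(note)
    return result

# Hypothetical training melody (illustrative note names only)
training_melody = ["C4", "D4", "E4", "C4", "E4", "G4", "E4",
                   "D4", "C4", "D4", "E4", "E4", "D4", "C4"]

transitions = build_transitions(training_melody)
new_melody = generate(transitions, start="C4", length=8, seed=42)
print(new_melody)
```

Every step in the generated melody is a transition that already appeared in the training data, which is exactly why this approach tends to sound familiar and, over longer stretches, predictable.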

The Creative Process with AI Composition Tools

The creative process when using AI composition tools is a collaborative one. Musicians aren’t simply passive consumers of AI-generated music; they actively shape and refine the output. The process often begins with defining the desired style, mood, and instrumentation. The musician then interacts with the AI tool, providing input and iteratively refining the generated music. This could involve adjusting parameters, selecting specific sections, or even manually editing the generated output. The level of user control varies significantly depending on the tool and the musician’s technical expertise. Some tools offer a high degree of control, allowing musicians to fine-tune every aspect of the composition, while others provide more of a starting point that the musician then develops further. The key is to view the AI as a collaborator, not a replacement, for the human artist.

Hypothetical Workflow for a Musician Using AI Composition Tools

| Stage | Action | AI Tool Used | Expected Outcome |
|---|---|---|---|
| Idea Generation | Define desired genre, mood, and instrumentation for a new track. | Amper Music | Initial musical sketch reflecting the specified parameters. |
| Melody Development | Refine the generated melody, adjusting rhythm and pitch using the tool’s editing features. | AIVA | Improved melody with enhanced musicality and emotional impact. |
| Harmony and Arrangement | Experiment with different harmonic progressions and instrumental arrangements using the AI tool’s suggestions. | Jukebox | A more complex and layered musical arrangement. |
| Finalization and Polishing | Manually edit and refine the composition, adding final touches and ensuring overall coherence. | Digital Audio Workstation (DAW) | A polished and finalized musical piece ready for recording and mixing. |

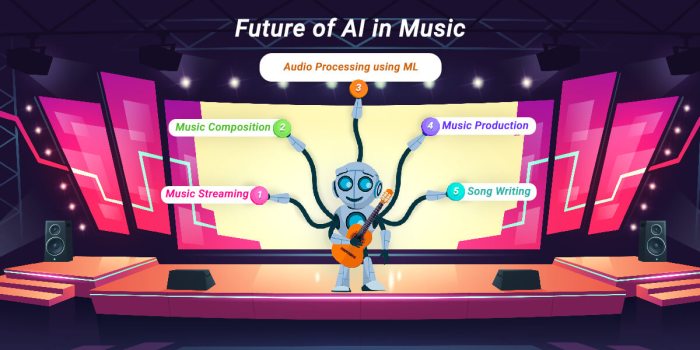

AI’s Impact on Music Production Techniques

Music production, traditionally a realm of human artistry and painstaking craftsmanship, is changing just as quickly. AI is actively reshaping the way music is produced, from the initial spark of an idea to the final polished master. This transformation affects not only composition, as we’ve already discussed, but also the intricate processes of mixing, mastering, and sound design.

AI is revolutionizing traditional music production techniques, offering both unprecedented opportunities and intriguing challenges. Tools leveraging machine learning are automating tasks previously requiring years of experience, while simultaneously opening doors to entirely new sonic landscapes. This blend of automation and creative expansion is fundamentally altering the workflow for producers and engineers.

AI-Powered Mixing and Mastering

AI-powered mixing and mastering plugins are becoming increasingly sophisticated, offering features that streamline the process and, in some cases, even improve the final product. These tools utilize algorithms to analyze audio tracks, identify problematic frequencies, and automatically adjust levels, EQ, and compression. This automation saves considerable time and effort, allowing producers to focus more on creative aspects of their work. However, the reliance on AI also raises concerns about the loss of artistic control and the potential homogenization of sound. While AI can certainly enhance a mix, it’s crucial for producers to retain a critical ear and fine-tune the results to maintain their unique artistic signature.
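The “analyze the audio, then adjust levels automatically” step can be illustrated with a very small sketch. This one is rule-based rather than learned, and it is not how any particular plugin works: the -18 dBFS target, the track names, and the synthetic sine-wave “tracks” are assumptions chosen purely for illustration.

```python
import math

def rms(samples):
    """Root-mean-square level of float samples in the range [-1.0, 1.0]."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def to_db(level):
    """Convert a linear level to decibels relative to full scale."""
    return 20 * math.log10(level)

def balance(tracks, target_db=-18.0):
    """Scale each track toward the same RMS level, capping the gain so no sample clips."""
    balanced = {}
    for name, samples in tracks.items():
        peak = max(abs(s) for s in samples)
        if peak == 0:  # silent track: nothing to measure or scale
            balanced[name] = list(samples)
            continue
        gain = 10 ** ((target_db - to_db(rms(samples))) / 20)
        gain = min(gain, 1.0 / peak)  # never push the loudest sample past full scale
        balanced[name] = [s * gain for s in samples]
    return balanced

def sine(amplitude, freq_hz, n=4800, rate=48000):
    """Synthetic test tone standing in for a recorded track."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / rate) for i in range(n)]

# Hypothetical session: a quiet vocal and a loud bass
tracks = {"vocals": sine(0.05, 440), "bass": sine(0.8, 100)}
balanced = balance(tracks)
for name, samples in balanced.items():
    print(name, round(to_db(rms(samples)), 1), "dBFS")
```

A real assistant would go further, weighing frequency content, genre, and how tracks mask one another, but the shape is the same: measure, compare against a target, apply a correction. It also shows why a critical ear still matters, since nothing in the code knows whether the vocal should sit above the bass.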

AI in Audio Restoration and Enhancement

AI excels in tasks like audio restoration, noise reduction, and vocal tuning. For instance, algorithms can effectively remove background hiss and crackle from old recordings, helping to preserve historically significant audio. Similarly, AI-powered vocal tuning tools offer precise pitch correction and can even subtly alter vocal timbre to achieve specific effects. The advantages are clear: higher-quality recordings with less manual effort. However, over-reliance on these tools can lead to a sterile, unnatural sound. The delicate balance between enhancing a recording and preserving its character remains a critical challenge. For example, AI might flawlessly remove background noise, but it could also inadvertently eliminate subtle nuances, such as room ambience or a singer’s breath, that contribute to the recording’s overall charm and authenticity.

AI and Innovative Sound Design

AI is not just automating existing processes; it’s also enabling the creation of entirely new sounds and textures. By training AI models on vast datasets of existing sounds, producers can generate unique sonic landscapes that would be difficult or impossible to achieve through traditional methods. This opens up exciting possibilities for experimental music and sound design, allowing artists to push the boundaries of sonic exploration. For instance, AI can be used to generate unique synth patches, create complex rhythmic patterns, and even compose entire musical sections. The creative potential is immense, but requires producers to develop new workflows and approaches to integrate these technologies effectively.

Comparative Analysis of AI-Powered Mixing Plugins

| Plugin Name | Features | Strengths | Weaknesses |
|---|---|---|---|
| Plugin A (Example) | Automated mixing, EQ, compression, mastering | Fast and efficient workflow, good for beginners | Can sound overly processed, limited customization options |
| Plugin B (Example) | AI-powered spectral analysis, dynamic processing, detailed control | Precise control, high-quality results, suitable for experienced users | Steeper learning curve, computationally intensive |
| Plugin C (Example) | Genre-specific presets, real-time mixing, collaboration features | User-friendly interface, versatile for different genres, facilitates collaboration | Presets may limit creative freedom, potential for subscription fees |

The Role of AI in Music Distribution and Marketing

Music distribution and marketing, traditionally reliant on gut feeling and established networks, are changing too. AI is actively reshaping how music is discovered, shared, and promoted, offering both exciting opportunities and complex ethical challenges. This section looks at the role of AI in music distribution and marketing, exploring its impact on platforms, personalization, and targeted campaigns.

AI is fundamentally altering music distribution platforms and strategies. Streaming services like Spotify and Apple Music heavily leverage AI algorithms to curate personalized playlists and recommendations, directly impacting artist discovery and listener engagement. These algorithms analyze listening habits, preferences, and even contextual data (like time of day or location) to suggest songs and artists a user might enjoy, effectively bypassing traditional gatekeepers and democratizing access to a wider range of music. This algorithmic curation, however, presents a double-edged sword; while increasing exposure for niche artists, it also creates potential biases and limitations in the types of music promoted.

AI-Driven Personalization of the Music Listening Experience

AI algorithms are revolutionizing how users consume music. Beyond simple recommendations, these systems analyze vast datasets to understand individual musical tastes with surprising accuracy. For example, an algorithm might identify a listener’s preference for specific instruments, tempos, or lyrical themes, leading to highly targeted suggestions that go beyond simple genre classifications. This personalized experience fosters greater user engagement and loyalty, keeping listeners hooked within the platform’s ecosystem. Imagine a scenario where an AI system recognizes a user’s shift in mood based on their listening history and proactively offers a calming playlist to counter stress. This level of personalized interaction is transforming the passive act of listening into a dynamic and responsive experience.
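One simple way to picture recommendations that “go beyond genre” is content-based filtering: describe each track with a few numeric features, average the tracks a listener has played into a taste profile, and rank unheard tracks by how closely they match. The sketch below assumes a tiny invented catalog and three made-up features; real streaming services do not publish their models, and this is not a description of any of them.

```python
import math

def cosine(a, b):
    """Cosine similarity between two feature vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def taste_profile(history, catalog):
    """Average the feature vectors of the tracks a listener has played."""
    vectors = [catalog[track] for track in history]
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def recommend(history, catalog, k=2):
    """Return the k unheard tracks most similar to the listener's profile."""
    profile = taste_profile(history, catalog)
    unheard = [track for track in catalog if track not in history]
    return sorted(unheard, key=lambda t: cosine(profile, catalog[t]), reverse=True)[:k]

# Hypothetical features, each scaled 0..1: (tempo, acousticness, vocal presence)
catalog = {
    "slow_piano":    (0.20, 0.90, 0.10),
    "folk_ballad":   (0.30, 0.80, 0.70),
    "string_suite":  (0.25, 0.95, 0.00),
    "club_anthem":   (0.90, 0.10, 0.60),
    "drum_and_bass": (0.95, 0.05, 0.20),
}

history = ["slow_piano", "folk_ballad"]
print(recommend(history, catalog))
```

Here the slow, acoustic listening history pulls the string piece to the top even though no genre label is involved. The same mechanism also hints at the feedback loop discussed later: a listener only ever hears what resembles what they already heard.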

AI in Music Marketing and Promotion

AI is transforming music marketing from broad-brush approaches to highly targeted campaigns. By analyzing user data, AI can identify specific demographics and listening preferences to create highly effective advertising strategies. This means artists and labels can focus their resources on reaching audiences most likely to engage with their music, optimizing marketing budgets and maximizing ROI. Furthermore, AI is automating the creation of promotional materials, such as social media posts and targeted ads, adapting the message to resonate with specific user segments. This precision marketing significantly reduces the guesswork inherent in traditional promotion methods, leading to more efficient and effective campaigns. For example, an AI system might identify a specific demographic group particularly receptive to a particular song and tailor social media ads to that group, maximizing engagement and reach. Moreover, AI is used in creating playlists tailored to specific marketing campaigns, ensuring that promoted songs are integrated seamlessly into the listening experience.

Ethical Concerns Regarding AI in Music Distribution and Marketing

The use of AI in music distribution and marketing raises several significant ethical concerns that need careful consideration.

- Bias and Discrimination: AI algorithms are trained on existing data, which may reflect existing biases in the music industry. This can lead to underrepresentation of certain artists or genres, perpetuating inequalities.

- Lack of Transparency: The inner workings of many AI algorithms are opaque, making it difficult to understand how decisions are made about music recommendations or advertising targeting. This lack of transparency can lead to unfair or discriminatory outcomes.

- Data Privacy: The use of AI in music marketing relies on collecting and analyzing vast amounts of user data, raising concerns about privacy and data security. How this data is used, stored, and protected needs rigorous ethical oversight.

- Artist Exploitation: The algorithmic nature of music discovery could lead to situations where artists are unfairly disadvantaged, with their music buried by the system or overshadowed by more commercially successful artists.

- Monopolistic Practices: The power of AI-driven platforms to shape music consumption could lead to monopolistic practices, limiting artist choice and potentially stifling creativity and innovation.

AI and the Future of Musical Collaboration

Imagine a world where geographical boundaries cease to be a barrier for musical collaboration. AI is poised to revolutionize how musicians connect and create, transcending limitations of distance, language, and even musical expertise. It’s not about replacing human artists, but augmenting their capabilities and fostering unprecedented creative partnerships.

AI facilitates collaborations between musicians from different locations and backgrounds by providing seamless real-time interaction tools. Imagine musicians in Tokyo, Rio, and London jamming together, each contributing their unique style, all synchronized and coordinated through an AI-powered platform. This platform could translate musical ideas between different instruments and styles, allowing for a richer, more diverse sonic tapestry than ever before possible. Furthermore, AI could translate musical notation and verbal descriptions, breaking down language barriers that often hinder international collaborations.

AI as a Collaborative Partner in Musical Creation

AI systems can act as active participants in the creative process, not merely as tools. They can generate musical ideas, suggest harmonies, offer rhythmic variations, and even compose entire sections of a piece based on the human composer’s input. This collaborative approach could lead to unexpected musical discoveries and push the boundaries of artistic expression. For example, an AI might analyze a composer’s existing work to identify recurring melodic motifs and suggest variations or new developments, thereby inspiring the composer to explore uncharted territories within their own style. In other instances, an AI might suggest entirely new instrumental combinations or textures based on its analysis of existing musical genres.
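The motif example above can be sketched without any machine learning at all. One common trick is to compare the steps between notes rather than the notes themselves, so a figure is still recognized when it returns in a different key. The melody below is invented, pitches are written as MIDI note numbers, and the function names are hypothetical.

```python
from collections import Counter

def intervals(pitches):
    """Semitone steps between consecutive pitches, which stay the same under transposition."""
    return [b - a for a, b in zip(pitches, pitches[1:])]

def recurring_motifs(pitches, length=3, min_count=2):
    """Return interval patterns of `length` steps that occur at least `min_count` times."""
    steps = intervals(pitches)
    patterns = Counter(
        tuple(steps[i:i + length]) for i in range(len(steps) - length + 1)
    )
    return [(motif, count) for motif, count in patterns.most_common() if count >= min_count]

# Hypothetical melody: the figure 60-62-64-60 comes back a fifth higher as 67-69-71-67
melody = [60, 62, 64, 60, 65, 67, 69, 71, 67, 72]

for motif, count in recurring_motifs(melody):
    print(motif, "appears", count, "times")
```

A system like the one described would build on this kind of analysis, for instance by proposing the same interval pattern inverted or stretched, and leave it to the composer to decide which suggestions are worth keeping.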

The Impact of AI on the Role of Human Musicians and Composers

The integration of AI in music creation does not diminish the role of human musicians and composers; rather, it transforms it. Instead of being solely responsible for every aspect of a musical piece, human artists can focus on higher-level creative decisions, such as conceptualization, emotional expression, and artistic direction. AI handles the technical aspects, freeing up the human artist to delve deeper into the artistic vision. This shift allows for greater experimentation and exploration, potentially leading to a renaissance in musical innovation. The human artist remains the heart and soul of the creation, guiding the AI, shaping its output, and infusing the music with their unique personality and emotional depth.

A Fictional Collaboration: “Echoes of the Digital Dawn”

Imagine Anya Petrova, a renowned classical composer known for her melancholic string quartets, collaborating with “Muse,” a sophisticated AI music system. Anya inputs a basic melodic phrase expressing a sense of longing and introspection. Muse analyzes the phrase and suggests harmonic progressions and rhythmic variations that reflect Anya’s style but add unexpected twists. Anya then provides textual descriptions of the emotions she wants to evoke in each section (despair, hope, acceptance), and Muse generates corresponding musical passages. The resulting piece, “Echoes of the Digital Dawn,” blends the elegance of classical string instruments with the ethereal textures generated by Muse. The music incorporates unconventional sounds, created by the AI, that evoke a sense of technological wonder while retaining the emotional core provided by Anya’s human touch. The instrumentation features a traditional string quartet, augmented by synthesized textures that subtly shift and evolve throughout the piece.

AI-Generated Music and Copyright Issues

The rise of AI in music production has ushered in a new era of creative possibilities, but it’s also thrown a wrench into the well-established mechanisms of copyright law. Suddenly, we’re grappling with questions of authorship, ownership, and the very definition of creativity when a machine is involved. The legal landscape is struggling to keep pace, leaving a fertile ground for uncertainty and potential disputes.

The core problem lies in the fact that current copyright law is largely built around human authorship. Existing frameworks are ill-equipped to handle scenarios where the “creator” is an algorithm trained on vast datasets of existing music. This creates a complex web of ethical and legal challenges that need careful consideration.

Legal Frameworks and Their Limitations

Current copyright law generally grants protection to works of authorship fixed in a tangible medium of expression. However, determining authorship when AI is involved is problematic. Is the copyright held by the programmer who created the AI? The user who inputted the parameters? Or does the AI itself somehow possess authorship? Many jurisdictions lack clear legal precedents, leading to significant uncertainty. Where guidance does exist, it tends to exclude rather than accommodate: the US Copyright Office, for example, has stated that works generated solely by AI, without human authorship, are not eligible for copyright protection. This stance highlights the limitations of existing frameworks in adapting to a rapidly evolving technology, and it leaves many creators in a precarious position, unsure of their rights and protections. There’s a clear need for legal frameworks to evolve and provide clarity.

Potential Legal Disputes

The lack of clear legal frameworks opens the door to a range of potential legal disputes. Imagine a scenario where an AI, trained on a vast library of copyrighted music, generates a song that incorporates elements strikingly similar to a protected work. Determining infringement would be incredibly complex. Further, consider the potential for disputes between the AI developer, the user who prompted the AI, and the copyright holders of the original works used in the AI’s training data. These scenarios highlight the urgent need for clear guidelines and legal precedents to prevent a flood of costly and time-consuming litigation. The potential for these kinds of conflicts underscores the necessity for proactive legal intervention.

Different Approaches to Copyright and Their Impact

Several approaches to copyright reform could shape the future of AI-generated music. One approach might involve granting copyright to the user who prompts the AI, recognizing them as the “author” of the final product. This might incentivize innovation, but it could also lead to disputes over the extent of the user’s creative contribution. Alternatively, a new category of copyright could be created specifically for AI-generated works, perhaps with a shorter protection period or different licensing requirements. This could strike a balance between protecting creators and fostering innovation. A third possibility is to regulate the data used to train the AI, addressing potential copyright infringement within the training dataset itself, so that the law targets the source material rather than the output. The path forward will likely involve a combination of these approaches, shaped by ongoing legal and ethical debates. The ultimate outcome will significantly impact the future development and widespread adoption of AI music technology.

Final Summary

The integration of AI into music production and composition is no longer a futuristic fantasy; it’s happening now. While ethical considerations and copyright debates continue to evolve, the undeniable impact of AI on the creative process is reshaping the industry at a rapid pace. From groundbreaking new sounds to hyper-personalized listening experiences, the future of music is being written, one algorithm at a time. And it’s going to be a wild ride.