How is artificial intelligence advancing cybersecurity measures? It’s not just a futuristic fantasy; it’s the present reality shaping how we fight cybercrime. AI is rapidly transforming cybersecurity, moving it beyond reactive measures toward proactive defense. From identifying threats in real time to automating complex security tasks, AI is changing the way we protect our digital world, making defenses smarter, faster, and more efficient.

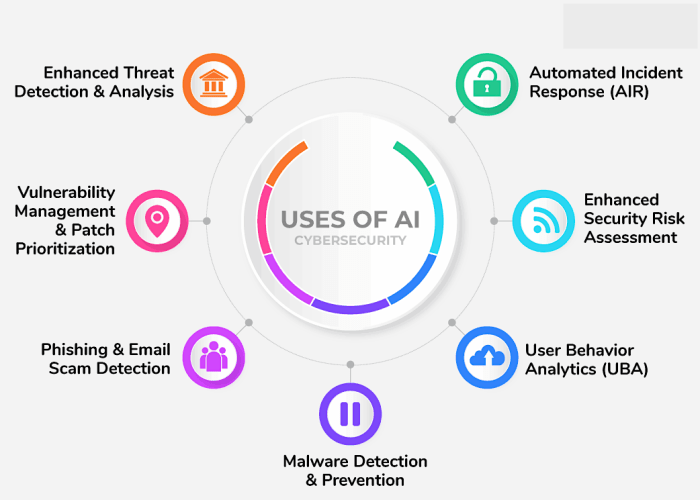

This means less reliance on outdated methods and a significant boost in our ability to stay ahead of the ever-evolving threat landscape. We’re talking about AI-powered threat detection that pinpoints malicious activity with laser precision, AI-driven vulnerability management that proactively identifies weaknesses before they’re exploited, and AI-enhanced SIEM systems that analyze massive amounts of data to detect and respond to incidents with lightning speed. This isn’t just about improving security; it’s about fundamentally changing the game.

AI-Powered Threat Detection

Cybersecurity is a constant arms race, with attackers constantly evolving their tactics. Traditional security methods, while valuable, often struggle to keep pace. This is where Artificial Intelligence (AI) steps in, offering a powerful new arsenal in the fight against cyber threats. AI-powered threat detection leverages the power of machine learning to identify and respond to threats in real-time, significantly enhancing overall security posture.

AI algorithms analyze vast amounts of data – network traffic, system logs, user behavior – to identify patterns indicative of malicious activity. This proactive approach allows for the detection of threats that might otherwise go unnoticed by traditional rule-based systems.

Machine Learning Algorithms for Real-Time Threat Identification and Classification

Machine learning algorithms are the heart of AI-powered threat detection. These algorithms learn from historical data to identify patterns and anomalies that signal malicious behavior. This learning process allows the system to adapt to new and evolving threats, a key advantage over static rule-based systems. For example, an algorithm might learn to identify a phishing attempt by analyzing the sender’s email address, the email content, and the recipient’s behavior. Real-time analysis is crucial, enabling immediate response and mitigation of threats before they can cause significant damage.

Types of AI Algorithms Used for Threat Detection

Several AI algorithms contribute to robust threat detection. Anomaly detection algorithms identify deviations from established baselines in network traffic or system behavior. For instance, an unusual spike in data transfer to an external server could trigger an alert. Signature-based detection, a more traditional method enhanced by AI, uses machine learning to identify known malicious signatures within data streams, significantly improving efficiency and accuracy compared to purely rule-based signature matching. Other advanced techniques, like deep learning, can analyze complex relationships within data to uncover hidden threats.
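
To make the anomaly detection idea concrete, here is a minimal sketch of baseline-deviation detection using a z-score. It is an illustration, not a production detector: the function name `find_spikes`, the threshold of 3 standard deviations, and the traffic figures are all assumptions made for this example.

```python
from statistics import mean, stdev

def find_spikes(baseline, observed, threshold=3.0):
    """Return (index, value, z_score) for observations far above the baseline."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    spikes = []
    for i, value in enumerate(observed):
        # z-score: how many standard deviations the value sits above the mean
        z = (value - mu) / sigma if sigma > 0 else 0.0
        if z > threshold:
            spikes.append((i, value, round(z, 2)))
    return spikes

# Hypothetical outbound traffic to one external server, in MB per minute
baseline_mb = [10, 12, 11, 9, 10, 13, 11, 10, 12, 11]
observed_mb = [11, 10, 95, 12]

spikes = find_spikes(baseline_mb, observed_mb)
for index, value, z in spikes:
    print(f"minute {index}: {value} MB is {z} standard deviations above baseline")
```

Only the third observation is flagged here. Real systems typically learn baselines per host and per time of day, which a single global mean does not capture.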

AI-Powered Security Tools and Threat Intelligence Feeds

Many modern security tools leverage AI and incorporate threat intelligence feeds to enhance their detection capabilities. These feeds provide up-to-date information on known threats, vulnerabilities, and attack techniques. AI algorithms then use this intelligence to improve their ability to identify and classify threats. For example, a security information and event management (SIEM) system integrated with an AI engine and threat intelligence feeds can automatically prioritize alerts based on the severity and likelihood of an attack. This allows security teams to focus on the most critical threats. Another example would be an endpoint detection and response (EDR) solution using machine learning to detect malicious behavior on individual endpoints, even if that behavior is novel or hasn’t been previously identified.
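
The alert prioritization described above can be sketched roughly as follows. This assumes each alert already carries a severity rating and a model-estimated likelihood, and that the threat intelligence feed is reduced to a set of known-bad IP addresses. The `Alert` class, the `prioritize` function, and the boost factor are hypothetical and not taken from any particular SIEM product.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    source_ip: str
    severity: int      # assumed scale: 1 (low) to 5 (critical)
    likelihood: float  # assumed model output: probability of a real attack, 0..1

def prioritize(alerts, known_bad_ips, intel_boost=2.0):
    """Sort alerts by severity * likelihood, boosting matches against the feed."""
    def score(alert):
        base = alert.severity * alert.likelihood
        return base * intel_boost if alert.source_ip in known_bad_ips else base
    return sorted(alerts, key=score, reverse=True)

# Hypothetical feed and alerts (addresses come from documentation ranges)
feed = {"203.0.113.7"}
alerts = [
    Alert("198.51.100.4", severity=5, likelihood=0.2),
    Alert("203.0.113.7", severity=3, likelihood=0.5),
    Alert("192.0.2.10", severity=4, likelihood=0.6),
]

ranked = prioritize(alerts, feed)
for alert in ranked:
    print(alert.source_ip, alert.severity, alert.likelihood)
```

The medium-severity alert rises to the top because its source matches the feed, which is the kind of reordering that lets a team look at the most credible threats first.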

Comparison of Traditional and AI-Driven Threat Detection

| Feature | Traditional Security Methods | AI-Driven Threat Detection |
|---|---|---|
| Threat Identification | Signature-based, rule-based; relies on known threats. | Anomaly detection, machine learning; identifies known and unknown threats. |
| Response Time | Often reactive; alerts generated after an attack has begun. | Proactive and real-time; alerts generated as threats emerge. |
| Scalability | Can be challenging to scale to handle large volumes of data. | Highly scalable; can handle massive datasets and growing threat landscapes. |
| Accuracy | High false positive rate; many alerts may be irrelevant. | Improved accuracy; reduced false positives through machine learning. |

AI in Vulnerability Management

The digital landscape is a minefield of potential security breaches, and traditional methods of vulnerability management are struggling to keep pace. AI’s ability to analyze vast datasets, identify patterns, and learn from experience makes it well suited to the complexities of vulnerability management, offering faster, more accurate, and more proactive solutions.

AI significantly enhances vulnerability management by automating previously manual and time-consuming tasks, allowing security teams to focus on more strategic initiatives. This automation isn’t just about efficiency; it’s about drastically improving the accuracy and effectiveness of vulnerability identification and remediation, leading to a stronger overall security posture.

AI’s Role in Identifying and Prioritizing Software Vulnerabilities

AI algorithms excel at sifting through mountains of code and system data to pinpoint weaknesses that might otherwise be missed by human analysts. Machine learning models can be trained on massive datasets of known vulnerabilities, enabling them to identify similar patterns and potential exploits in new software. Furthermore, AI can prioritize vulnerabilities based on their severity, potential impact, and exploitability, allowing security teams to focus their resources on the most critical threats first. This prioritization dramatically improves efficiency and reduces the overall risk. For instance, an AI system might flag a critical vulnerability in a widely used application as higher priority than a low-severity vulnerability in an internal tool used by only a few employees.
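
As a rough illustration of this kind of prioritization, the sketch below combines severity, exploitability, and exposure into a single score. The weights and the 0 to 1 input scale are assumptions chosen for the example. A real system would more likely learn or calibrate them from data, and `risk_score` is a hypothetical helper rather than part of any named tool.

```python
def risk_score(severity, exploitability, exposure):
    """Combine three factors in the range 0..1 into one score (assumed weights)."""
    return round(0.5 * severity + 0.3 * exploitability + 0.2 * exposure, 3)

# Hypothetical findings: a widely used application and a small internal tool
vulns = {
    "public-web-app": risk_score(severity=0.9, exploitability=0.8, exposure=1.0),
    "internal-tool": risk_score(severity=0.3, exploitability=0.4, exposure=0.1),
}

# Highest-risk findings first
queue = sorted(vulns, key=vulns.get, reverse=True)
print(queue)
```

The widely used application lands at the head of the queue, matching the example in the paragraph above.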

Automating Vulnerability Scanning and Penetration Testing

AI automates the tedious and often repetitive processes of vulnerability scanning and penetration testing. Instead of relying on manual scans that can take days or weeks, AI-powered tools can perform comprehensive scans in a fraction of the time. These tools use machine learning to identify potential vulnerabilities, simulate attacks, and analyze the results, providing a much more thorough and efficient assessment of an organization’s security posture. Imagine an AI system autonomously scanning a network, identifying a previously unknown vulnerability, and automatically applying a patch or, where no patch exists yet, a temporary mitigation before malicious actors can take advantage of it.

Examples of AI-Powered Tools Predicting Potential Vulnerabilities

Several companies offer AI-powered vulnerability management tools. For example, some platforms use machine learning to analyze code repositories, identifying potential vulnerabilities even before the software is deployed. These tools often integrate with existing development workflows, providing developers with immediate feedback and enabling them to address vulnerabilities early in the development lifecycle. Another example is AI-driven systems that predict the likelihood of a specific vulnerability being exploited based on factors such as its severity, the prevalence of similar exploits, and the organization’s security posture. This predictive capability allows organizations to proactively address vulnerabilities before they become a significant threat. This proactive approach, driven by AI predictions, significantly reduces the chances of a successful attack.

Steps in an AI-Driven Vulnerability Management Workflow

The implementation of AI in vulnerability management typically follows a structured workflow. Effective use requires a carefully planned and executed process.

- Data Collection and Preparation: Gathering relevant data from various sources, including code repositories, system logs, and vulnerability databases, and cleaning and preparing this data for AI analysis.

- Vulnerability Identification: Using AI algorithms (like machine learning and deep learning) to analyze the data and identify potential vulnerabilities based on patterns and anomalies.

- Vulnerability Prioritization: Ranking identified vulnerabilities based on their severity, potential impact, and exploitability, using AI-driven risk scoring models.

- Vulnerability Remediation: Automating the process of patching or mitigating identified vulnerabilities, potentially integrating with existing IT systems and workflows.

- Continuous Monitoring and Improvement: Continuously monitoring the system for new vulnerabilities, updating AI models with new data, and refining the vulnerability management process based on feedback and results.

AI for Security Information and Event Management (SIEM)

SIEM systems are the unsung heroes of cybersecurity, tirelessly churning through mountains of security logs to identify potential threats. But traditional SIEMs often struggle to keep up with the sheer volume and complexity of modern data, leading to alert fatigue and missed incidents. Enter AI, the superhero sidekick that dramatically boosts SIEM capabilities, turning a good system into a truly exceptional one.

AI significantly enhances SIEM systems by automating and accelerating the analysis of security logs from diverse sources, enabling faster and more accurate threat detection. Instead of relying solely on predefined rules, AI algorithms can learn patterns and anomalies indicative of malicious activity, providing a proactive defense against evolving threats. This allows security teams to focus on critical incidents, rather than getting bogged down in false alarms.

AI-Enhanced Log Analysis and Incident Detection

AI algorithms, particularly machine learning models, are trained on vast datasets of security logs, learning to distinguish between benign and malicious events. These models can identify subtle patterns and correlations that would be impossible for human analysts to spot, leading to earlier detection of sophisticated attacks. For example, an AI-powered SIEM might detect a series of seemingly innocuous login attempts from geographically dispersed locations, eventually recognizing this as a coordinated brute-force attack. This level of detail and pattern recognition significantly improves the accuracy and timeliness of incident detection.
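
The brute-force example can be approximated with a simple sliding-window check, shown below. Note that this is a fixed-threshold heuristic standing in for what a trained model would learn from log data; the event tuple layout, the function name, and all thresholds are assumptions made for illustration.

```python
from collections import defaultdict

def detect_distributed_bruteforce(events, window_s=300, min_failures=5, min_countries=3):
    """Flag accounts with many failed logins from several countries in one window.

    Each event is (timestamp_seconds, account, country, success).
    """
    by_account = defaultdict(list)
    for ts, account, country, success in events:
        if not success:
            by_account[account].append((ts, country))

    flagged = set()
    for account, failures in by_account.items():
        failures.sort()
        start = 0
        for end in range(len(failures)):
            # Shrink the window until it spans at most window_s seconds
            while failures[end][0] - failures[start][0] > window_s:
                start += 1
            window = failures[start:end + 1]
            countries = {country for _, country in window}
            if len(window) >= min_failures and len(countries) >= min_countries:
                flagged.add(account)
                break
    return flagged

# Hypothetical log extract
events = [
    (0, "alice", "US", False), (30, "alice", "BR", False),
    (60, "alice", "DE", False), (90, "alice", "US", False),
    (120, "alice", "IN", False), (130, "alice", "US", True),
    (10, "bob", "US", False), (4000, "bob", "US", False),
]
flagged = detect_distributed_bruteforce(events)
print(flagged)
```

Each failed attempt looks harmless on its own; only the combination of count, time window, and geographic spread marks the account as under attack.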

Benefits of AI-Driven Correlation and Analysis of Security Events

The ability of AI to correlate security events across various sources is a game-changer. Traditional SIEMs often struggle to integrate and analyze data from disparate systems, leading to fragmented views of security posture. AI, however, can seamlessly integrate data from firewalls, intrusion detection systems, endpoint security tools, and cloud security platforms, providing a holistic view of the organization’s security landscape. This comprehensive analysis allows for more accurate threat assessment and faster response times. For instance, AI could correlate a suspicious email attachment with unusual network activity and a compromised user account, instantly painting a clear picture of a potential phishing attack.

Reducing False Positives in SIEM Alerts

One of the biggest challenges with traditional SIEMs is the high number of false positives. These alerts, while seemingly significant, often represent benign events, leading to alert fatigue and decreased responsiveness to genuine threats. AI significantly reduces this problem by applying machine learning models to filter out noise and prioritize alerts based on their likelihood of being actual threats. This is achieved through sophisticated anomaly detection and pattern recognition, allowing security teams to focus their efforts on the most critical incidents. For example, an AI-powered SIEM might learn to ignore alerts triggered by specific applications known to generate frequent, but harmless, log entries.
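
One simple way to picture this filtering is to learn, from past analyst verdicts, which alert sources almost never produce a real incident. The sketch below does that with plain counting instead of a machine learning model. The function name, the thresholds, and the sample history are assumptions for illustration only.

```python
from collections import Counter

def learn_noisy_sources(labeled_alerts, min_seen=20, max_true_rate=0.01):
    """Return sources whose past alerts were almost always benign.

    labeled_alerts is an iterable of (source, was_real_threat) pairs.
    """
    seen, true_hits = Counter(), Counter()
    for source, was_real in labeled_alerts:
        seen[source] += 1
        if was_real:
            true_hits[source] += 1
    return {
        source for source, count in seen.items()
        if count >= min_seen and true_hits[source] / count <= max_true_rate
    }

# Hypothetical analyst-labeled history
history = (
    [("backup-agent", False)] * 50
    + [("vpn-gateway", False)] * 15
    + [("vpn-gateway", True)] * 5
)
noisy = learn_noisy_sources(history)

# Suppress new alerts from sources learned to be noise
incoming = ["backup-agent", "vpn-gateway", "backup-agent"]
kept = [source for source in incoming if source not in noisy]
print(kept)
```

The `min_seen` guard matters: a source should not be silenced until there is enough history to justify it, and suppressed sources should be re-evaluated periodically in case their behavior changes.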

AI-Driven Incident Response within a SIEM System

The process can be laid out as a flowchart with the following steps:

- Security Event Detected

- AI-powered Anomaly Detection, which branches to either Benign Event (Discarded) or Malicious Event (Investigated)

- Threat Classification

- Automated Response (if applicable)

- Security Team Notification

- Incident Remediation, with a feedback loop back to AI-powered Anomaly Detection to improve future detection accuracy

This flow shows how AI streamlines incident response by automating threat classification, prioritizing alerts, and even triggering automated responses in certain cases, significantly reducing the time and resources required to handle security incidents.
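
The same flow can be expressed as a short function. This is a sketch under assumptions: the scoring and classification callables stand in for trained models, the `AUTO_RESPONSES` mapping and the 0.8 threshold are hypothetical, and the feedback loop into the detector is left out for brevity.

```python
# Hypothetical mapping from threat category to an automated action
AUTO_RESPONSES = {"malware": "isolate_host", "ddos": "rate_limit_traffic"}

def handle_event(event, anomaly_score, classify, threshold=0.8):
    """Walk one event through the flow and return the list of steps taken."""
    steps = ["security_event_detected", "anomaly_detection"]
    if anomaly_score(event) < threshold:
        return steps + ["benign_event_discarded"]
    steps.append("malicious_event_investigated")
    category = classify(event)
    steps.append(f"threat_classified:{category}")
    if category in AUTO_RESPONSES:  # automated response only where one is defined
        steps.append(f"automated_response:{AUTO_RESPONSES[category]}")
    steps += ["security_team_notified", "incident_remediation"]
    return steps

# Stand-in scorer and classifier; a real system would use trained models
def score(event):
    return event["score"]

def label(event):
    return event["kind"]

benign = handle_event({"score": 0.1, "kind": "none"}, score, label)
malware = handle_event({"score": 0.95, "kind": "malware"}, score, label)
print(benign)
print(malware)
```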

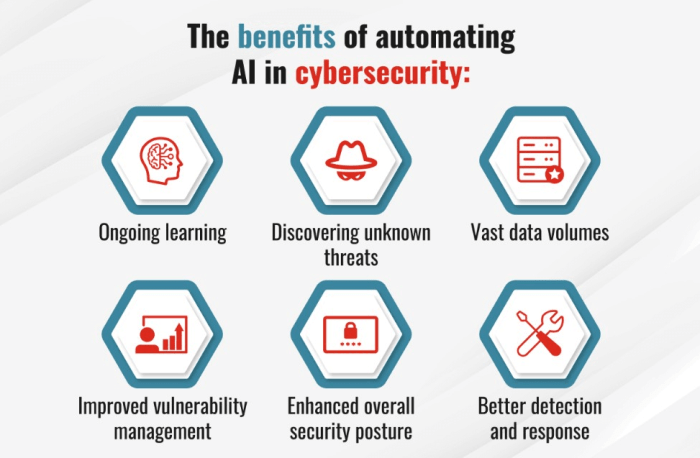

AI-Driven Security Automation

The sheer volume of data generated in today’s digital landscape makes manual cybersecurity management practically impossible. Enter AI-driven security automation, a game-changer that leverages artificial intelligence to streamline and enhance security operations. By automating repetitive tasks and analyzing vast datasets, AI empowers security teams to focus on more strategic and complex threats, ultimately strengthening an organization’s overall security posture. This section will delve into the applications, benefits, and examples of AI in automating critical security functions.

AI Automation in Patching

AI significantly improves the patching process, a crucial aspect of vulnerability management often hampered by manual efforts. AI algorithms can identify vulnerabilities in software and systems, prioritize patches based on risk level, and even automate the deployment of patches across an organization’s infrastructure. This reduces the window of vulnerability, minimizing the risk of exploitation. For example, AI-powered patching systems can analyze software updates, identify critical patches, and schedule their deployment during off-peak hours to minimize disruption to business operations. This automated approach is significantly faster and more efficient than manual patching, which is often slow, prone to errors, and struggles to keep pace with the constant influx of new vulnerabilities.
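
A minimal sketch of risk-aware patch scheduling might look like the following. It assumes each patch already has a risk score between 0 and 1, that off-peak hours run from 01:00 to 05:00, and that critical patches should skip the wait entirely. That last rule is an assumption of this example, not something the text above prescribes, and the function names are hypothetical.

```python
from datetime import datetime, timedelta

def next_off_peak(now, start_hour=1, end_hour=5):
    """Return `now` if it is inside the off-peak window, else the next window start."""
    if start_hour <= now.hour < end_hour:
        return now
    candidate = now.replace(hour=start_hour, minute=0, second=0, microsecond=0)
    if now.hour >= end_hour:
        candidate += timedelta(days=1)  # today's window has already passed
    return candidate

def schedule(patches, now, critical_threshold=0.9):
    """Deploy critical patches immediately; defer the rest to off-peak hours."""
    plan = {}
    for name, risk in sorted(patches.items(), key=lambda item: item[1], reverse=True):
        plan[name] = now if risk >= critical_threshold else next_off_peak(now)
    return plan

# Hypothetical patch list with risk scores
now = datetime(2024, 3, 4, 14, 30)
plan = schedule({"openssl": 0.95, "pdf-viewer": 0.4}, now)
for name, when in plan.items():
    print(name, when.isoformat())
```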

AI in Automated Incident Response

Incident response is a time-sensitive process demanding immediate attention and rapid action. AI can drastically improve the efficiency and effectiveness of incident response by automating several key steps. AI-powered security information and event management (SIEM) systems can automatically detect and classify security incidents, triggering automated responses such as isolating infected systems or blocking malicious traffic. This automation reduces the time it takes to contain a breach, minimizing potential damage. Consider a scenario where a Distributed Denial of Service (DDoS) attack is detected. An AI-powered system can automatically scale up network resources to mitigate the attack, simultaneously initiating an investigation to identify the source and prevent future occurrences. This level of automation is impossible to achieve through manual processes alone.

AI in Automating User Access Management

User access management (UAM) is another area where AI significantly improves efficiency and security. AI algorithms can analyze user behavior patterns to identify anomalies and potential security threats. For example, an AI system can detect unusual login attempts from unfamiliar locations or devices, triggering an alert or automatically blocking access. Furthermore, AI can automate the provisioning and de-provisioning of user accounts, ensuring that employees only have access to the resources they need, minimizing the risk of insider threats. This continuous monitoring and automated control significantly reduces the administrative burden associated with UAM while enhancing overall security.
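
The login example can be sketched as a per-user profile of previously seen countries and devices. This is a deliberately simple stand-in for behavioral analytics: the `LoginProfile` class, the three outcomes, and the rule that two unfamiliar signals together mean a block are all assumptions made for illustration.

```python
from collections import defaultdict

class LoginProfile:
    """Remember which countries and devices each user has logged in from."""

    def __init__(self):
        self.known = defaultdict(set)  # user -> {(country, device_id)}

    def observe(self, user, country, device_id):
        self.known[user].add((country, device_id))

    def assess(self, user, country, device_id):
        seen = self.known.get(user, set())
        new_country = country not in {c for c, _ in seen}
        new_device = device_id not in {d for _, d in seen}
        if new_country and new_device:
            return "block"   # both signals unfamiliar
        if new_country or new_device:
            return "alert"   # one signal unfamiliar
        return "allow"

profile = LoginProfile()
profile.observe("dana", "US", "laptop-1")

print(profile.assess("dana", "US", "laptop-1"))   # familiar country and device
print(profile.assess("dana", "US", "phone-9"))    # new device only
print(profile.assess("dana", "RO", "tablet-3"))   # new country and new device
```

In this sketch a user with no history at all is blocked, so a real deployment would need an enrollment path or a step-up authentication option instead of a hard block.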

Comparison of AI-Driven Security Automation Tools

The following table compares some key features of different AI-driven security automation tools. Note that specific functionalities and pricing can vary depending on the vendor and chosen package.

| Tool | Patching Capabilities | Incident Response Features | User Access Management |
|---|---|---|---|
| Tool A | Automated vulnerability scanning and patch deployment scheduling | Automated threat detection, incident classification, and response | Automated user provisioning and de-provisioning, anomaly detection |
| Tool B | Prioritized patching based on risk assessment, automated patch deployment | Real-time threat detection, automated containment and remediation | Behavioral analytics for threat detection, access control optimization |
| Tool C | Vulnerability assessment and remediation recommendations, patch deployment automation | Automated incident investigation, reporting, and forensics | Role-based access control (RBAC), access review automation |
| Tool D | Automated patch testing and deployment, vulnerability management dashboards | AI-powered threat hunting, automated incident response workflows | Adaptive authentication based on user behavior, privileged access management |

AI in Cybersecurity Training and Education

The cybersecurity landscape is constantly evolving, making it crucial for professionals to stay ahead of emerging threats. Traditional training methods often struggle to keep pace with this rapid change. AI offers a powerful solution, providing dynamic and adaptive learning experiences that better prepare individuals for the complexities of modern cybersecurity. This allows for a more effective and engaging learning process, ultimately strengthening the overall security posture of organizations.

AI can revolutionize cybersecurity training and education by creating immersive and realistic simulations that mimic real-world scenarios. This surpasses static, textbook-based learning, fostering a deeper understanding of threats and responses. Furthermore, AI-powered platforms can personalize the learning journey, adapting to individual strengths and weaknesses, ensuring that each learner receives targeted instruction.

AI-Powered Cybersecurity Training Simulations

AI enables the creation of realistic and adaptive cybersecurity training simulations. These simulations go beyond simple scenarios, offering dynamic environments that react to trainee actions in real-time. For example, a simulation might present a phishing email; if the trainee clicks, the simulation could progress to show the consequences, such as malware infection or data breach. The difficulty and complexity of these simulations can adjust based on the trainee’s performance, providing a continuously challenging and relevant learning experience. This adaptive nature ensures trainees are constantly pushed to improve their skills, addressing specific weaknesses and building confidence in their abilities.
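
The adaptive difficulty described here can be illustrated with a small rule that looks at a trainee's recent results. The success-rate cutoffs of 80% and 40%, the 1 to 10 difficulty range, and the function name are assumptions for this sketch; an actual platform might use a learned model of trainee skill instead.

```python
def next_difficulty(current, recent_results, step=1, min_level=1, max_level=10):
    """Raise difficulty after strong performance, lower it after weak performance.

    recent_results is a list of booleans, one per recent exercise.
    """
    if not recent_results:
        return current
    success_rate = sum(recent_results) / len(recent_results)
    if success_rate >= 0.8:
        current += step
    elif success_rate <= 0.4:
        current -= step
    # Keep the level inside the allowed range
    return max(min_level, min(max_level, current))

# Hypothetical trainee histories
print(next_difficulty(5, [True, True, True, True, True]))
print(next_difficulty(5, [False, False, True, False, False]))
print(next_difficulty(5, [True, False, True, False, True]))
```

A trainee who spots every phishing email moves up a level, one who clicks most of them moves down, and mixed results leave the level unchanged.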

Benefits of AI-Powered Cybersecurity Training Platforms

AI-powered training platforms offer several significant advantages. They provide personalized learning paths tailored to individual needs and learning styles. This targeted approach maximizes learning efficiency and retention. Furthermore, AI can automate the grading and feedback process, freeing up instructors’ time and providing trainees with immediate insights into their performance. This rapid feedback loop enhances learning and helps identify areas needing further attention. The ability to track progress and identify knowledge gaps allows for more effective curriculum development and ensures that training remains relevant and up-to-date.

AI-Based Tools for Assessing Cybersecurity Knowledge and Skills

Several AI-based tools are available to assess cybersecurity knowledge and skills. These tools often use a combination of techniques, such as automated essay scoring, multiple-choice questions with adaptive difficulty, and practical exercises within simulated environments. For instance, a platform might present a series of coding challenges requiring the trainee to identify and fix vulnerabilities in sample code. The AI system analyzes the trainee’s approach, identifying strengths and weaknesses in their problem-solving skills. These assessments provide a comprehensive evaluation of a trainee’s capabilities, going beyond simple knowledge recall to assess practical application skills.

Realistic AI-Powered Cybersecurity Training Scenario

Imagine a trainee participating in an AI-powered simulation designed to test their incident response skills. The scenario begins with an alert indicating a potential ransomware attack on a simulated company network. The trainee must navigate a virtual network, identify the infected systems, contain the spread of the malware, and ultimately restore data from backups. The simulation dynamically responds to the trainee’s actions. If the trainee makes an incorrect decision, the simulation might escalate the attack, presenting new challenges and consequences. If the trainee successfully neutralizes the threat, the simulation might introduce a new, more sophisticated attack vector. This continuous adaptation keeps the trainee engaged and ensures that their skills are tested under pressure. Throughout the exercise, the AI system provides real-time feedback, highlighting both successful actions and areas for improvement. The simulation concludes with a detailed report summarizing the trainee’s performance, including specific areas of strength and weakness. This comprehensive feedback helps the trainee refine their skills and better prepare for real-world incidents.

Ethical Considerations of AI in Cybersecurity

The rapid integration of artificial intelligence (AI) into cybersecurity presents a double-edged sword. While AI offers powerful tools to combat evolving threats, it also introduces a new layer of ethical complexities that demand careful consideration. Failing to address these ethical concerns could lead to unintended consequences, undermining the very security AI is meant to enhance.

AI-powered security systems, while impressive, aren’t immune to the biases present in the data they’re trained on. This can lead to discriminatory outcomes, such as unfairly targeting certain user groups or overlooking genuine threats from less-represented sources. Furthermore, the “black box” nature of some AI algorithms makes it difficult to understand their decision-making processes, hindering accountability and trust.

AI Bias and Limitations in Security Systems

The accuracy and fairness of AI-powered security systems are directly tied to the quality and representativeness of the data used for training. If the training data reflects existing societal biases, the AI system will likely perpetuate and even amplify those biases in its security assessments. For example, an AI system trained primarily on data from one geographical region might be less effective at identifying threats originating from other regions, leading to security vulnerabilities. The lack of transparency in how some AI algorithms reach their conclusions further complicates this issue, making it challenging to identify and correct biased outcomes. This opacity can also hinder efforts to improve the system’s fairness and accuracy.

Risks Associated with AI in Cybersecurity

The use of AI in cybersecurity introduces several significant risks. Adversarial attacks, where malicious actors deliberately manipulate AI systems to circumvent security measures, pose a serious threat. For instance, attackers could craft malware designed to evade AI-based detection systems by exploiting their vulnerabilities or biases. Data privacy is another major concern. AI systems often require access to large datasets of sensitive information to function effectively. This raises concerns about the potential for unauthorized access, misuse, or breaches of confidential data. The potential for misuse of AI-powered surveillance technologies, leading to unwarranted intrusion on privacy, is also a significant ethical challenge.

Ethical Dilemmas in AI Cybersecurity Deployment

Deploying AI in cybersecurity raises several ethical dilemmas. One key challenge is balancing the need for enhanced security with the potential infringement on individual privacy. For example, the use of AI-powered surveillance systems to prevent cyberattacks might necessitate the collection and analysis of personal data, raising concerns about potential abuses of power. Another dilemma involves the allocation of responsibility when AI systems make mistakes. Determining who is accountable when an AI-powered security system fails to detect a threat or incorrectly flags a legitimate activity can be challenging. This is further complicated by the lack of transparency in some AI algorithms, making it difficult to understand the reasons behind their decisions. The potential for autonomous weapons systems, which could make life-or-death decisions without human intervention, raises even more profound ethical concerns.

Guidelines for Ethical AI in Cybersecurity

To mitigate the ethical risks associated with AI in cybersecurity, a comprehensive set of guidelines is crucial. These guidelines should prioritize transparency and explainability in AI algorithms, ensuring that the decision-making processes of AI systems are understandable and auditable. Data privacy should be paramount, with robust mechanisms in place to protect sensitive information used for training and operation of AI systems. Regular audits and assessments should be conducted to identify and address potential biases in AI systems, ensuring fairness and equity in their application. Finally, establishing clear lines of accountability for the actions of AI systems is essential to promote responsible development and deployment. These guidelines should be developed collaboratively, involving experts from various fields, including cybersecurity, AI ethics, and law, to ensure a comprehensive and effective approach. The ultimate goal should be to harness the power of AI for improved cybersecurity while safeguarding ethical principles and fundamental human rights.

AI and the Future of Cybersecurity

The integration of artificial intelligence (AI) is no longer a futuristic concept in cybersecurity; it’s rapidly becoming the backbone of robust defense systems. As cyber threats grow in sophistication and volume, AI offers a crucial advantage, enabling proactive defense and automated responses that simply weren’t possible before. This section explores emerging trends and predicts the transformative impact AI will have on the cybersecurity landscape and its professionals.

AI’s role in cybersecurity is evolving at an unprecedented pace. We’re moving beyond basic threat detection to a world of predictive analysis, automated incident response, and even AI-driven vulnerability discovery. This shift promises a more proactive and efficient approach to security, allowing organizations to stay ahead of the curve in the ever-evolving threat landscape.

Emerging Trends in AI-Driven Cybersecurity Solutions

The field of AI-driven cybersecurity is constantly innovating. We’re seeing a surge in solutions that leverage machine learning (ML) and deep learning (DL) for more accurate and nuanced threat detection. For example, advancements in natural language processing (NLP) are allowing AI systems to analyze large volumes of unstructured data, such as log files and security alerts, to identify subtle patterns indicative of malicious activity. Furthermore, the development of explainable AI (XAI) is crucial; it allows security professionals to understand the reasoning behind AI-driven decisions, increasing trust and transparency. Another key trend is the rise of AI-powered security orchestration, automation, and response (SOAR) platforms, which automate repetitive tasks and streamline incident handling. This allows security teams to focus on more strategic initiatives.

Predictions About the Future Impact of AI on the Cybersecurity Landscape

AI is widely expected to become increasingly crucial in preventing and mitigating sophisticated cyberattacks. The volume and complexity of cyber threats are likely to keep growing in the coming years, and AI will be vital in analyzing this deluge of data to identify threats in real time, significantly reducing response times. We can also expect AI to play a more prominent role in proactive security measures, predicting potential vulnerabilities before they’re exploited. For example, AI could analyze codebases to identify potential weaknesses and suggest mitigation strategies before an attacker discovers them. This proactive approach is a significant shift from the largely reactive strategies of the past. Imagine a future where AI-powered systems automatically patch vulnerabilities as soon as they are identified, significantly reducing the window of opportunity for attackers.

AI’s Impact on the Roles and Responsibilities of Cybersecurity Professionals

The integration of AI will significantly reshape the roles and responsibilities of cybersecurity professionals. While AI will automate many routine tasks, it won’t replace human expertise. Instead, it will augment human capabilities, freeing up security professionals to focus on more complex and strategic challenges. Security professionals will need to develop new skillsets, such as understanding and managing AI-powered security systems, interpreting AI-generated insights, and developing AI-driven security strategies. The demand for professionals skilled in AI and cybersecurity will undoubtedly increase, creating new career opportunities. For instance, roles like AI security engineers and AI ethics specialists will become increasingly important. The future cybersecurity workforce will need a blend of technical expertise and a deep understanding of AI’s capabilities and limitations.

A Visual Representation of the Future of Cybersecurity with AI Integration

Imagine a dynamic, interconnected network representing a company’s digital infrastructure. This network is constantly monitored by a sophisticated AI system, visualized as a central hub glowing with various colors representing different security levels. Thin, brightly lit lines represent secure data flows, while thicker, darker lines represent potential threats identified by the AI. The AI system automatically isolates threats, shown as darkened sections of the network that are being quarantined, and simultaneously alerts human security analysts, represented by smaller, interconnected nodes working collaboratively to assess the situation and strategize a response. The analysts use AI-generated reports and visualizations to quickly understand the threat’s nature and impact, enabling a rapid and effective response. The entire system operates in real-time, adapting and learning from each incident to improve its threat detection and response capabilities. This visual representation showcases a future where AI and human expertise work synergistically to maintain a robust and adaptable cybersecurity posture.

Conclusion

In short, AI is no longer a futuristic concept in cybersecurity; it’s the present and the future. As AI technology continues to evolve, its role in safeguarding our digital assets will only become more critical. The integration of AI in cybersecurity isn’t just about enhancing existing systems; it’s about creating a fundamentally more secure digital ecosystem. By embracing AI’s potential, we can build a future where cyber threats are met with intelligent, proactive defenses, leaving malicious actors struggling to keep up.