The impact of machine learning on scientific research and discovery is nothing short of revolutionary. Forget painstaking manual analysis; we’re talking algorithms crunching colossal datasets, uncovering hidden patterns that would leave even the most seasoned scientists scratching their heads. This isn’t just about speeding things up; it’s about unlocking entirely new avenues of research, generating hypotheses we never knew were possible, and ultimately accelerating scientific progress at a previously unimaginable scale.

From predicting protein folding to designing more effective drugs, machine learning is reshaping how we approach scientific inquiry. It’s not replacing human ingenuity, but augmenting it, providing researchers with powerful tools to tackle complex problems and push the boundaries of human knowledge. This exploration will delve into the specifics, examining both the incredible potential and the inherent challenges of this rapidly evolving field.

Accelerated Data Analysis

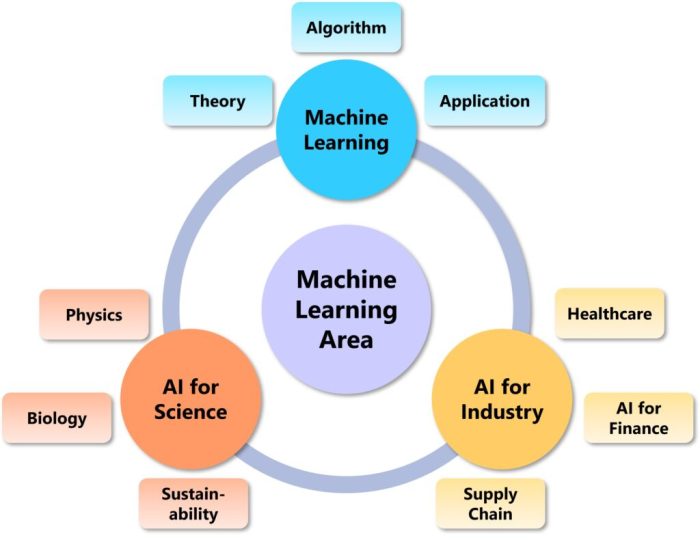

Machine learning (ML) is revolutionizing scientific research by dramatically speeding up the analysis of massive datasets. Traditional methods often struggle to keep pace with the ever-increasing volume of data generated in fields like genomics, astronomy, and climate science. ML algorithms, however, are designed to handle this complexity, offering unprecedented speed and scalability in uncovering hidden patterns and insights.

The sheer volume of data generated in modern scientific research often overwhelms traditional analytical techniques. These techniques, typically relying on manual data processing and simpler statistical models, become computationally expensive and time-consuming when faced with datasets containing millions or billions of data points. This limitation significantly hinders the pace of scientific discovery. In contrast, machine learning algorithms, particularly those based on deep learning, are specifically designed to efficiently process and analyze massive datasets, identifying complex relationships that might otherwise remain hidden.

Comparison of Traditional and Machine Learning Data Analysis Methods

The table below illustrates the key differences between traditional data analysis methods and machine learning approaches in terms of speed, scalability, and typical applications.

| Method | Speed | Scalability | Application Examples |
|---|---|---|---|
| Traditional Statistical Analysis (e.g., ANOVA, t-tests) | Cheap to compute, but each test examines one pre-specified hypothesis, so exploring a large dataset this way is slow and largely manual | Limited; not designed to search high-dimensional data for unknown patterns | Analyzing small-scale experiments, basic data summaries |
| Linear Regression | Fast to fit, even on large datasets | Moderate; handles many observations well, but many correlated or non-linear features call for regularization or manual feature engineering | Modeling linear relationships between variables, prediction with simple models |
| Machine Learning (e.g., Random Forest, Support Vector Machines) | Generally efficient on large datasets, although kernel SVM training slows markedly as the number of samples grows | High for tree ensembles such as random forests; more limited for kernel SVMs | Image recognition in medical imaging, genomic analysis, climate modeling |
| Deep Learning (e.g., Convolutional Neural Networks, Recurrent Neural Networks) | Training is computationally expensive and usually requires GPUs; trained models then process new data quickly | Very high; designed for extremely large and complex datasets, and often improves as more data are added | Drug discovery, analysis of astronomical images, natural language processing in scientific literature |

Scientific Breakthroughs Enabled by Accelerated Data Analysis

The rapid analysis of large datasets using machine learning has already contributed to significant scientific breakthroughs. In genomics, ML algorithms have helped identify genetic markers associated with diseases such as cancer, supporting earlier diagnosis and more targeted therapies. In astronomy, machine learning has accelerated the analysis of large sky surveys, helping to identify new exoplanets and to classify galaxies at scale. In materials science, ML is used to predict the properties of new materials, speeding the development of advanced materials with specific functionalities. The Human Genome Project itself was completed largely before ML became widespread, but interpreting the massive genomic datasets that it and later sequencing efforts produced now relies heavily on ML techniques. During the COVID-19 pandemic, machine learning was likewise applied to SARS-CoV-2 genomic data and to predicting viral protein structures, complementing the conventional sequencing and laboratory work behind vaccine development.

Enhanced Discovery and Hypothesis Generation

Machine learning is revolutionizing scientific research by moving beyond mere data analysis to actively participate in the discovery process itself. It’s no longer just about crunching numbers; it’s about uncovering hidden patterns and generating novel hypotheses that would likely escape human notice, accelerating the pace of scientific breakthroughs. This enhanced discovery power stems from machine learning’s ability to sift through massive datasets, identifying subtle correlations and relationships that are too complex for human researchers to discern manually.

Machine learning algorithms excel at finding patterns and correlations in complex datasets, leading to the formulation of novel hypotheses. This capability is particularly impactful in fields overwhelmed by data, such as genomics, astronomy, and materials science. By identifying unexpected connections, these algorithms effectively act as a powerful hypothesis-generating engine, pointing researchers towards promising avenues of investigation that might otherwise remain unexplored.

Machine Learning Algorithms for Hypothesis Generation

The application of machine learning in hypothesis generation spans various scientific domains. In drug discovery, for instance, algorithms such as support vector machines (SVMs) and random forests are used to predict the efficacy of potential drug candidates from their molecular structure and properties, which can substantially reduce the time and cost of traditional trial-and-error screening. In materials science, machine learning models predict the properties of new materials, guiding the design and synthesis of materials with desired characteristics such as high strength or conductivity. Astronomers use machine learning to sift telescope data for patterns and anomalies that could indicate exoplanets or other celestial phenomena. Convolutional neural networks (CNNs), for example, have proved effective at spotting the slight, periodic dips in a star's brightness over time (its light curve) that can signal a planet passing in front of it.
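Training a CNN is beyond a short example, but the signal it learns to recognize is easy to show. The sketch below assumes a synthetic light curve (a star's normalized brightness sampled over time) with a made-up period, dip depth, and noise level, and it swaps in a classical sliding-window "box" search instead of a neural network: each window is scored by how far its mean brightness falls below the median, and the deepest window in each run crossing a threshold (here, half the assumed dip depth) is reported. All function names and parameter values are hypothetical.

```python
import random


def simulate_light_curve(n_points=500, period=100, duration=6,
                         depth=0.01, noise=0.001, seed=1):
    """Synthetic normalized brightness: flat at 1.0 with periodic box-shaped dips."""
    rng = random.Random(seed)
    return [(1.0 - depth if t % period < duration else 1.0) + rng.gauss(0.0, noise)
            for t in range(n_points)]


def dip_scores(flux, width):
    """How far each window's mean brightness falls below the median brightness."""
    baseline = sorted(flux)[len(flux) // 2]
    return [baseline - sum(flux[s:s + width]) / width
            for s in range(len(flux) - width + 1)]


def detect_transits(flux, width, threshold):
    """Start index of the deepest window in each run of windows dipping past threshold."""
    scores = dip_scores(flux, width)
    hits, run = [], []
    for start, score in enumerate(scores):
        if score > threshold:
            run.append(start)
        elif run:
            hits.append(max(run, key=scores.__getitem__))
            run = []
    if run:
        hits.append(max(run, key=scores.__getitem__))
    return hits


flux = simulate_light_curve()
print(detect_transits(flux, width=6, threshold=0.005))
```

Real survey data are far noisier, with stellar variability and look-alike signals such as eclipsing binaries, which is one reason learned classifiers are used to vet candidates that a simple search like this would flag.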

Machine Learning’s Role in Research Design

Beyond hypothesis generation, machine learning is also transforming the way scientific research is designed and conducted. By analyzing existing data, machine learning algorithms can help researchers formulate more focused and efficient research questions. For example, a model might identify the variables most strongly correlated with a particular outcome, allowing researchers to design experiments that directly test whether those relationships hold up. Machine learning can also optimize experimental designs, reducing the number of experiments needed to obtain statistically significant results. This matters most where experiments are expensive or time-consuming, such as clinical trials or materials synthesis. Imagine a machine learning algorithm that analyzes prior clinical trial data and suggests the most promising dosage and treatment duration for a new drug, narrowing the range of regimens that costly human trials need to test. Efficiency gains like these translate into faster scientific discovery.
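The variable-screening idea can be illustrated without a full model. The sketch below assumes a small table of hypothetical prior-experiment records (the variable names and values are invented) and ranks each variable by the absolute value of its Pearson correlation with the outcome, a simple stand-in for the feature-importance analysis a trained model would provide.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def rank_variables(data, outcome):
    """Rank candidate variables by |correlation| with the outcome, strongest first."""
    scores = {name: pearson(values, outcome) for name, values in data.items()}
    return sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Hypothetical records from six earlier experiments.
data = {
    "temperature": [20, 25, 30, 35, 40, 45],
    "pressure":    [1.0, 1.2, 0.9, 1.1, 1.0, 1.3],
    "catalyst_mg": [5, 4, 6, 3, 7, 2],
}
yield_pct = [41, 47, 55, 60, 68, 73]

for name, r in rank_variables(data, yield_pct):
    print(f"{name:12s} r = {r:+.2f}")
```

A high correlation only nominates a variable for testing; a controlled experiment that varies it deliberately is still what establishes cause and effect.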

Improved Experiment Design and Optimization

Machine learning is revolutionizing scientific research not just by analyzing data, but by actively shaping the experiments that generate it. By optimizing experimental parameters and designing more efficient experiments, ML allows scientists to extract maximum information with minimal resources, accelerating the pace of discovery and improving the reliability of results. This optimization extends beyond simply speeding things up; it allows researchers to explore complex systems and identify optimal conditions that might be missed using traditional methods.

Machine learning algorithms can analyze historical experimental data to identify patterns and relationships that inform the design of future experiments. This predictive capability allows researchers to focus on the most promising avenues of investigation, avoiding costly and time-consuming dead ends. This proactive approach significantly reduces the trial-and-error aspect of scientific research, leading to faster breakthroughs.

Bayesian Optimization for Experimental Design

Bayesian optimization is a technique for finding the best settings of an objective function that is expensive to evaluate and has no known formula. In experimental design, this objective could represent the desired outcome of an experiment, such as maximizing yield or minimizing error. Bayesian optimization fits a probabilistic surrogate model (often a Gaussian process) to the results gathered so far and updates its belief about the function’s shape after each new experiment. An acquisition function, such as expected improvement, then picks the next experiment by weighing regions where the model predicts good results against regions where it is still uncertain. This iterative process steers the search toward the most promising regions of the parameter space while avoiding wasted runs. In materials science, for instance, Bayesian optimization has been used to tune the synthesis parameters of new materials with desired properties, significantly reducing the number of experiments needed to reach optimal performance.
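The loop described above can be sketched in pure Python. This is a minimal illustration, not a production library: it assumes a single hypothetical synthesis parameter scaled to [0, 1], a Gaussian-process surrogate with a fixed squared-exponential kernel (length scale 0.2, chosen arbitrarily), and expected improvement (one common acquisition rule) for choosing the next experiment from a grid of candidates. `run_experiment` is a made-up stand-in for a real measurement.

```python
import math


def rbf(a, b, length=0.2):
    """Squared-exponential (RBF) kernel between two scalar inputs."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def cholesky(matrix):
    """Lower-triangular L with L @ L.T == matrix (matrix must be positive definite)."""
    n = len(matrix)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(matrix[i][i] - s)
            else:
                L[i][j] = (matrix[i][j] - s) / L[j][j]
    return L


def solve_lower(L, b):
    """Solve L x = b by forward substitution."""
    x = []
    for i, row in enumerate(L):
        x.append((b[i] - sum(row[k] * x[k] for k in range(i))) / row[i])
    return x


def solve_upper_t(L, b):
    """Solve L.T x = b by back substitution."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def expected_improvement(mean, std, best):
    """Expected improvement over `best` for a maximization problem."""
    z = (mean - best) / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (mean - best) * cdf + std * pdf


def bayesian_optimize(run_experiment, n_iter=15, jitter=1e-6):
    """Maximize run_experiment(x) for x in [0, 1] using a GP surrogate and EI."""
    grid = [i / 200 for i in range(201)]          # candidate settings
    xs = [0.0, 0.5, 1.0]                          # initial experiments
    ys = [run_experiment(x) for x in xs]
    for _ in range(n_iter):
        # Fit the GP: K = k(X, X) + jitter * I, alpha = K^-1 y via Cholesky.
        K = [[rbf(a, b) + (jitter if i == j else 0.0) for j, b in enumerate(xs)]
             for i, a in enumerate(xs)]
        L = cholesky(K)
        alpha = solve_upper_t(L, solve_lower(L, ys))
        best, best_x, best_ei = max(ys), None, -1.0
        for x in grid:
            if x in xs:
                continue
            k = [rbf(a, x) for a in xs]
            mean = sum(ki * ai for ki, ai in zip(k, alpha))
            v = solve_lower(L, k)
            std = math.sqrt(max(1.0 - sum(vi * vi for vi in v), 1e-12))
            ei = expected_improvement(mean, std, best)
            if ei > best_ei:
                best_x, best_ei = x, ei
        xs.append(best_x)                         # run the chosen experiment
        ys.append(run_experiment(best_x))
    i = max(range(len(ys)), key=ys.__getitem__)
    return xs[i], ys[i]


def run_experiment(x):
    """Hypothetical stand-in for a lab measurement: yield peaks at x = 0.63."""
    return math.exp(-((x - 0.63) / 0.15) ** 2)


print(bayesian_optimize(run_experiment))
```

The Cholesky factorization is computed once per iteration and reused for every candidate, which keeps each prediction cheap; real tools also fit the kernel's hyperparameters to the data rather than fixing them.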

Genetic Algorithms in Experimental Design

Genetic algorithms (GAs) provide another approach to experimental design optimization. Inspired by natural selection, GAs maintain a population of experimental designs, each represented as a “chromosome” encoding the values of experimental parameters. Each design is evaluated on its performance, and a new generation is created through selection, crossover, and mutation. Repeated over many generations, this evolutionary process improves the designs and typically converges toward near-optimal solutions, although it cannot guarantee finding the global optimum. A notable application is drug discovery, where GAs can be used to optimize the chemical structure of drug candidates in search of more effective and less toxic compounds.
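A compact version of that evolutionary loop is sketched below. It assumes a hypothetical two-parameter experiment (temperature and pH, both scaled to [0, 1]) whose "yield" comes from a made-up function rather than a lab run. Tournament selection, one-point crossover, Gaussian mutation, and elitism are common design choices, not the only ones.

```python
import random


def run_experiment(params):
    """Hypothetical objective: 'yield' is highest at temperature=0.7, pH=0.3."""
    temperature, ph = params
    return 1.0 - (temperature - 0.7) ** 2 - (ph - 0.3) ** 2


def genetic_search(fitness, n_genes=2, pop_size=30, generations=40,
                   mutation_rate=0.2, mutation_scale=0.1, seed=0):
    """Evolve chromosomes (lists of parameters in [0, 1]) to maximize fitness.

    Assumes n_genes >= 2 so that one-point crossover has a cut point."""
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(n_genes)] for _ in range(pop_size)]

    for _ in range(generations):
        scored = [(fitness(ind), ind) for ind in population]

        def select():
            # Tournament selection: the fitter of two random designs wins.
            a, b = rng.sample(scored, 2)
            return a[1] if a[0] >= b[0] else b[1]

        # Elitism: carry the best design into the next generation unchanged.
        children = [max(scored, key=lambda s: s[0])[1][:]]
        while len(children) < pop_size:
            p1, p2 = select(), select()
            cut = rng.randrange(1, n_genes)          # one-point crossover
            child = p1[:cut] + p2[cut:]
            # Gaussian mutation, clipped to stay inside [0, 1].
            child = [min(1.0, max(0.0, g + rng.gauss(0.0, mutation_scale)))
                     if rng.random() < mutation_rate else g
                     for g in child]
            children.append(child)
        population = children

    return max(population, key=fitness)


best = genetic_search(run_experiment)
print([round(g, 2) for g in best], round(run_experiment(best), 4))
```

Elitism guarantees the best design found so far is never lost, while mutation keeps the population exploring nearby settings.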

Comparison of Machine Learning Techniques for Experimental Design Optimization

Several machine learning techniques are employed for experimental design optimization, each with its strengths and weaknesses.

- Bayesian Optimization: Excellent for situations with expensive or time-consuming experiments, as it efficiently explores the parameter space. However, it can be computationally demanding for high-dimensional problems.

- Genetic Algorithms: Robust and can handle complex, non-linear relationships between parameters and outcomes. However, they can be less efficient than Bayesian optimization for simpler problems and may require careful parameter tuning.

- Reinforcement Learning: Particularly useful for adaptive experimental design, where the experiment can be dynamically adjusted based on the observed results. This approach is more complex to implement but can lead to highly efficient exploration of the parameter space.
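Reinforcement learning covers a wide range of methods; its simplest setting, the multi-armed bandit, already captures the adaptive idea. In the sketch below, each "arm" is a hypothetical experimental condition with an unknown success rate, and the UCB1 rule picks the next condition from the results so far, gradually concentrating trials on the most promising one. The success rates and the simulated `run_trial` function are invented for illustration.

```python
import math
import random


def ucb1(run_trial, n_arms, rounds):
    """Choose which condition to test each round with the UCB1 rule.

    Returns how often each condition was tested and its total reward."""
    counts = [0] * n_arms
    totals = [0.0] * n_arms
    for t in range(1, rounds + 1):
        if t <= n_arms:
            arm = t - 1                      # try every condition once first
        else:
            # Mean reward so far plus a bonus that shrinks as a condition is re-tested.
            arm = max(range(n_arms),
                      key=lambda i: totals[i] / counts[i]
                      + math.sqrt(2.0 * math.log(t) / counts[i]))
        totals[arm] += run_trial(arm)
        counts[arm] += 1
    return counts, totals


# Hypothetical: three reaction conditions with success rates unknown to the learner.
rng = random.Random(42)
SUCCESS_RATE = [0.2, 0.5, 0.8]


def run_trial(condition):
    """Simulated experiment: 1.0 on success, 0.0 on failure."""
    return 1.0 if rng.random() < SUCCESS_RATE[condition] else 0.0


counts, totals = ucb1(run_trial, n_arms=3, rounds=2000)
print(counts)
```

The exploration bonus keeps rarely tested conditions in play, so an unlucky early result does not permanently rule out a good condition.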

Examples of Improved Accuracy and Reliability

The application of machine learning to experimental design has led to measurable improvements in the accuracy and reliability of experiments across diverse fields. In high-throughput drug screening, for example, machine learning algorithms can optimize experimental conditions to improve the detection of active compounds, leading to more accurate identification of potential drug candidates. In materials science, machine learning lets researchers search vast parameter and composition spaces far more efficiently than exhaustive screening, helping to identify candidate materials with enhanced properties, such as promising superconductors. These examples highlight the potential of machine learning to accelerate scientific discovery and raise the quality of research.

Automation of Repetitive Tasks

Scientists, much like artists, often find themselves spending significant portions of their time on tasks that, while necessary, don’t directly contribute to the core creative and analytical aspects of their research. These repetitive tasks, if automated, could free up valuable time and resources, accelerating the pace of scientific discovery. Machine learning offers a powerful toolkit for precisely this purpose.

The automation of repetitive tasks in scientific research is revolutionizing the field, boosting efficiency and allowing researchers to focus on higher-level thinking and problem-solving. This isn’t just about saving time; it’s about fundamentally changing the way research is conducted, enabling larger-scale studies and more complex analyses that were previously impractical.

Machine Learning Tools for Automating Repetitive Tasks

Several machine learning tools and techniques are ideally suited for automating common repetitive tasks in scientific research. The choice of tool often depends on the specific task and the nature of the data involved.

- Data Cleaning: Anomaly detection algorithms (e.g., Isolation Forest, One-Class SVM) can identify and flag outliers or erroneous data points in large datasets. These algorithms build a model of what typical data look like (Isolation Forest, for instance, flags points that random partitioning isolates unusually quickly) and then flag points that deviate significantly. Automating this step greatly reduces the time spent manually cleaning datasets, a task that is notoriously time-consuming and prone to human error.

- Image Processing: Convolutional Neural Networks (CNNs) are exceptionally effective for automating image analysis tasks, such as cell counting in microscopy images, identifying features in satellite imagery, or classifying medical scans. For example, a CNN could be trained to automatically identify cancerous cells in a biopsy image, drastically speeding up diagnosis and potentially improving accuracy.

- Literature Review: Natural Language Processing (NLP) techniques, including topic modeling and text summarization, can automate the process of sifting through vast amounts of scientific literature. Tools can be used to identify relevant papers, extract key findings, and even generate summaries of the current state of knowledge in a particular area. This significantly reduces the time spent on literature reviews, enabling researchers to stay up-to-date with the latest advancements in their field.
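Isolation Forest and One-Class SVM are available in libraries such as scikit-learn. To keep the idea visible without dependencies, the data-cleaning sketch below swaps in a simpler, non-learned technique, the median/MAD-based "modified z-score", which flags points far from the bulk of the data. The readings and the 3.5 cutoff are illustrative assumptions.

```python
import statistics


def flag_outliers(values, cutoff=3.5):
    """Flag values whose modified z-score (median/MAD based) exceeds cutoff."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        # Most values are identical to the median; flag anything different.
        return [v != med for v in values]
    return [abs(0.6745 * (v - med) / mad) > cutoff for v in values]


# Hypothetical sensor readings with two obvious glitches.
readings = [20.1, 19.8, 20.3, 20.0, 85.0, 19.9, 20.2, -3.0]
flags = flag_outliers(readings)
print([v for v, bad in zip(readings, flags) if bad])  # [85.0, -3.0]
```

The median and MAD are used instead of the mean and standard deviation because a single extreme glitch can inflate those enough to hide itself.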

Impact on Researcher Productivity and Efficiency

Using machine learning to automate repetitive tasks has a profound effect on researcher productivity and on the overall efficiency of scientific research. By freeing researchers from tedious manual tasks, automation allows them to focus on more intellectually stimulating aspects of their work, such as designing experiments, analyzing complex data, and formulating new hypotheses. This increased efficiency translates into faster research cycles, quicker dissemination of findings, and ultimately, accelerated progress across scientific fields. For instance, a study published in Nature showed that the automation of image analysis in a biological experiment reduced the time required for data processing by 75%, allowing the researchers to complete their study significantly faster than they would have otherwise. This is just one example of how machine learning is transforming the landscape of scientific research.

New Scientific Instruments and Methodologies

Machine learning’s impact on scientific research is undeniable, accelerating breakthroughs across fields. This is especially important in tackling global health crises, as seen in its contributions to vaccine research and epidemiological modeling. Ultimately, machine learning’s ability to process vast datasets promises to reshape future scientific discoveries and pandemic responses.

Machine learning is no longer just a tool for analyzing existing data; it’s actively shaping the very instruments and methodologies scientists use to gather that data. This integration is leading to a new era of scientific discovery, where instruments are smarter, experiments are more efficient, and the potential for breakthroughs is amplified.

Machine learning algorithms are being woven into the fabric of new scientific instruments, enhancing their capabilities and enabling entirely new approaches to research. This isn’t simply about automating data analysis; it’s about building intelligence directly into the instruments themselves, allowing them to adapt, learn, and optimize in real-time. This paradigm shift allows for more sophisticated data acquisition and analysis, leading to more accurate and comprehensive results.

Machine Learning in Instrument Design

The integration of machine learning into scientific instrument design involves using algorithms to optimize instrument parameters, improve signal processing, and even guide the design process itself. For example, machine learning can be used to design more sensitive detectors, improve the resolution of microscopes, or optimize the performance of spectrometers. Consider the development of a new type of mass spectrometer. Instead of relying on pre-programmed settings, its machine learning component would continuously analyze the incoming data, adjusting parameters such as voltage and magnetic field strength to optimize the separation and detection of different ions. This dynamic adjustment could increase sensitivity and accuracy, potentially allowing the detection of trace amounts of molecules that fixed settings would miss.

Advanced Imaging Techniques Enabled by Machine Learning

Machine learning is revolutionizing imaging techniques across various scientific disciplines. In medical imaging, for instance, machine learning algorithms are used to enhance image resolution, detect subtle anomalies, and even predict disease progression. In astronomy, machine learning helps astronomers sift through vast amounts of telescope data, identifying potential exoplanets or analyzing the composition of distant galaxies. A prime example is the use of deep learning in microscopy, where algorithms can automatically segment cells, identify specific organelles, and quantify their characteristics with high precision and far faster than human analysts. This allows researchers to analyze significantly larger datasets and extract more meaningful information from complex images.
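Deep-learning segmentation is too large for a short example, but the classical pipeline it improves on (threshold the image, then count connected bright regions) fits in a few lines. This swapped-in, non-learned sketch assumes a tiny hypothetical grayscale image stored as a list of lists; real microscopy images would be loaded with an imaging library, and touching or faint cells are exactly where learned segmentation outperforms a fixed threshold.

```python
from collections import deque


def count_cells(image, threshold):
    """Threshold a grayscale image, then measure each 4-connected bright region.

    Returns a list of region sizes in pixels, in scan order."""
    rows, cols = len(image), len(image[0])
    mask = [[px > threshold for px in row] for row in image]
    seen = [[False] * cols for _ in range(rows)]
    sizes = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                # Breadth-first flood fill over this region.
                size, queue = 0, deque([(r, c)])
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    size += 1
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                sizes.append(size)
    return sizes


image = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 9, 9, 0, 0, 0, 0],
    [0, 9, 9, 0, 0, 8, 0],
    [0, 0, 0, 0, 0, 8, 0],
    [0, 0, 7, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
sizes = count_cells(image, threshold=5)
print(len(sizes), sizes)  # 3 [4, 2, 1]
```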

Robotic Experimentation Guided by Machine Learning

The automation of scientific experiments using robotics is significantly enhanced by machine learning. Instead of relying on pre-programmed sequences, robotic systems can now adapt and optimize their actions in real-time based on the data they collect. This is particularly useful in high-throughput screening experiments, where thousands of different conditions need to be tested. Machine learning algorithms can analyze the results of each experiment, predict the outcome of future experiments, and even design new experiments to maximize the efficiency of the process. For example, in drug discovery, robotic systems guided by machine learning algorithms can automate the synthesis and testing of thousands of potential drug candidates, significantly accelerating the drug development process.

Hypothetical Scenario: A Machine Learning-Enhanced Spectrometer

Imagine a novel spectrometer designed for analyzing the chemical composition of planetary atmospheres. This instrument, called the “Adaptive Atmospheric Analyzer” (AAA), incorporates a machine learning algorithm trained on a vast database of spectral data from known planetary atmospheres. The AAA’s unique functionality lies in its ability to autonomously adjust its parameters – wavelength range, integration time, and data acquisition strategy – based on the incoming data. If it detects an unusual spectral signature, the algorithm will dynamically adjust the instrument’s settings to gather more detailed data about that specific signature. The AAA’s capabilities extend beyond simple analysis; it can also predict the presence of specific molecules based on partial spectral information, allowing for more efficient and targeted data acquisition. The machine learning component continuously learns and improves its predictive capabilities, becoming increasingly accurate over time as it analyzes more data. This self-learning aspect makes the AAA an invaluable tool for exploring unknown planetary atmospheres and discovering new molecules.
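Because the AAA is hypothetical, code can only illustrate the decision logic the paragraph describes. The sketch below assumes the trained model has been reduced to a nearest-match lookup against a small library of made-up reference spectra (a deliberate simplification, not a learned model) and that the instrument is shot-noise limited; `plan_followup`, the spectra, and all numbers are invented.

```python
def closest_reference(spectrum, library):
    """Name of the library spectrum with the smallest sum of squared differences."""
    return min(library, key=lambda name: sum(
        (s - r) ** 2 for s, r in zip(spectrum, library[name])))


def plan_followup(spectrum, library, noise, k=5.0, base_time=1.0, snr_gain=3.0):
    """Hypothetical AAA decision step.

    1. Match the observation to the closest known atmosphere.
    2. Flag channels that deviate from it by more than k noise levels.
    3. Re-observe flagged channels longer: for shot-noise-limited data,
       SNR grows with the square root of integration time, so a snr_gain-fold
       SNR improvement needs snr_gain**2 times the exposure.
    """
    name = closest_reference(spectrum, library)
    reference = library[name]
    flagged = [i for i, (s, r) in enumerate(zip(spectrum, reference))
               if abs(s - r) > k * noise]
    followup_time = base_time * snr_gain ** 2 if flagged else 0.0
    return name, flagged, followup_time


# Made-up, normalized transmission values in five spectral channels.
library = {
    "co2_rich":     [1.0, 0.4, 0.9, 1.0, 0.7],
    "methane_rich": [0.8, 1.0, 0.5, 0.6, 1.0],
}
observed = [1.0, 0.41, 0.9, 0.7, 0.69]
print(plan_followup(observed, library, noise=0.02))  # ('co2_rich', [3], 9.0)
```

Spending extra integration time only on the flagged channel is what makes this kind of targeted acquisition cheaper than re-observing the whole spectrum at higher sensitivity.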

Challenges and Limitations

The transformative potential of machine learning in science is undeniable, yet its application isn’t without hurdles. Successfully integrating these powerful tools requires careful consideration of inherent biases, ethical implications, and the need for robust interdisciplinary collaboration. Ignoring these challenges risks undermining the reliability and trustworthiness of scientific advancements.

Potential biases embedded within machine learning algorithms pose a significant threat to the validity of scientific findings. These biases can stem from several sources, including biased training data, flawed algorithm design, and even the unconscious biases of the researchers developing and deploying the models. For instance, a machine learning model trained on a dataset predominantly representing one demographic group might produce inaccurate or discriminatory results when applied to other groups. This could lead to flawed conclusions in medical research, for example, if a model trained primarily on data from a specific ethnic group is used to predict disease risk across diverse populations. The resulting misinterpretations could have serious consequences for healthcare and public policy.

Bias in Machine Learning Algorithms and Their Impact on Scientific Reliability

The reliability of scientific findings heavily depends on the objectivity and unbiased nature of the methods used. Machine learning, while powerful, is susceptible to inheriting and amplifying biases present in the data it’s trained on. This can lead to skewed results and inaccurate predictions, ultimately compromising the integrity of scientific research. Consider a scenario where a machine learning model is used to analyze astronomical data to identify potential exoplanets. If the training data contains biases related to the types of stars observed or the methods used for data collection, the model might incorrectly classify certain celestial objects, leading to false positives or false negatives in the identification of exoplanets. The impact of such biases extends beyond simply inaccurate results; it can lead to the misallocation of resources and the pursuit of unproductive research avenues.
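One practical safeguard is to report a model's performance separately for each subgroup rather than only in aggregate, since an acceptable overall score can hide poor performance on an under-represented group. The sketch below assumes hypothetical labels, predictions, and group tags; the same check applies to groups of stars, instruments, or observing conditions in the exoplanet example.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each subgroup label."""
    correct, total = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical labels: group "B" has fewer examples than group "A".
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
groups = ["A"] * 6 + ["B"] * 4

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(overall)                                    # 0.7
print(accuracy_by_group(y_true, y_pred, groups))  # {'A': 0.8333333333333334, 'B': 0.5}
```

Here the aggregate accuracy of 0.7 conceals that the model is no better than a coin flip on group B.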

Ethical Considerations in Machine Learning for Scientific Research

The ethical implications of using machine learning in scientific research are multifaceted and demand careful consideration. Data privacy is a paramount concern, particularly when dealing with sensitive information like patient medical records or genomic data. Ensuring the anonymity and security of this data is crucial to maintain ethical standards and comply with relevant regulations. Moreover, intellectual property rights related to algorithms, datasets, and the resulting scientific discoveries must be addressed transparently and fairly. The question of ownership and access to these resources is vital, especially when collaborations involve multiple institutions and researchers. Failing to address these ethical concerns can lead to legal challenges, reputational damage, and a loss of public trust in scientific research.

Interdisciplinary Collaboration in Machine Learning for Scientific Research

Successfully navigating the challenges of implementing machine learning in scientific research necessitates close collaboration between scientists and computer scientists. Scientists bring their domain expertise and understanding of the research questions, while computer scientists provide the technical skills and knowledge to develop and deploy appropriate machine learning models. This interdisciplinary approach ensures that the models are not only technically sound but also relevant and appropriate for the specific scientific context. Effective communication and mutual understanding are crucial for this collaboration to succeed. A joint effort allows for the development of robust, reliable, and ethically sound machine learning solutions, ultimately accelerating scientific discovery while mitigating potential risks.

Conclusion

The integration of machine learning into scientific research is undeniably transforming how we explore the universe, from the smallest molecules to the largest galaxies. While challenges remain—ethical considerations, potential biases, and the need for interdisciplinary collaboration—the potential rewards are too significant to ignore. The future of scientific discovery is intertwined with the power of machine learning, promising a future where breakthroughs happen faster, smarter, and more efficiently than ever before. Get ready for a scientific revolution, powered by algorithms.