Edge computing: the next big thing in internet speed? Its potential to make the internet faster is no longer a futuristic fantasy. It’s rapidly changing how we experience the online world, bringing processing power closer to users and dramatically reducing the lag we all hate. Think lightning-fast streaming, seamless gaming, and ultra-responsive apps, all made possible by moving data processing from distant servers to the edge of the network.

This shift fundamentally alters how data travels, significantly impacting latency. Instead of data trekking across continents to a distant cloud server, it’s processed locally, resulting in a speed boost that’s tangible and transformative. We’ll delve into the specifics of how this works, exploring the tech behind it, the challenges faced, and the exciting future of edge computing in making the internet faster for everyone.

Defining Edge Computing and its Relevance to Internet Speed

Forget waiting forever for your video to buffer – edge computing is changing the game. It’s all about bringing data processing closer to the user, drastically reducing the distance information needs to travel and, consequently, speeding up your internet experience. Think of it as setting up mini-data centers closer to where you are, rather than relying solely on giant, distant cloud servers.

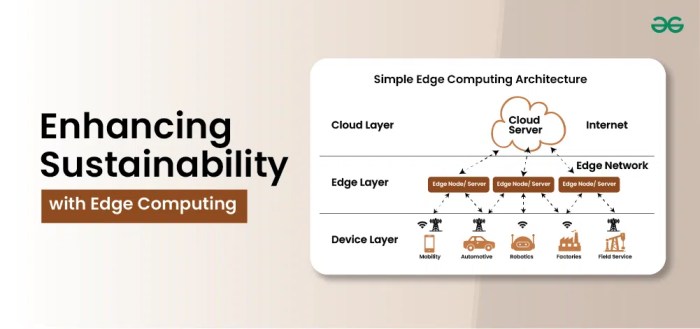

Edge computing fundamentally shifts the location of data processing. Instead of sending all your data to a centralized cloud server (often thousands of miles away), edge computing processes it closer to the source – at the “edge” of the network. This could be a local server, a router, or even a device itself. This architectural shift is key to its impact on internet speed.

Edge Computing Architecture and Principles

Edge computing relies on a distributed network architecture. Data is processed at the edge, often using smaller, specialized servers or gateways strategically placed near users or data sources. This minimizes the reliance on long-distance data transmission to remote cloud servers. Key principles include proximity to data sources, reduced latency, and efficient bandwidth utilization. Imagine it like having a local library instead of always needing to travel to a distant national archive for information.

Edge Computing vs. Cloud Computing: A Location-Based Comparison

The core difference between edge and cloud computing lies in where data is processed. Cloud computing centralizes data processing in large, remote data centers. This approach is efficient for large-scale data storage and processing, but latency increases with distance. Edge computing, on the other hand, distributes data processing closer to the source, reducing latency and improving response times. Think of it as the difference between ordering a pizza from a restaurant across town versus getting it delivered from a kitchen in the next building.

Latency Comparison: Cloud vs. Edge

The latency experienced in cloud-based applications is typically higher than in edge-based applications. This is because data needs to travel longer distances to reach and return from the central cloud servers. For example, streaming a video from a cloud server located across the country might result in noticeable buffering, whereas an edge-based solution could stream the same video seamlessly. The difference can be significant, particularly for applications requiring real-time responsiveness, such as gaming or augmented reality.
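To put rough numbers on the distance effect, the short Python sketch below estimates round-trip propagation delay alone. It assumes light in optical fiber covers about 200 km per millisecond (roughly two-thirds of its speed in a vacuum). The 4,000 km and 50 km distances are illustrative, not measurements. Real round trips are longer, because routing, queuing, and server processing add delay on top of this physical floor.

```python
# Assumption: light in optical fiber travels roughly 200,000 km/s,
# i.e. about 200 km per millisecond (about two-thirds of c in vacuum).
FIBER_KM_PER_MS = 200.0


def propagation_rtt_ms(distance_km: float, path_stretch: float = 1.0) -> float:
    """Round-trip propagation delay in ms for a given one-way distance.

    path_stretch > 1 models cable routes that are longer than the straight
    line between endpoints (a hypothetical knob; real routes vary).
    """
    one_way_ms = distance_km * path_stretch / FIBER_KM_PER_MS
    return 2 * one_way_ms


# Hypothetical distances: a cloud region across the country vs. a metro edge node.
cloud_rtt = propagation_rtt_ms(4000)
edge_rtt = propagation_rtt_ms(50)
print(f"cloud: {cloud_rtt:.1f} ms, edge: {edge_rtt:.1f} ms")  # cloud: 40.0 ms, edge: 0.5 ms
```

Even before any processing happens, the distant server costs tens of milliseconds per round trip, and interactive applications pay that cost on every exchange.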

Real-World Applications of Edge Computing for Speed Enhancement

Edge computing is already making a real difference in various sectors. Autonomous vehicles rely on edge computing to process sensor data in real-time, enabling quick reactions and avoiding accidents. Similarly, smart cities use edge computing to manage traffic flow and optimize resource allocation, resulting in faster and more efficient transportation. In the entertainment industry, edge computing enhances the streaming experience by reducing buffering and improving video quality. These are just a few examples demonstrating how edge computing is actively contributing to a faster and more responsive internet experience.

How Edge Computing Reduces Latency and Improves Speed

Imagine trying to order a pizza from a restaurant miles away. The phone call takes time, the order needs to travel back and forth, and by the time your pizza arrives, it might be cold. Edge computing works similarly, but instead of pizza, it’s data, and instead of miles, it’s the distance your data travels across the internet. By bringing processing power closer to the user, edge computing drastically cuts down on this travel time, leading to a significantly faster internet experience.

Edge computing significantly reduces latency and improves internet speed by minimizing the distance data travels. Traditional cloud computing relies on central data centers often located far from users. This means data needs to journey long distances to be processed and then back to the user, resulting in delays. Edge computing, however, places servers closer to the source of data and the end-user, reducing this travel time and resulting in faster response times.

Proximity to Data Sources and Internet Speed

The closer the server processing your request is to you, the faster the response. Think of it like this: requesting a file from a server on the other side of the world versus requesting it from a server in your city. The latter will obviously be much quicker because the data has a shorter distance to travel. This proximity significantly minimizes the time it takes for data to travel, reducing latency and increasing perceived internet speed. The impact is particularly noticeable in applications requiring real-time responses, such as online gaming, video conferencing, and IoT device management.

The Role of Edge Servers in Reducing Data Travel Distance

Edge servers act as mini data centers strategically located closer to users. Instead of sending all data to a distant central server, requests are handled by these nearby edge servers. This significantly reduces the physical distance data must travel, resulting in lower latency and faster response times. For example, a user streaming a video might receive the data from an edge server located within their city or region, instead of a distant cloud data center. This drastically reduces the time it takes for the video to buffer and play smoothly.
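How does a request find its nearby edge server? Real content delivery networks typically steer users with DNS or anycast routing based on measured network conditions. As a simplified sketch, assuming geography is a reasonable proxy for latency, the Python below picks the edge node with the shortest great-circle (haversine) distance to the user. The node names and coordinates are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass(frozen=True)
class EdgeNode:
    name: str
    lat: float  # degrees
    lon: float  # degrees


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_node(user_lat: float, user_lon: float, nodes: Sequence[EdgeNode]) -> EdgeNode:
    """Pick the node with the shortest great-circle distance to the user."""
    if not nodes:
        raise ValueError("no edge nodes available")
    return min(nodes, key=lambda n: haversine_km(user_lat, user_lon, n.lat, n.lon))


# Hypothetical node list; real deployments publish their own locations.
NODES = [
    EdgeNode("edge-paris", 48.8566, 2.3522),
    EdgeNode("edge-frankfurt", 50.1109, 8.6821),
    EdgeNode("cloud-us-east", 38.9072, -77.0369),
]

print(nearest_node(45.7640, 4.8357, NODES).name)  # user in Lyon -> edge-paris
```

A production system would usually prefer measured round-trip times over raw distance, since cable routes and congestion can make the geographically nearest node slower.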

Key Factors Contributing to Latency Reduction Through Edge Computing

Several key factors contribute to the latency reduction achieved through edge computing. These include the reduced distance data travels, the optimized network infrastructure often employed near edge servers, and the reduced load on central data centers. The closer proximity allows for faster data transmission, while the optimized network infrastructure minimizes bottlenecks. The reduced load on central servers also means that those servers can respond more quickly to other requests, further enhancing overall system performance.
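The third factor, lighter load on central servers, can be illustrated with a textbook queueing model. The sketch below assumes each server behaves like an M/M/1 queue (random arrivals, a single server), where the mean time a request spends in the system is W = 1/(μ − λ) for arrival rate λ and service rate μ. The 1,000 requests-per-second capacity is a hypothetical figure. The point is qualitative: as a server nears full utilization, delay grows sharply, so shifting part of the traffic to edge nodes can cut response times for the requests that remain.

```python
def mm1_response_time_ms(arrival_rps: float, service_rps: float) -> float:
    """Mean time a request spends in an M/M/1 system, W = 1 / (mu - lambda), in ms.

    arrival_rps is lambda and service_rps is mu, both in requests per second.
    """
    if arrival_rps < 0 or service_rps <= 0:
        raise ValueError("arrival rate must be >= 0 and service rate > 0")
    if arrival_rps >= service_rps:
        raise ValueError("unstable queue: arrival rate must be below service rate")
    return 1000.0 / (service_rps - arrival_rps)


# Hypothetical central server that can serve 1,000 requests/s.
MU = 1000.0
print(mm1_response_time_ms(950.0, MU))  # 95% utilization -> 20.0 ms
print(mm1_response_time_ms(500.0, MU))  # 450 req/s moved to edge nodes -> 2.0 ms
```

The M/M/1 model is a simplification; real servers have many workers and bursty traffic, but the same "delay explodes near capacity" behavior shows up in practice.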

Hypothetical Scenario Demonstrating Speed Improvement

Let’s imagine a gamer playing an online game. Without edge computing, their actions (e.g., firing a weapon) must travel to a distant server, be processed, and then the result (e.g., enemy damage) must travel back. This round-trip time can be significant, leading to noticeable lag. With edge computing, the game server is located closer to the gamer, significantly reducing the travel time. The result? A smoother, more responsive gaming experience with minimal lag, allowing for faster reaction times and improved gameplay. In this scenario, the latency saved might range from tens of milliseconds to, for a player who would otherwise connect to a server on another continent, well over a hundred milliseconds, a considerable improvement in a fast-paced online game.

Technological Components Enabling Faster Internet via Edge Computing

Edge computing’s promise of faster internet speeds isn’t just hype; it relies on a sophisticated interplay of technologies working in concert. The speed improvements aren’t achieved by a single component, but rather a synergistic effect of several key players, each contributing its unique strengths to the overall performance boost. Let’s dive into the crucial elements.

The Role of 5G and High-Bandwidth Networks

5G and other high-bandwidth networks are the backbone of effective edge computing. Their high speeds and low latency are essential for transmitting data quickly between edge servers and end-users. Imagine trying to race a sports car on a bumpy, potholed road: it simply wouldn’t work. Similarly, edge computing needs the smooth, fast highway that 5G provides to transfer data efficiently. Without the robust infrastructure offered by these networks, the benefits of edge computing would be severely limited. The increased bandwidth allows for the seamless streaming of high-definition video, real-time gaming, and other bandwidth-intensive applications, all hallmarks of a truly fast internet experience. The low latency, a crucial aspect of 5G, minimizes delays, ensuring a responsive and snappy online experience.
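Bandwidth and latency affect speed in different ways, and a back-of-the-envelope model makes that visible. The Python sketch below estimates the time to fetch one object as one round trip plus the time to push its bytes through the link. It deliberately ignores connection setup, TCP slow start, and packet loss, and the link speeds and round-trip times are hypothetical, not measured 4G or 5G figures.

```python
def transfer_time_ms(size_mb: float, bandwidth_mbps: float, rtt_ms: float) -> float:
    """Rough time to fetch one object: one round trip plus serialization time.

    size_mb is in megabytes and bandwidth_mbps in megabits per second.
    Ignores connection setup, TCP slow start and packet loss.
    """
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth must be positive")
    serialization_ms = size_mb * 8 * 1000 / bandwidth_mbps  # MB -> Mb, seconds -> ms
    return rtt_ms + serialization_ms


# Hypothetical links; illustrative numbers only.
segment_mb = 2.0  # e.g. one video segment
print(transfer_time_ms(segment_mb, 20, 50))   # slower link, distant server -> 850.0
print(transfer_time_ms(segment_mb, 400, 10))  # faster link, nearby edge -> 50.0
```

For a tiny 10 KB response (0.01 MB) on the faster link, serialization takes only about 0.2 ms, so the 10 ms round trip dominates. That is why a nearby edge server still matters on a very fast network.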

Edge Device Functionalities and Speed Enhancement

Edge devices, such as smartphones, IoT sensors, and smart speakers, play a critical role in accelerating internet speed. These devices act as the first point of contact for data processing, reducing the reliance on distant cloud servers. Instead of sending all data to a far-off server for processing and then receiving the processed information back, edge devices can perform initial computations locally, significantly reducing the distance data needs to travel. For example, a smart home security system can process basic motion detection locally on the edge device, sending only relevant alerts to the cloud, thus conserving bandwidth and reducing latency. This local processing capability contributes significantly to faster response times and a more fluid online experience. The growing number of edge devices equipped with increasingly powerful processors is fueling this speed enhancement even further.
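Here is a minimal sketch of the smart-camera idea in Python, using simple frame differencing, one basic motion-detection technique (commercial systems use more robust methods). The frame format and both thresholds are assumptions chosen for illustration: frames are flattened lists of grayscale values, and a frame counts as motion when at least 10% of its pixels change noticeably.

```python
from typing import Iterable, Iterator, List, Optional, Tuple

# Hypothetical frame format: a flattened list of grayscale intensities (0-255).
Frame = List[int]


def changed_fraction(prev: Frame, curr: Frame, pixel_threshold: int = 25) -> float:
    """Fraction of pixels whose intensity changed by more than pixel_threshold."""
    if len(prev) != len(curr):
        raise ValueError("frames must have the same size")
    if not curr:
        return 0.0
    changed = sum(1 for a, b in zip(prev, curr) if abs(a - b) > pixel_threshold)
    return changed / len(curr)


def motion_alerts(frames: Iterable[Frame], motion_threshold: float = 0.1) -> Iterator[Tuple[int, float]]:
    """Yield (frame_index, changed_fraction) only for frames that show motion.

    Frames without motion are dropped on the device; only the yielded
    alerts would be sent upstream to the cloud.
    """
    prev: Optional[Frame] = None
    for index, frame in enumerate(frames):
        if prev is not None:
            fraction = changed_fraction(prev, frame)
            if fraction >= motion_threshold:
                yield index, fraction
        prev = frame


still = [10] * 100
person = [10] * 80 + [200] * 20  # 20% of pixels become brighter
print(list(motion_alerts([still, still, person, person, still])))  # [(2, 0.2), (4, 0.2)]
```

Of the five frames, only two alerts leave the device; the raw footage never has to travel to the cloud.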

Efficient Data Caching Mechanisms at the Edge

Efficient data caching is another key component. Edge servers store frequently accessed data locally, reducing the need to fetch it from distant data centers. Think of it like a well-stocked local library – instead of having to order every book from a distant national library, your local library has many popular titles readily available. This significantly reduces the time it takes to access information. This caching mechanism becomes particularly crucial for applications like video streaming, where repeated requests for the same data segments can be significantly optimized. This approach minimizes delays and buffering, resulting in smoother and faster streaming experiences. Sophisticated algorithms are employed to determine which data to cache, prioritizing frequently accessed content and ensuring efficient use of storage space at the edge.
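The text doesn’t name a specific caching algorithm, so the sketch below uses least recently used (LRU) eviction, a common and simple policy. Note that LRU favors recently requested items rather than counting how often each is requested; frequency-based policies such as LFU are an alternative. The class name and the `fetch_from_origin` callback are hypothetical stand-ins for a real edge server and its origin data center.

```python
from collections import OrderedDict
from typing import Any, Callable, Hashable, List


class EdgeLRUCache:
    """Fixed-capacity LRU cache that falls back to the origin on a miss."""

    def __init__(self, capacity: int, fetch_from_origin: Callable[[Hashable], Any]):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin
        self._store: "OrderedDict[Hashable, Any]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Any:
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        value = self.fetch_from_origin(key)  # slow path: go back to the data center
        self._store[key] = value
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict the least recently used entry
        return value


origin_requests: List[str] = []


def fetch_segment(key: str) -> bytes:
    origin_requests.append(key)  # stand-in for a slow fetch from the origin
    return f"<video bytes for {key}>".encode()


cache = EdgeLRUCache(capacity=2, fetch_from_origin=fetch_segment)
for segment in ["seg1", "seg2", "seg1", "seg1", "seg3", "seg1"]:
    cache.get(segment)
print(cache.hits, cache.misses, origin_requests)  # 3 3 ['seg1', 'seg2', 'seg3']
```

Half of the requests are served from the edge without touching the origin, and the popular `seg1` survives the eviction of `seg2`.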

Comparison of Edge Computing Technologies and Their Impact on Speed

| Technology | Where Data Is Processed | Latency Reduction vs. Cloud | Speed Improvement |
|---|---|---|---|
| Fog Computing | Network edge, closer to end-users than the cloud | Significant | High, especially for IoT applications |
| Multi-access Edge Computing (MEC, originally “Mobile Edge Computing”) | Mobile network base stations and other distributed edge nodes across access networks | High to very high | Excellent for mobile and real-time services; supports diverse applications and high user density |
| Cloud Computing (for comparison) | Centralized data centers | None (baseline) | Lower than edge computing, especially for geographically dispersed users |

Challenges and Limitations of Edge Computing for Speed Enhancement

Edge computing, while promising lightning-fast internet speeds, isn’t without its hurdles. The journey to a truly edge-powered internet is paved with challenges related to infrastructure limitations, security concerns, and significant cost implications. Let’s delve into these critical aspects.

Bottlenecks and Limitations in Edge Computing Infrastructure

The effectiveness of edge computing hinges on a robust and widespread network of edge servers. However, deploying and maintaining this infrastructure presents significant challenges. Geographic limitations, particularly in sparsely populated areas or regions with underdeveloped infrastructure, can hinder the deployment of edge nodes. Furthermore, ensuring consistent bandwidth and connectivity across all edge locations is crucial for optimal performance, yet this can be expensive and technically demanding. Another key limitation is the capacity of individual edge servers. Handling massive amounts of data processing and storage at the edge requires powerful hardware, which can be costly and may require regular upgrades to keep pace with technological advancements and increasing data volumes. Think of it like building a high-speed highway: you need enough lanes (bandwidth) and well-maintained roads (infrastructure) to handle the traffic (data). A single bottleneck can slow down the entire system.

Security Concerns Related to Data Processing and Storage at the Edge

Distributing data processing and storage closer to the end-users inherently increases the attack surface. The sheer number of edge nodes introduces vulnerabilities that are difficult to manage centrally. Protecting sensitive data stored and processed at these distributed locations requires robust security measures, including encryption, access control, and regular security audits. A breach at a single edge node could potentially compromise a significant amount of data, leading to serious consequences for both individuals and businesses. Consider the scenario of a smart city using edge computing for traffic management. A compromised edge node could lead to inaccurate traffic information, potentially causing accidents or traffic jams. Effective security protocols and continuous monitoring are essential to mitigate these risks.

Managing Data Security and Privacy in Edge Deployments

Addressing the security challenges requires a multi-pronged approach. Implementing strong encryption protocols for data both in transit and at rest is paramount. This ensures that even if data is intercepted, it remains unreadable without the correct decryption keys. Furthermore, robust access control mechanisms, including role-based access control (RBAC), are essential to limit access to sensitive data only to authorized personnel and applications. Regular security audits and penetration testing can help identify vulnerabilities and ensure the effectiveness of security measures. Finally, adhering to relevant data privacy regulations, such as GDPR, is crucial to maintain user trust and comply with legal requirements. This involves implementing mechanisms for data anonymization and user consent management. Companies need to invest in comprehensive security solutions and training programs to ensure their edge deployments are secure and compliant.
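As an illustration of role-based access control, the Python sketch below checks a caller’s roles against a role-to-permission table and denies anything not explicitly granted. The roles and permissions are hypothetical examples for an edge node; production systems would also authenticate the caller and load policies from a central store.

```python
from enum import Enum
from typing import Dict, Iterable, Set


class Permission(Enum):
    READ_TELEMETRY = "read_telemetry"
    WRITE_CONFIG = "write_config"
    EXPORT_RAW_DATA = "export_raw_data"


# Hypothetical roles for an edge node; a real deployment would load these
# from a central policy store rather than hard-coding them.
ROLE_PERMISSIONS: Dict[str, Set[Permission]] = {
    "operator": {Permission.READ_TELEMETRY},
    "admin": {Permission.READ_TELEMETRY, Permission.WRITE_CONFIG},
    "privacy_officer": {Permission.READ_TELEMETRY, Permission.EXPORT_RAW_DATA},
}


def is_allowed(roles: Iterable[str], permission: Permission) -> bool:
    """Deny by default: allow only if at least one known role grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def require(roles: Iterable[str], permission: Permission) -> None:
    """Raise PermissionError instead of returning False, for use at API entry points."""
    if not is_allowed(roles, permission):
        raise PermissionError(f"missing permission: {permission.value}")


print(is_allowed(["operator"], Permission.WRITE_CONFIG))           # False
print(is_allowed(["operator", "admin"], Permission.WRITE_CONFIG))  # True
```

Unknown roles map to an empty permission set, so a typo in a role name fails closed rather than open.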

Cost Implications of Implementing and Maintaining Edge Computing Infrastructure

The initial investment in edge computing infrastructure can be substantial. This includes the cost of purchasing and deploying edge servers, networking equipment, and software. Ongoing maintenance and operational costs also contribute significantly to the overall expenditure. This includes costs associated with power consumption, cooling, security updates, and personnel to manage and maintain the infrastructure. For example, a large retail chain deploying edge computing for real-time inventory management would need to invest in a network of edge servers across its many stores, leading to substantial capital and operational expenses. Careful planning and a thorough cost-benefit analysis are essential before embarking on an edge computing project to ensure its financial viability.
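A first-pass cost-benefit analysis can be as simple as adding up capital and operating costs and comparing them with the expected yearly benefit. The sketch below does this for a fleet of identical sites, loosely following the retail example. Every figure is a hypothetical placeholder, and the payback calculation ignores discounting, financing, and hardware refresh cycles.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class EdgeSiteCosts:
    capex_per_site: float          # one-off: servers, networking, installation
    opex_per_site_per_year: float  # recurring: power, cooling, updates, staff time


def total_cost_of_ownership(sites: int, years: int, costs: EdgeSiteCosts) -> float:
    """Capital plus operating cost for a fleet of identical edge sites."""
    return sites * (costs.capex_per_site + years * costs.opex_per_site_per_year)


def payback_years(costs: EdgeSiteCosts, annual_benefit_per_site: float) -> Optional[float]:
    """Simple (undiscounted) payback period per site; None if it never pays back."""
    net_per_year = annual_benefit_per_site - costs.opex_per_site_per_year
    if net_per_year <= 0:
        return None
    return costs.capex_per_site / net_per_year


# All figures below are hypothetical placeholders, not industry benchmarks.
store = EdgeSiteCosts(capex_per_site=20_000, opex_per_site_per_year=5_000)
print(total_cost_of_ownership(sites=200, years=5, costs=store))  # 9000000
print(payback_years(store, annual_benefit_per_site=13_000))      # 2.5
```

If the yearly benefit per site does not exceed its running costs, the function returns `None`: no amount of waiting recovers the initial investment.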

Future Trends and Potential of Edge Computing for Internet Speed

The future of internet speed is closely linked to the continued evolution and expansion of edge computing. As more devices connect and demand for bandwidth keeps climbing, the limitations of centralized cloud computing become increasingly apparent. Edge computing offers a pathway past these hurdles, promising faster, more responsive, and more accessible internet for everyone. Advancements in AI, machine learning, and related technologies are poised to accelerate this progress.

The potential of edge computing to revolutionize internet speed rests on its ability to process data closer to the source, minimizing latency and maximizing efficiency. This shift towards decentralized processing has far-reaching implications, impacting everything from streaming services and online gaming to autonomous vehicles and industrial automation. The integration of advanced technologies will further amplify these benefits, paving the way for a truly ubiquitous and high-speed internet experience.

AI and Machine Learning’s Role in Optimizing Edge Computing for Speed

AI and machine learning are set to play a pivotal role in optimizing edge computing for speed. These technologies can analyze large volumes of data from many sources to predict network congestion, optimize resource allocation, and adjust network configurations in real time. For example, machine learning algorithms can analyze traffic patterns to anticipate peak usage periods and allocate resources before bottlenecks form. This predictive capability reduces latency and helps maintain consistent high-speed performance, even during periods of high demand. Imagine a self-driving car navigating a busy intersection with the help of data processed at a nearby edge node, a scenario that is far harder to support with the round-trip delays of distant cloud processing.
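A full machine learning pipeline is beyond a short example, so the Python sketch below swaps in simple exponential smoothing, a basic forecasting method, to show the predict-then-provision loop. The load history, per-server capacity, and 20% headroom are all hypothetical.

```python
import math
from typing import Sequence


def ewma_forecast(history: Sequence[float], alpha: float = 0.5) -> float:
    """One-step-ahead forecast using simple exponential smoothing.

    Higher alpha reacts faster to recent load; lower alpha smooths out noise.
    """
    if not history:
        raise ValueError("history must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]
    for observed in history[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


def servers_needed(forecast_rps: float, capacity_per_server_rps: float, headroom: float = 0.2) -> int:
    """Edge servers to provision for the forecast load plus a safety margin."""
    return max(1, math.ceil(forecast_rps * (1 + headroom) / capacity_per_server_rps))


# Hypothetical requests/s observed at one edge site over the last four intervals.
load = [100.0, 200.0, 300.0, 400.0]
forecast = ewma_forecast(load)
print(forecast, servers_needed(forecast, capacity_per_server_rps=100.0))  # 312.5 4
```

Simple exponential smoothing lags behind a steady upward trend (it forecasts 312.5 requests/s right after seeing 400), which is exactly the kind of weakness that trend-aware methods such as Holt’s linear model, or learned models, aim to fix.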

Emerging Technologies Enhancing Edge Computing Capabilities

Several emerging technologies are poised to significantly enhance the capabilities of edge computing. The development of faster, more energy-efficient processors designed specifically for edge deployments is crucial. Continued 5G rollouts and, further ahead, 6G networks will also play a significant role, providing the bandwidth and low-latency connections that edge computing demands. Furthermore, advancements in technologies like blockchain can enhance security and data integrity within edge networks, helping ensure reliable and secure data processing at the edge. Imagine a smart city using blockchain to securely record traffic-flow data processed at the edge, supporting efficient and safe urban mobility.
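The integrity property that blockchains rely on comes from hash chaining: each record stores the hash of the one before it, so editing an old entry breaks every later link. The sketch below implements only that hash chain, not a blockchain (there is no consensus or replication), using SHA-256 from Python’s standard library. The junction IDs and traffic records are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _hash_record(prev_hash: str, payload: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(chain: List[Dict[str, Any]], payload: Dict[str, Any]) -> None:
    """Append a record whose hash covers its payload and the previous hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    record = dict(payload)  # copy so later edits to the caller's dict don't leak in
    chain.append({"prev": prev_hash, "payload": record, "hash": _hash_record(prev_hash, record)})


def verify(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every link; any edited record invalidates the chain."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash or entry["hash"] != _hash_record(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


log: List[Dict[str, Any]] = []
append_record(log, {"junction": "J-12", "vehicles_per_min": 42})
append_record(log, {"junction": "J-12", "vehicles_per_min": 57})
print(verify(log))                         # True
log[0]["payload"]["vehicles_per_min"] = 5  # tamper with an earlier reading
print(verify(log))                         # False
```

A hash chain makes tampering detectable, not impossible: someone who can rewrite the whole log can recompute every hash, which is the gap that distributing copies across many independent nodes is meant to close.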

Predictions on Edge Computing’s Future Role in Shaping Internet Speed and Accessibility

We can predict that edge computing will become increasingly integral to the internet’s infrastructure. By 2030, we anticipate that a significant portion of data processing will occur at the edge, dramatically reducing latency and improving internet speed, including in underserved areas once edge infrastructure reaches them. This shift will lead to the proliferation of new applications and services that rely on real-time data processing, such as augmented reality, virtual reality, and the Internet of Things (IoT). Access to high-speed internet could become more equitable, helping bridge the digital divide and giving individuals and communities access to information and opportunities previously unavailable. The impact could be akin to the transition from dial-up to broadband: a fundamental shift in how we interact with the digital world.

Potential Breakthroughs Revolutionizing Edge Computing’s Impact on Internet Speed

Several potential breakthroughs could dramatically accelerate the impact of edge computing on internet speed. These advancements will require collaborative efforts across various sectors, from hardware manufacturers to software developers and network operators.

- Development of ultra-low-power, high-performance edge processors capable of handling complex computations with minimal energy consumption.

- Creation of highly efficient and secure data transmission protocols specifically optimized for edge networks.

- Advancements in AI and machine learning algorithms capable of predicting and adapting to network conditions in real time, maintaining strong performance as conditions change.

- Integration of quantum computing capabilities into edge devices, enabling unprecedented processing power for complex tasks.

Case Studies

Real-world applications of edge computing are proving its transformative power in boosting internet speeds across various sectors. Let’s dive into some compelling examples showcasing the tangible benefits and diverse approaches employed. These case studies highlight not only the measurable improvements in internet speed but also the crucial factors contributing to their success.

Edge Computing in Online Gaming

The online gaming industry, with its high demand for low-latency connections, has been a significant early adopter of edge computing. Cloud providers such as Microsoft Azure and Amazon Web Services (AWS) have deployed edge servers closer to players, significantly reducing the distance data travels. This translates directly into smoother gameplay and reduced lag. For instance, one major game developer reported a 70% reduction in latency for players located near newly deployed edge servers, resulting in a more responsive and enjoyable gaming experience. The improved speed led to a noticeable increase in player satisfaction and retention rates.

Edge Computing in Healthcare: Telemedicine Enhancement

The implementation of edge computing in telemedicine is revolutionizing remote patient monitoring and diagnostics. By processing medical data (like ECG readings or real-time video consultations) closer to the source (the patient’s home or clinic), edge computing minimizes the delay associated with transmitting data to distant servers. One notable example is a remote patient monitoring system that uses edge devices to analyze electrocardiograms in real-time. This allows for immediate detection of potentially life-threatening arrhythmias, leading to faster intervention and improved patient outcomes. The system experienced a 95% reduction in data transmission time compared to cloud-based solutions, allowing for critical alerts to reach medical professionals almost instantly.

Edge Computing in Manufacturing: Industrial IoT Optimization

In manufacturing, the Internet of Things (IoT) generates vast amounts of data from sensors and machines. Edge computing enables real-time analysis of this data at the factory floor, facilitating predictive maintenance and optimizing production processes. A large automotive manufacturer, for example, deployed edge devices to analyze data from its assembly line robots. This enabled real-time monitoring of robot performance and the prediction of potential malfunctions, resulting in a 30% reduction in downtime and a significant improvement in overall efficiency. The improved speed of data processing led to immediate adjustments in the production line, minimizing disruptions.
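The manufacturer’s actual models aren’t described, so as a simple stand-in for predictive maintenance the Python sketch below flags sensor readings that fall far outside a rolling baseline (a z-score test), the kind of check that can run directly on a factory-floor edge device. The window size, the 3-standard-deviation threshold, and the vibration data are hypothetical.

```python
import statistics
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple


def zscore_anomalies(
    readings: Iterable[float], window: int = 20, threshold: float = 3.0
) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for readings far outside the recent rolling window.

    A reading is flagged when it lies more than `threshold` population standard
    deviations from the mean of the previous `window` readings.
    """
    if window < 2:
        raise ValueError("window must hold at least two readings")
    recent: Deque[float] = deque(maxlen=window)
    for index, value in enumerate(readings):
        if len(recent) == window:
            mean = statistics.fmean(recent)
            spread = statistics.pstdev(recent)
            if spread > 0 and abs(value - mean) / spread > threshold:
                yield index, value
        recent.append(value)


# Hypothetical vibration readings from one robot: steady, then a sudden spike.
vibration = [1.0, 1.1] * 10 + [1.05, 5.0]
print(list(zscore_anomalies(vibration)))  # [(21, 5.0)]
```

Flagged readings still enter the rolling window here, which keeps the sketch short; a production detector might exclude them so that one spike doesn’t mask the next.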

Comparison of Approaches and Success Factors

The successful implementation of edge computing across these diverse industries highlights several common success factors:

- Strategic deployment of edge servers: Proximity to end-users is paramount for minimizing latency.

- Robust network infrastructure: A reliable and high-bandwidth network is essential to support edge operations.

- Data security and privacy: Secure data handling and processing are crucial, especially in sensitive sectors like healthcare.

- Scalability and flexibility: The edge computing infrastructure must be able to adapt to changing demands.

- Integration with existing systems: Seamless integration with existing IT infrastructure is vital for a smooth transition.

While the specific approaches varied based on the industry and application, the core principle – bringing computation closer to the data source – remained consistent across all three case studies. The measurable improvements in internet speed and operational efficiency demonstrate the significant potential of edge computing to transform various sectors.

Final Wrap-Up

The potential of edge computing to enhance internet speed is immense. As 5G and other high-bandwidth networks mature, and AI further refines edge computing’s capabilities, we can anticipate a future where lag is far less of a problem. From gaming to healthcare, the applications are vast and the benefits are clear: a faster, more responsive, and more efficient internet experience for all. The journey towards a truly seamless online world is underway, and edge computing is leading the charge.